What is the main idea of levels of processing theory? At its core, this influential theory posits that the depth at which information is processed during encoding strongly affects how well it is remembered. Rather than focusing on how long information is rehearsed, which is characteristic of shallow processing, levels of processing emphasizes the *meaning* and *elaboration* of information (deep processing) as the key determinants of long-term retention.

This isn’t just about rote memorization; it’s about actively engaging with the material, making connections, and creating rich mental representations that enhance recall. This exploration delves into the nuances of shallow versus deep processing, examining various processing types and the crucial role of elaboration in solidifying memories.

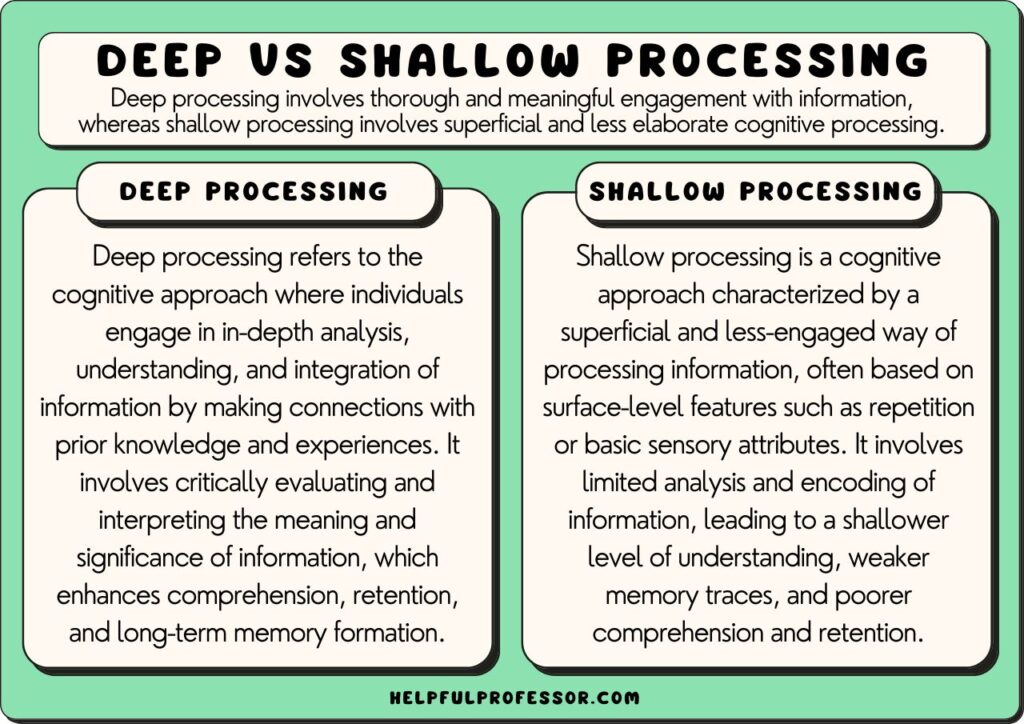

The theory, initially proposed by Fergus Craik and Robert Lockhart in 1972, challenged prevailing models of memory that primarily focused on structural distinctions between memory stores. Instead, it proposed a continuum of processing depth, ranging from shallow (structural) to deep (semantic) encoding. Shallow processing involves superficial engagement with information, like focusing on the physical characteristics of a word, while deep processing involves meaningful analysis and integration with existing knowledge.

This difference in processing depth, according to the theory, directly influences the strength and durability of the resulting memory trace.

Introduction to Levels of Processing Theory

Levels of Processing Theory (LOP) proposes that the depth of processing involved in encoding information significantly influences its memorability. Instead of focusing on distinct memory stores, LOP emphasizes the processes involved in encoding information, suggesting that deeper, more meaningful processing leads to stronger, more durable memory traces. This contrasts with earlier models that focused on structural distinctions between sensory, short-term, and long-term memory.

The theory posits that information can be processed at various levels, ranging from shallow to deep. Shallow processing involves surface features of a word, such as its typeface or its sound, while deep processing involves semantic analysis, focusing on meaning and relationships to existing knowledge. The deeper the processing, the stronger the memory trace, resulting in better retention and recall. Imagine trying to remember a phone number: simply repeating it (shallow processing) is typically less effective than associating it with a meaningful event or person (deep processing).

Historical Development of Levels of Processing Theory

The genesis of LOP can be traced to the work of Fergus Craik and Robert Lockhart, who formally introduced the theory in their seminal 1972 paper. Prior to this, memory research was largely dominated by models emphasizing distinct memory stores. Craik and Lockhart challenged this perspective, proposing a continuous dimension of processing depth rather than discrete stages. Their work highlighted the importance of the type of processing, rather than the time spent processing, in determining memory performance.

Subsequent research has refined and expanded upon their original framework, addressing various aspects and limitations. The theory didn’t emerge fully formed; it grew out of a gradual shift in perspective, building on earlier research into how semantic processing influences memory.

Key Researchers and Their Contributions

Fergus Craik and Robert Lockhart are considered the founders of LOP, and their 1972 paper serves as its cornerstone. Their work emphasized the continuous nature of processing depth and its impact on memory. Experiments in this tradition used orienting tasks at different levels of processing and demonstrated a clear relationship between processing depth and memory performance. For example, tasks requiring participants to judge the physical characteristics of words (shallow processing) resulted in poorer recall than tasks requiring semantic judgments (deep processing). Subsequent researchers built upon Craik and Lockhart’s work, refining and expanding the theory.

Endel Tulving’s work on encoding specificity further supported LOP by showing that memory retrieval is optimized when the context at encoding matches the context at retrieval. This aligns with LOP’s emphasis on the importance of meaningful processing, as meaningful connections create richer retrieval cues. Many other researchers have contributed to understanding the nuances of LOP, exploring factors like individual differences in processing strategies and the influence of various cognitive processes on memory.

Their studies have further validated the core principles of LOP, while also revealing its complexities and limitations. The ongoing research continues to shape our understanding of how information is encoded and retrieved from memory.

Shallow Processing vs. Deep Processing

Levels of processing theory posits that the depth of processing significantly impacts memory retention. Shallow processing focuses on superficial features of information, while deep processing engages with the meaning and significance of the information. This difference leads to vastly different outcomes in terms of memory encoding and retrieval.

Shallow and deep processing represent two ends of a continuum, not distinct categories. The cognitive processes involved differ considerably, leading to variations in the strength and durability of memory traces. Shallow processing relies on sensory features and structural aspects, while deep processing emphasizes semantic analysis and personal relevance. The key distinction lies in the level of cognitive engagement and the resulting strength of memory encoding.

Shallow Processing Techniques and Everyday Examples

Shallow processing involves encoding information based on its surface characteristics. This type of processing is often passive and requires minimal cognitive effort.

Imagine glancing briefly at a phone number in order to dial it. You attend only to the visual appearance of the digits, not to any meaning or context. This is a classic example of shallow processing, and you likely won’t remember the number later. Another instance is rote repetition of a vocabulary list without understanding the definitions of the words: the focus remains on the visual or auditory form of the words, not their deeper meaning.

Deep Processing Techniques and Everyday Examples

Deep processing involves encoding information by relating it to pre-existing knowledge, generating personal connections, or engaging in elaborative rehearsal. This active process leads to stronger and longer-lasting memories.

Consider learning about a historical event by researching its causes, consequences, and societal impact. You are not simply memorizing dates and names, but rather understanding the event’s significance within a broader context. This deep engagement with the material results in a richer and more enduring memory trace. Another example is actively connecting new information to personal experiences: relating a new fact about a favorite hobby to something you have done yourself makes the fact easier to remember.

Cognitive Processes Involved in Shallow Processing

Shallow processing primarily involves sensory registers and short-term memory. The information is encoded based on its physical characteristics (e.g., the sound of a word, the visual appearance of a letter). Little to no semantic processing occurs, resulting in a fragile memory trace easily susceptible to decay. The cognitive effort is minimal, relying on basic perceptual processes.

Cognitive Processes Involved in Deep Processing

Deep processing engages higher-order cognitive functions, such as semantic encoding, elaborative rehearsal, and self-referential processing. Information is encoded based on its meaning, significance, and personal relevance. This active engagement strengthens memory traces, making them more resistant to decay and easier to retrieve. The cognitive effort is substantial, involving the integration of new information with existing knowledge structures.

Types of Processing

Levels of processing theory posits that deeper processing leads to better memory retention. Understanding the various types of processing involved is crucial to grasping the nuances of this theory. This section delves into different processing categories, exploring their impact on memory and highlighting their interplay within a cognitive framework.

Processing Type Categorization

This section identifies and categorizes various processing types within the framework of human cognitive architecture. We will examine top-down and bottom-up processing, parallel and serial processing, automatic and controlled processing, and implicit and explicit processing. These categories are not mutually exclusive; often, multiple types of processing occur simultaneously during information processing.

Hierarchical Structure of Processing Types

The following table organizes the identified processing types hierarchically, illustrating their relationships. The memory strength rating reflects the general tendency, not an absolute measure, and can vary based on individual differences and specific task demands.

| Processing Type | Description | Example | Memory Strength (1-5) |
|---|---|---|---|
| Top-Down Processing | Processing driven by pre-existing knowledge and expectations. | Reading a sentence with a misspelled word but still understanding the meaning based on context. | 4 |
| Bottom-Up Processing | Processing driven by sensory input; data-driven. | Identifying individual letters to read a word. | 3 |
| Parallel Processing | Processing multiple aspects of information simultaneously. | Recognizing the color, shape, and size of an object at the same time. | 4 |
| Serial Processing | Processing information sequentially, one step at a time. | Mentally solving a complex mathematical problem step-by-step. | 3 |
| Automatic Processing | Processing that occurs unconsciously and effortlessly. | Recognizing familiar faces. | 2 |
| Controlled Processing | Processing that requires conscious effort and attention. | Learning a new language. | 5 |
| Implicit Processing | Processing that occurs without conscious awareness. | Developing a preference for a certain type of music. | 3 |
| Explicit Processing | Processing that involves conscious awareness and intentional effort. | Studying for an exam. | 5 |

Processing Type and Memory Retention

The type of processing significantly influences memory retention.

- Top-Down Processing: Utilizing prior knowledge enhances encoding and retrieval, leading to stronger memory traces. Schema theory supports this, suggesting that new information is integrated into existing mental frameworks.

- Bottom-Up Processing: While less effective on its own, bottom-up processing provides the foundational sensory data necessary for later deeper processing and memory consolidation.

- Parallel Processing: Allows for efficient encoding of multiple aspects of information, potentially strengthening memory through richer associations.

- Serial Processing: Can lead to stronger memory for steps in a sequence but may be less efficient for overall comprehension and retention.

- Automatic Processing: While effortless, automatic processing often results in weaker, less accessible memories.

- Controlled Processing: The conscious effort involved in controlled processing leads to robust encoding and long-term retention. Elaborative rehearsal, a key component of controlled processing, significantly improves memory.

- Implicit Processing: Implicit memories are often durable but difficult to consciously recall. Procedural memory, a form of implicit memory, exemplifies this.

- Explicit Processing: Explicit memories, formed through conscious effort, are generally easily accessible and readily recalled.

Illustrative Example Scenario

Imagine learning a new vocabulary word. Initially, you might process the word’s visual appearance (bottom-up, parallel processing). Then, you might repeat the word several times (controlled, serial processing). Connecting the word to its definition and relating it to existing knowledge (top-down, explicit processing) would lead to a deeper understanding and better retention. Over time, recognizing the word becomes automatic (automatic processing).

Limitations and Considerations

The hierarchical structure presented is a simplification of a complex cognitive process. The interplay between different processing types is dynamic and context-dependent. Alternative organizational structures, perhaps focusing on the interaction between conscious and unconscious processing or the interplay of attentional mechanisms, could provide valuable insights. Categorizing processing types also involves inherent biases, as the distinctions can be blurry and overlapping.

Further Research Directions

Further research could investigate which balance of processing types best supports memory retention across different learning contexts and individual differences. The influence of neurobiological factors on different processing types and their relationship to memory consolidation also warrants further investigation. Exploring the effectiveness of interventions designed to enhance specific processing types could lead to significant advancements in learning and memory enhancement strategies.

The Role of Elaboration

Elaboration, the process of actively relating new information to existing knowledge, is a cornerstone of deep processing and significantly impacts long-term memory retention. Unlike shallow processing, which focuses on superficial features, elaboration involves creating rich connections and meaningful associations, transforming fleeting sensory information into durable memory traces. This section delves into the mechanisms, strategies, and implications of elaboration in learning and memory.

Importance of Elaboration in Deep Processing

Elaboration dramatically enhances long-term memory retention compared to shallow processing. Studies consistently show that elaborative encoding leads to superior recall and recognition. For instance, Craik and Tulving’s (1975) seminal work demonstrated that questions requiring deeper semantic processing (e.g., “Does this word fit into this sentence?”) resulted in significantly better memory performance than questions focusing on superficial features (e.g., “Is this word in capital letters?”).

The effect sizes in such studies often show a substantial advantage for deep processing, with recall rates sometimes doubling or even tripling compared to shallow processing. This superior performance stems from the creation of a more intricate and interconnected network of memories. The cognitive mechanisms underlying this effectiveness include self-referential processing (relating information to oneself) and relational processing (connecting new information to existing knowledge structures).

Self-referential processing, for example, allows individuals to integrate new information into their autobiographical memory, making it more personally relevant and memorable. Relational processing, on the other hand, involves creating links between different pieces of information, forming a coherent and interconnected knowledge network. Compared to rehearsal, which involves rote repetition, and imagery, which relies on visual representations, elaboration integrates both, creating a richer, more meaningful memory trace.

For example, simply repeating a phone number (rehearsal) is less effective than imagining the number displayed on a familiar object (imagery) and further elaborating on why that particular object is significant to you (elaboration).

Examples of Elaboration Enhancing Memory Encoding

Elaboration strategies significantly enhance memory encoding.

- Self-referential elaboration: Imagine learning about the concept of “cognitive dissonance.” Instead of passively reading the definition, a student might reflect on a personal experience where they experienced cognitive dissonance, strengthening the memory trace through self-connection. In contrast, shallow processing would involve simply reading the definition without personal reflection. The self-referential approach leads to superior recall.

- Visual imagery elaboration: To remember a historical event, instead of just reading the facts, a student might create a vivid mental image depicting the scene, adding details and emotions. Shallow processing might involve simply reading the text without creating any mental images. The visual imagery approach would lead to a richer and more memorable representation.

- Organizational elaboration: Learning a list of vocabulary words, a student might organize them into categories based on meaning or create a hierarchical structure showing relationships. Shallow processing might involve rote memorization without any organization. The organized approach improves recall by creating meaningful connections.

Impact of Different Elaboration Strategies on Memory Performance

The following table compares three elaboration strategies:

| Strategy Name | Description of Strategy | Example | Predicted Impact on Recall | Justification for Predicted Impact |
|---|---|---|---|---|
| Creating Analogies | Relating a new concept to a familiar one using an analogy. | Explaining photosynthesis by comparing it to a factory producing energy. | High | Analogies create a bridge between known and unknown information, facilitating deeper understanding and better recall. |
| Generating Examples | Thinking of specific instances that illustrate a concept. | Generating examples of different types of prejudice to understand the concept better. | Medium | Examples provide concrete instances that make abstract concepts more tangible and memorable, but may not be as effective as analogies. |
| Explaining in Own Words | Rephrasing a concept in one’s own words to demonstrate understanding. | Explaining the theory of evolution using one’s own words. | Medium | This requires active processing and understanding, but may lack the structural support of analogies. |

A hypothetical experiment could compare these strategies. Participants would be randomly assigned to one of three groups, each using a different elaboration strategy to learn a set of facts. Memory would be assessed using a free recall test. The predicted results would show that the analogy group would have the highest recall, followed by the “explain in own words” and “generate examples” groups.
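The bookkeeping for such a hypothetical experiment can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the strategy labels, the function names `assign_groups` and `free_recall_score`, and the scoring rule (proportion of studied items recalled, ignoring order and letter case) are all invented here and are not taken from any published procedure. The sketch produces no results; it only shows how random assignment and free-recall scoring might be set up.

```python
import random

# Hypothetical labels for the three strategies compared in the table.
STRATEGIES = ["analogies", "examples", "own_words"]


def assign_groups(participant_ids, strategies=STRATEGIES, seed=0):
    """Randomly assign participants to strategies with near-equal group sizes."""
    rng = random.Random(seed)      # seeded so the assignment is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)          # random order removes any systematic bias
    groups = {s: [] for s in strategies}
    for i, pid in enumerate(shuffled):
        # Deal participants out round-robin, like dealing cards.
        groups[strategies[i % len(strategies)]].append(pid)
    return groups


def free_recall_score(studied, recalled):
    """Proportion of studied items present in a participant's free recall.

    Order is ignored, matching is case-insensitive, and items that were
    never studied (intrusions) do not count toward the score.
    """
    studied_set = {item.strip().lower() for item in studied}
    if not studied_set:
        raise ValueError("studied list must not be empty")
    recalled_set = {item.strip().lower() for item in recalled}
    return len(studied_set & recalled_set) / len(studied_set)


groups = assign_groups(range(30))
score = free_recall_score(["cat", "tree", "lamp", "river"], ["Tree", "river", "spoon"])
```

With 30 participants and three strategies, each group receives 10 people. Group means of `free_recall_score` could then be compared across strategies, and whether the predicted ordering appears is an empirical question that the sketch does not answer.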

Elaboration and Different Learning Styles

The effectiveness of elaboration strategies is often said to vary with learning styles, although controlled studies have found little evidence that matching instruction to a preferred style improves learning. On the learning-styles view, visual learners might benefit most from visual imagery, auditory learners from verbal elaboration, and kinesthetic learners from activities involving physical interaction. For example, a visual learner might create mind maps, while an auditory learner might discuss concepts with others. In practice, educators can use diverse methods that incorporate several elaboration strategies, so that every student has more than one route to deep processing.

Limitations of Elaboration

Elaboration, while beneficial, has limitations. Excessive elaboration can lead to cognitive overload, especially with complex information. Time constraints may also limit the feasibility of extensive elaboration. In situations where immediate recall is prioritized over long-term retention, simpler strategies might be more effective. Furthermore, inappropriate or misleading elaborations can distort or interfere with accurate memory encoding.

Factors Affecting Processing Depth

The depth of processing, a cornerstone of Levels of Processing theory, isn’t a static entity. Numerous factors interact to influence how deeply we encode information, impacting its memorability and later retrieval. Understanding these influences provides a more comprehensive picture of how memory works and how we can optimize learning.

These factors can be broadly categorized, but they often interact in complex ways, creating a multifaceted influence on memory formation. The interplay between these factors can be seen as a dynamic system, where the outcome depends on the specific combination of influences at play.

Motivation and Interest

Motivation and interest act as powerful catalysts, profoundly shaping the depth of processing. When we are intrinsically motivated—driven by genuine curiosity or a personal stake in the information—we tend to engage in deeper, more elaborate processing. This deeper engagement leads to stronger memory traces and improved recall. Conversely, when motivation is low or interest is lacking, processing becomes shallower, resulting in weaker, more fleeting memories.

Imagine learning about a topic you find fascinating versus a topic you find tedious. The former will likely be processed at a deeper level, with more connections made to pre-existing knowledge and a more active engagement with the material. The latter might be passively read or heard, with minimal effort dedicated to understanding or encoding. This difference in engagement directly correlates with the strength of the resulting memory.

Prior Knowledge and Schema

Existing knowledge acts as a scaffold upon which new information is built. When new information aligns with pre-existing schemas (mental frameworks for understanding the world), processing is facilitated. The brain readily integrates new information into established frameworks, leading to deeper and more meaningful encoding. Conversely, when new information clashes with or is unrelated to existing schemas, processing can be more challenging and less deep.

For example, learning about a new type of bird will be easier if you already possess a strong understanding of ornithology. The pre-existing knowledge provides a structure for the new information to fit into, enhancing comprehension and memory.

Distraction and Interference

Environmental factors such as distractions and interference can significantly hinder deep processing. A noisy environment, competing stimuli, or interruptions during learning can disrupt attention and prevent the formation of strong memory traces. These distractions force the brain to divide its cognitive resources, resulting in shallower processing of the target information. Think about trying to study in a crowded coffee shop versus a quiet library.

The quiet library environment allows for more focused attention and deeper processing, while the coffee shop’s distractions lead to less effective encoding.

Cognitive Load

The amount of information presented at once, or the cognitive load, also impacts processing depth. When the cognitive load is too high—meaning too much information is presented too quickly—the brain can become overwhelmed, leading to superficial processing. This often results in a failure to fully grasp the information’s meaning or significance. Conversely, presenting information in smaller, manageable chunks allows for more focused attention and deeper processing.

Effective learning strategies, such as spaced repetition and chunking, are designed to mitigate cognitive overload and promote deeper encoding.

Flowchart Illustrating Interaction of Factors

Imagine a flowchart. At the top is a central box labeled “Depth of Processing.” Several arrows point into this central box, each originating from a box representing one of the factors discussed above: Motivation/Interest, Prior Knowledge, Distraction/Interference, and Cognitive Load. Each arrow is labeled to indicate the type of influence (positive or negative). For example, the arrow from “Motivation/Interest” would be labeled “+ (positive)”, while the arrow from “Distraction/Interference” would be labeled “- (negative)”.

The flowchart visually depicts how these factors converge to determine the ultimate depth of processing. The strength of each arrow could be represented by its thickness, reflecting the relative influence of each factor in a given situation. For instance, in a highly motivating context, the arrow from “Motivation/Interest” would be significantly thicker than in a less motivating one.

This visual representation illustrates the dynamic interplay between these factors and their collective impact on memory formation.
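The flowchart described above can also be written down as a small data structure. The following Python sketch is a toy illustration, not a validated model. The name `net_influence`, the 0-to-1 strength scale, and the simple signed sum are assumptions made here. The signs for motivation and distraction come from the flowchart description, while the signs for prior knowledge (positive) and cognitive load (negative) are read off the corresponding sections above.

```python
# Each factor is an arrow into "Depth of Processing" with a sign.
# +1 means the factor tends to deepen processing, -1 that it tends
# to make processing shallower (an interpretation of the text).
FACTORS = {
    "motivation_interest": +1,
    "prior_knowledge": +1,
    "distraction_interference": -1,
    "cognitive_load": -1,
}


def net_influence(strengths):
    """Combine arrow strengths into one signed number.

    `strengths` maps factor names to a value between 0 and 1, playing the
    role of arrow thickness in the flowchart. Factors left out default to 0.
    A positive result suggests conditions that favor deeper processing.
    """
    unknown = set(strengths) - set(FACTORS)
    if unknown:
        raise KeyError(f"unknown factors: {sorted(unknown)}")
    total = 0.0
    for name, sign in FACTORS.items():
        strength = strengths.get(name, 0.0)
        if not 0.0 <= strength <= 1.0:
            raise ValueError(f"{name} strength must be between 0 and 1")
        total += sign * strength
    return total


# A highly motivating topic studied in a moderately noisy coffee shop.
coffee_shop = net_influence(
    {"motivation_interest": 1.0, "distraction_interference": 0.5}
)
```

A plain sum treats the factors as independent, which the text itself says they are not. The sketch is useful only for making the direction and relative thickness of each arrow explicit.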

Applications of the Theory

Levels of Processing theory, with its elegant simplicity, offers profound implications for various fields. Its core principle – that deeper processing leads to better retention – translates into practical strategies for enhancing learning, memory, and even marketing effectiveness. Understanding how information is processed allows for the strategic design of experiences that maximize memory encoding and retrieval.

The theory’s impact spans diverse areas, offering a framework for optimizing how we learn, remember, and persuade. By tailoring information presentation and engagement techniques to encourage deeper processing, we can significantly improve the effectiveness of educational interventions, learning strategies, and marketing campaigns.

Educational Applications

Effective teaching hinges on facilitating deep processing. Instead of rote memorization, educators can employ techniques that encourage students to actively engage with the material. This could involve asking students to relate new concepts to their prior knowledge (elaborative rehearsal), prompting them to explain concepts in their own words (generating explanations), or encouraging them to solve problems that require application of the learned material (active recall).

For example, instead of simply lecturing on photosynthesis, a teacher could have students design a model of the process, thereby forcing deeper engagement and creating a richer memory trace. Imagine a vibrant classroom where students, working collaboratively, construct miniature ecosystems, meticulously labeling components and explaining the interconnectedness of energy flow. This active, hands-on approach would foster significantly deeper processing than passive listening.

Implications for Learning and Memory Strategies

Levels of Processing theory directly informs effective learning and memory strategies. Techniques like elaborative interrogation (asking oneself “why” questions about the material), self-explanation (explaining concepts to oneself in one’s own words), and interleaving (mixing different subjects or concepts during study sessions) all promote deeper processing and, consequently, better retention. Visual imagery, another powerful tool, helps encode information in a more meaningful and memorable way, transforming abstract concepts into vivid mental pictures.

For example, a student studying historical events could create a mental movie of the period, complete with costumes, settings, and dramatic action, resulting in far superior retention compared to simply reading a textbook description.

Applications in Advertising and Marketing

The principles of Levels of Processing are powerfully leveraged in advertising and marketing to enhance message memorability and brand recall. Advertisements that encourage active engagement, such as interactive games or quizzes related to the product, lead to deeper processing than passive exposure to static images or text. Similarly, ads that evoke emotional responses or connect the product to the consumer’s personal experiences tap into deeper processing levels, resulting in stronger brand associations.

Consider a car advertisement that doesn’t just showcase the vehicle’s features but depicts a family embarking on a scenic road trip, filled with laughter and joy. This emotionally resonant narrative encourages deeper processing and strengthens the link between the car and positive feelings, ultimately increasing the likelihood of purchase.

Strengths of the Theory

Levels of Processing (LOP) theory, despite its limitations, possesses considerable explanatory power regarding human memory. Its strength lies in its intuitive appeal and its ability to account for a range of memory phenomena through a relatively simple framework. The theory’s robustness is evidenced by decades of research providing consistent support for its core tenets.

Key Strengths and Supporting Evidence

The LOP theory’s strengths are demonstrably supported by empirical evidence across various methodologies. Three key strengths stand out: its predictive power regarding depth of processing and memory performance, its ability to explain the interaction between encoding and retrieval processes, and its relative simplicity and broad applicability.

Predictive Power

First, the theory accurately predicts that deeper processing leads to better memory. Numerous experiments have consistently demonstrated a positive relationship between depth of processing and subsequent memory performance. For instance, Craik and Tulving’s (1975) seminal work showed that words processed semantically (e.g., by considering their meaning) were remembered significantly better than words processed visually (e.g., by judging whether they were printed in capital letters) or phonologically (e.g., by judging whether they rhymed with another word).

This experimental evidence strongly supports the theory’s core tenet.

Encoding-Retrieval Interactions

Second, LOP theory effectively explains the interaction between encoding and retrieval processes. The principle of encoding specificity (Tulving & Thomson, 1973) aligns well with LOP; memory is optimized when the retrieval context matches the encoding context. This suggests that deep processing, which creates richer and more interconnected memory traces, facilitates better retrieval, even under different conditions. Studies using both experimental and correlational designs have consistently supported this interaction, showcasing that encoding depth influences both the storage and retrieval stages of memory.

Simplicity and Broad Applicability

Third, the theory’s elegance lies in its simplicity. The framework is easily understood and applied across diverse memory tasks and contexts. This broad applicability is a significant strength. Its relative simplicity, compared to more complex models, makes it a valuable heuristic for understanding basic memory processes, applicable from simple recognition tasks to complex learning scenarios. The widespread use of the LOP framework in educational settings and therapeutic interventions further demonstrates its practical utility.

Explanatory Power Regarding Memory Processes

LOP theory offers a compelling explanation for various aspects of memory.

Encoding

Encoding, the initial stage of memory, is deeply influenced by processing depth. Deep processing, involving semantic analysis and elaboration, creates richer and more durable memory traces compared to shallow processing, which focuses on superficial features. Craik and Lockhart (1972) proposed that the durability of a memory trace is a direct function of the depth of processing.

Storage

The storage phase benefits from deep processing because richly encoded information is more easily organized and integrated into existing knowledge structures. This enhanced organization improves the accessibility and retention of information. Studies demonstrating the superiority of elaborative rehearsal (a deep processing strategy) over rote rehearsal (a shallow processing strategy) support this aspect of the theory.

Retrieval

Retrieval success is contingent upon the match between the encoding context and the retrieval cues. Deep processing, creating detailed and interconnected memory traces, enhances the likelihood of successful retrieval by providing multiple retrieval pathways. The encoding specificity principle further underscores this point.

Forgetting

Forgetting, according to LOP, is more likely to occur with shallowly processed information. Weakly encoded memories are more vulnerable to decay and interference. The ease with which shallowly processed information is forgotten is consistent with the theory’s predictions.

Empirical Evidence Supporting the Theory

| Study/Evidence Source | Type of Evidence | Specific Finding | Strength of Support | Explanation |
|---|---|---|---|---|
| Craik & Tulving (1975) | Experimental | Semantic processing led to significantly better recall than visual or phonological processing. | Strong | Demonstrates the direct relationship between processing depth and memory performance. |
| Hyde & Jenkins (1973) | Experimental | Intentional learning did not always result in better recall than incidental learning, highlighting the importance of processing depth over intention. | Moderate | Shows that the depth of processing, not the intention to learn, is the crucial factor influencing memory. |
| Morris et al. (1977) | Experimental | Transfer-appropriate processing: retrieval tests that matched the encoding task yielded better performance. | Moderate | Shows that encoding and retrieval processes interact, which qualifies the simple prediction that deeper processing is always better. |
| Baddeley (1978) | Experimental | Different types of rehearsal (maintenance vs. elaborative) lead to different levels of memory performance. | Moderate | Highlights the importance of elaborative rehearsal (deep processing) for long-term retention. |
| Rogers et al. (1977) | Experimental | Judging words in relation to oneself (self-referential encoding) led to better recall than semantic, phonemic, or structural judgments. | Strong | Indicates the benefits of rich, elaborative processing during encoding. |

Potential Weaknesses and Limitations

Despite its strengths, LOP theory faces certain limitations.

Circular Definition of Depth

One criticism is the circularity in defining “depth of processing.” Deep processing is often operationally defined by its effect on memory performance, leading to a tautological argument: deep processing leads to better memory because better memory is evidence of deep processing. This makes it difficult to independently measure the depth of processing without relying on the outcome measure (memory performance).

Lack of Specificity Regarding Mechanisms


The theory lacks a detailed explanation of the underlying neurological and cognitive mechanisms responsible for the relationship between processing depth and memory. While it successfully predicts the relationship, it doesn’t fully elucidate *how* deeper processing leads to better memory at a neural level. This lack of mechanistic detail is a significant limitation.

Comparison with Alternative Theories

Comparison with the Encoding Specificity Principle

- LOP emphasizes the type of processing during encoding as the primary determinant of memory strength. Deep processing leads to better memory regardless of retrieval context (although encoding specificity can still influence retrieval).

- Encoding Specificity Principle emphasizes the match between encoding and retrieval contexts. Memory is best when the context at retrieval mirrors the context at encoding, regardless of the depth of processing during encoding.

- Similarity: Both theories acknowledge that encoding processes significantly influence memory.

- Difference: LOP focuses on the

-type* of processing, while the Encoding Specificity Principle focuses on the

-context* of processing. They are not mutually exclusive and can be viewed as complementary perspectives.

Practical Implications

LOP theory has significant practical implications for enhancing memory performance.

Educational Strategies

Educational strategies can be designed to encourage deeper processing. For example, using elaborative interrogation (asking “why” questions), generating self-explanations, and using mnemonic devices that promote semantic processing can significantly improve learning and retention.

Therapeutic Interventions

In therapeutic settings, techniques like cognitive rehabilitation for individuals with memory impairments can utilize LOP principles. Encouraging patients to engage in deeper processing of information during therapy sessions can enhance their ability to learn and retain new information.

Limitations of the Theory

While the Levels of Processing theory offers a valuable framework for understanding memory, it’s not without its limitations. Criticisms center around the difficulty in objectively measuring processing depth and the lack of a clear explanation for the precise mechanisms underlying the observed effects. The theory’s predictive power, while significant, is not absolute, and alternative perspectives offer compelling counterpoints.

The primary challenge lies in defining and measuring “depth” of processing. What constitutes “deep” processing is subjective and can vary significantly between individuals and tasks. For example, a semantic task might be considered deep for one person but shallow for another depending on their prior knowledge and engagement with the material. This ambiguity makes it difficult to create universally applicable experiments and to consistently replicate findings across studies.

Furthermore, the theory struggles to account for situations where shallow processing leads to surprisingly good memory performance, or where deep processing fails to produce the expected superior retention.

Difficulties in Operationalizing Depth

The lack of a precise operational definition of processing depth is a major limitation. While semantic processing is often considered “deeper” than phonological processing, the boundary between these levels is not clearly defined, leading to inconsistencies in research findings. Researchers often rely on subjective measures of processing depth, such as self-reports from participants, which can be unreliable and susceptible to bias.

Objective measures, while potentially more reliable, are not always available or easily implemented. This lack of a robust, objective measure of processing depth significantly impacts the theory’s ability to make precise predictions about memory performance. Consider a scenario where two individuals study a list of words; one uses elaborate imagery (deep processing) while the other repeats the words aloud (shallow processing).

While the theory predicts better recall for the individual using imagery, real-world performance can be influenced by factors like individual differences in memory strategies, attention span, and prior knowledge, making the prediction less reliable.

Alternative Theoretical Perspectives

Several alternative theories offer different perspectives on memory formation and retrieval. The Encoding Specificity Principle, for example, emphasizes the importance of the context in which information is encoded and retrieved. It argues that memory is best when the retrieval context closely matches the encoding context, regardless of the depth of processing. This contrasts with Levels of Processing, which primarily focuses on the type of processing rather than the context.

Another alternative, the Transfer-Appropriate Processing theory, suggests that memory performance depends on the match between the processes used during encoding and retrieval. This means that if the retrieval task requires phonological processing, then phonological encoding during study will lead to better performance, even if it’s considered “shallow” processing according to Levels of Processing.
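The contrast between the two accounts can be made concrete with a toy calculation. The sketch below is only an illustration: the base strengths and the match bonus are hypothetical numbers chosen to show the pattern, not values taken from any study, and the function name `predicted_performance` is made up for this example.

```python
# Toy model of transfer-appropriate processing.
# All numbers are hypothetical and chosen only to illustrate the idea.
BASE_STRENGTH = {"semantic": 0.6, "phonological": 0.4}  # depth-based component
MATCH_BONUS = 0.3  # assumed boost when the test uses the same process as study


def predicted_performance(encoding: str, retrieval: str) -> float:
    """Return a toy performance score for an encoding/retrieval pairing."""
    score = BASE_STRENGTH[encoding]
    if encoding == retrieval:
        # The retrieval task re-engages the process used at encoding.
        score += MATCH_BONUS
    return round(score, 2)


if __name__ == "__main__":
    for enc in ("semantic", "phonological"):
        for ret in ("semantic", "phonological"):
            print(f"study={enc:<12} test={ret:<12} score={predicted_performance(enc, ret)}")
```

With these assumed values, phonological study outscores semantic study on a phonological test, even though semantic encoding has the higher base strength. A pure depth account (base strength only) would not predict that reversal.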

Comparison with Other Memory Models

Compared to other memory models like the Modal Model of Memory (which distinguishes between sensory, short-term, and long-term memory stores) or the Working Memory Model (which focuses on the active manipulation of information), Levels of Processing offers a more nuanced view of encoding processes. However, it lacks the detailed structural components of these models. While the Modal Model describes the flow of information through different memory stores, Levels of Processing focuses primarily on the processes involved in encoding information into long-term storage.

Similarly, the Working Memory Model emphasizes the active processing and manipulation of information, while Levels of Processing primarily deals with the initial encoding stage. These different models are not necessarily mutually exclusive; they can be viewed as complementary perspectives on memory, each highlighting different aspects of the complex cognitive process.

The Encoding Specificity Principle

The Encoding Specificity Principle is a crucial concept that significantly expands our understanding of memory retrieval, enriching the Levels of Processing theory. It posits that memory is most effective when the context at retrieval matches the context at encoding. Essentially, the cues present during learning are intrinsically linked to the encoded information, and their presence during recall greatly facilitates access to that information. This principle highlights the importance of the environment and internal state during the learning process and its profound impact on subsequent memory performance.

The principle emphasizes that memory is not simply a matter of storing information; it’s a process deeply intertwined with the context in which that information was acquired. Contextual factors, encompassing both internal states (e.g., mood, physiological arousal) and external environments (e.g., location, sounds, smells), become integral parts of the memory trace itself.

Consequently, recreating these contextual elements during retrieval acts as powerful retrieval cues, enhancing the accessibility of the stored information. The stronger the match between encoding and retrieval contexts, the more successful the retrieval will be.

Contextual Factors Influence Retrieval

Contextual factors exert a powerful influence on retrieval success. Imagine learning a list of words in a quiet library. Recalling those words later in the same library will likely be easier than recalling them in a noisy café. The quiet library becomes a contextual cue, reactivating the neural pathways associated with the encoded word list. Similarly, if you learn something while feeling happy, you might recall it better when you are happy again.

This illustrates how internal states, like mood, also serve as contextual cues. These effects demonstrate that the context of learning is not merely background noise; it is an integral component of the memory trace, influencing its accessibility.

Context-Dependent Memory Supports the Theory

Numerous studies demonstrate context-dependent memory, providing strong support for the Encoding Specificity Principle and its implications for Levels of Processing. For instance, divers who learn a list of words underwater recall them better underwater than on land, while divers who learn the same list on land recall it better on land (Godden & Baddeley, 1975). This is because the learning environment, with its visual and sensory context, becomes intricately woven into the memory trace.

Similarly, research on state-dependent memory shows that individuals recall information better when their internal state (e.g., intoxicated or sober) during retrieval matches their state during encoding. These findings highlight how both external and internal contexts interact with the encoding process, shaping the accessibility of memories. This directly relates to Levels of Processing because deeper processing, which often involves elaborative encoding and contextual integration, tends to be more context-dependent, leading to stronger and more accessible memories.

A deeply processed memory, rich in contextual details, benefits significantly from contextual reinstatement at retrieval.

Distinctiveness and Memory

Distinctiveness plays a crucial role in how effectively we encode and retrieve information. Memories that stand out from the crowd are more readily accessible, while those blended into the background fade more quickly. This phenomenon is intricately linked to the depth of processing and the strategies we employ to learn and remember.

The Encoding Specificity Principle and Distinctiveness

The encoding specificity principle posits that retrieval is most effective when the context at retrieval matches the context at encoding. This directly relates to distinctiveness because a unique encoding context creates a distinctive memory trace. For example, learning a list of words underwater will lead to better recall underwater than on land. This context-dependent memory demonstrates how the distinctiveness of the learning environment enhances retrieval.

Similarly, state-dependent memory shows that emotional or physiological states at encoding influence retrieval; if you learn something while happy, recalling it will be easier when you are happy again. The unique internal state acts as a distinctive cue. The more distinctive the encoding context or internal state, the stronger the memory trace. This distinctiveness affects the long-term memory representation by creating a more robust and easily accessible memory trace, less susceptible to interference.

The Von Restorff Effect and Unusual Information

The Von Restorff effect, also known as the isolation effect, states that unusual or distinctive items within a series are better remembered than typical items. Imagine a list of words printed in black, with one word printed in red. The red word, due to its distinctiveness, will be remembered more easily. Research consistently demonstrates this effect: participants remember significantly more distinctive items than non-distinctive ones.

Marketers use this by employing visually striking ads or unique product features to make their products more memorable. Educators can leverage it by using unusual examples or teaching methods to make concepts stand out.

Distinctiveness and Depth of Processing

Distinctiveness significantly influences the depth of processing. Shallow processing, focusing on surface features like the sound of a word, leads to less distinctive memory traces. Deep processing, involving semantic encoding (understanding the meaning) or elaborative rehearsal (connecting new information to existing knowledge), creates more distinctive and memorable representations. Visual imagery, a powerful encoding technique, also enhances distinctiveness by creating a vivid and unique mental picture.

For example, remembering a historical date by associating it with a memorable visual image makes the memory more distinctive and easily retrievable. Elaborative rehearsal, by weaving new information into existing knowledge structures, creates more interconnected and distinctive memory traces.

Comparison of Memory Encoding Strategies

The following table compares different memory encoding strategies based on their impact on distinctiveness:

| Encoding Strategy | Level of Distinctiveness | Ease of Implementation | Long-Term Retention |
|---|---|---|---|
| Rote Rehearsal | Low | Easy | Low |
| Elaborative Rehearsal | High | Moderate | High |
| Visual Imagery | High | Moderate | High |
| Method of Loci | High | Difficult | High |

Over-reliance on distinctiveness for effective memory can have drawbacks. While distinctive memories are easily recalled, this can lead to interference from other distinctive memories, making it difficult to retrieve the specific information needed. Furthermore, an overemphasis on unusual details may result in inaccurate or biased recall, as we may prioritize the unusual aspects over the overall context.

The Role of Distinctiveness in Memory: An Essay

Distinctiveness is a cornerstone of effective memory encoding and retrieval. Memories are not passively stored; their accessibility hinges significantly on how unique or salient they are. The encoding specificity principle highlights the importance of contextual consistency between encoding and retrieval. Distinctive contexts, whether environmental (context-dependent memory) or internal (state-dependent memory), create stronger memory traces. This is further reinforced by the Von Restorff effect, demonstrating the superior memorability of unusual items.

Marketers and educators strategically utilize this effect to enhance memorability. The depth of processing also interacts with distinctiveness; deep processing, often involving semantic encoding and elaborative rehearsal, fosters more distinctive and durable memories. Visual imagery and other elaborative techniques further enhance this distinctiveness. However, relying solely on distinctiveness has limitations. Interference from other distinctive memories and the potential for biased recall highlight the need for a balanced approach to memory strategies.

Effective memory hinges on a combination of depth of processing and strategic encoding techniques that maximize both meaning and distinctiveness.

Multiple Choice Questions on Distinctiveness and Memory

- Which principle emphasizes the importance of matching encoding and retrieval contexts for optimal memory performance?
  - Von Restorff Effect
  - Encoding Specificity Principle
  - Levels of Processing Theory
  - Elaboration Principle

- The superior memorability of unusual items in a list is known as:
  - Context-dependent memory
  - State-dependent memory
  - Von Restorff Effect
  - Encoding Specificity Effect

- Which encoding strategy generally leads to the least distinctive memory trace?
  - Elaborative Rehearsal
  - Visual Imagery
  - Rote Rehearsal
  - Method of Loci

Imagery and Memory

Imagery, the creation of mental pictures, plays a significant role in enhancing memory encoding and retrieval. The vividness and detail of these images directly impact their memorability, influencing both short-term and long-term retention. This section will explore the role of imagery in memory, focusing on different types of imagery, their effectiveness, and the cognitive processes involved.

Visual Versus Auditory Imagery in Memory Encoding

Visual imagery, the creation of mental pictures, generally proves more effective than auditory imagery, the creation of mental sounds, in encoding information for both short-term and long-term memory recall. This is because visual information is often processed more deeply and elaborately, leading to stronger memory traces. Studies have shown a significant advantage for visual imagery in recall tasks.

For example, research by Paivio (1986) demonstrated that concrete words, which are easily visualized, are remembered better than abstract words, which are more difficult to image. Quantitative results vary with the specific task and methodology, with reported gains in recall accuracy of roughly 10% to 30% for visually encoded over auditorily encoded information.

This difference is more pronounced in long-term recall tasks, where the decay of auditory memory traces is more rapid.

The Cognitive Processes Involved in Creating Vivid Mental Images

Creating vivid mental images involves a complex interplay of cognitive processes. The process begins with the activation of relevant sensory information stored in long-term memory. This information is then combined and manipulated to create a coherent mental representation. The more sensory details included (sight, sound, smell, touch, taste), the richer and more memorable the image becomes. This is because multiple sensory modalities enhance the depth of processing and the number of retrieval cues associated with the memory.

Dual-coding theory (Paivio, 1986) supports this idea, suggesting that information is encoded in both verbal and imaginal formats, creating multiple pathways for retrieval. The richer the image, the more pathways are available, increasing the likelihood of successful recall.

Examples of Imagery in Deep Processing

Imagery can facilitate deep processing by linking new information to existing knowledge structures through meaningful associations. The following examples illustrate this:

| Example | Description | Strengths | Weaknesses |
|---|---|---|---|
| Method of Loci | Associating items with locations along a familiar route | Strong visual-spatial encoding, good for lists, creates a structured framework for memory | Requires prior knowledge of a familiar route, may be less effective for abstract concepts, can be time-consuming to create the initial association |
| Keyword Method | Linking a foreign word to a similar-sounding word and creating a visual image representing the meaning of both words | Useful for vocabulary learning, relatively simple to implement, effective for concrete vocabulary | May not be effective for abstract concepts, the effectiveness depends on the ability to find a suitable keyword, may be less effective for long lists |
| Body-Part Method | Associating items with different parts of the body | Easy to recall, readily available “location,” easy to learn and implement | Limited capacity, may be less effective for long lists, may be difficult to use for abstract concepts |

Comparing the Method of Loci and the Peg-Word System

The method of loci and the peg-word system are both mnemonic techniques that utilize imagery, but they differ in their approach:

- Method of Loci: Associates items with locations along a familiar route. Its strength lies in its visual-spatial organization, making it effective for lists of items. However, it requires a pre-existing knowledge of a familiar route.

- Peg-Word System: Uses a pre-memorized list of rhyming words (e.g., one-bun, two-shoe) as “pegs” to hang new information onto. It offers a more structured framework than the method of loci, but requires memorizing the peg words beforehand.
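The bookkeeping behind the peg-word system can be sketched in a few lines of code. This is a minimal illustration, not a formal model of the technique: "one-bun" and "two-shoe" come from the description above, while the remaining pegs follow the commonly taught rhyme and are an assumption here, as are the function names.

```python
# Pre-memorized rhyming pegs. Pegs 1 and 2 appear in the text above;
# pegs 3 to 5 are the commonly taught rhymes and are assumed for this sketch.
PEGS = {1: "bun", 2: "shoe", 3: "tree", 4: "door", 5: "hive"}


def peg_word_pairs(items):
    """Pair each item with the peg word for its 1-based list position."""
    if len(items) > len(PEGS):
        raise ValueError("more items than memorized pegs")
    return [(pos, PEGS[pos], item) for pos, item in enumerate(items, start=1)]


def recall_item(pairs, position):
    """Look up an item by position, as the peg rhyme would cue it."""
    for pos, _peg, item in pairs:
        if pos == position:
            return item
    raise KeyError(position)


if __name__ == "__main__":
    shopping = ["milk", "eggs", "bread"]
    for pos, peg, item in peg_word_pairs(shopping):
        # The learner would form a vivid image linking the peg and the item.
        print(f"{pos}: imagine '{item}' together with a {peg}")
```

The code only tracks which peg goes with which item. The memory benefit itself comes from the interactive image the learner forms for each pair, which the sketch cannot capture.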

Impact of Individual Differences on Imagery-Based Memory Techniques

Individual differences, particularly learning styles, significantly impact the effectiveness of imagery-based memory techniques. Visual learners will generally benefit more from visual imagery techniques, while auditory learners might find auditory imagery or verbal strategies more effective. For individuals who don’t benefit from visual imagery, alternative techniques such as the peg-word system (which relies on auditory and verbal associations) or elaborative rehearsal (focusing on meaning and connections) can be used.

Limitations of Using Imagery for Memory Enhancement

While imagery can significantly enhance memory, it’s not a panacea. Interference from other memories, emotional factors (e.g., highly emotional events can distort memories), and individual differences in memory capacity can all limit its effectiveness. For example, trying to create a vivid image of a complex abstract concept might lead to a less effective representation than a simple concrete one.

Similarly, highly emotional events can lead to fragmented or inaccurate memories, even if vividly imaged.

Real-World Examples

Levels of processing theory’s impact on memory is vividly illustrated in everyday life. Whether we process information superficially or deeply directly affects our ability to recall it later. The deeper the processing, the stronger the memory trace.

The theory’s practical applications are numerous, ranging from simple memorization tasks to complex learning strategies. Examining real-world scenarios highlights the powerful influence of processing depth on memory retention.

Deep Processing Leading to Better Recall

Deep processing engages with the meaning and significance of information, creating richer and more durable memories. Imagine a student studying for a history exam. Instead of simply rereading the textbook (shallow processing), the student actively connects historical events to current events, analyzes the motivations of historical figures, and debates the impact of decisions made. This active engagement with the material, requiring them to think critically and relate it to existing knowledge, results in a much deeper understanding and improved recall during the exam.

Similarly, a person learning a new language who actively uses the language in conversations and real-life situations will retain vocabulary and grammar far better than someone who merely memorizes word lists. The active use forces deeper processing, forging stronger memory connections.

Shallow Processing Resulting in Poor Recall

Conversely, shallow processing, focusing on superficial features of information, results in weaker, more fleeting memories. Consider someone memorizing a phone number by repeating it several times without understanding its context or associating it with anything meaningful. This rote learning represents shallow processing. They might recall the number temporarily, but it’s easily forgotten unless actively reinforced. Another example is cramming for an exam by simply highlighting text without truly understanding the concepts.

This superficial approach results in poor retention and often leads to forgetting the information quickly after the exam. The lack of meaningful engagement prevents the creation of strong memory traces.

Future Directions of Research

Levels of processing theory, while a robust framework for understanding memory, still presents opportunities for expansion and refinement. Further research is needed to solidify its explanatory power and address certain limitations, ultimately leading to a more comprehensive understanding of human memory. This section outlines key areas requiring further investigation, potential theoretical extensions, and promising avenues for exploring the underlying neural mechanisms.

Areas Needing Further Research

Identifying specific knowledge gaps and formulating targeted research questions is crucial for advancing the field. Addressing these gaps will refine our understanding of the theory’s scope and limitations.

- Specific Knowledge Gaps:
  - The precise neural correlates of deep versus shallow processing remain elusive: Clarifying these neural underpinnings is essential for a more complete mechanistic understanding of the theory.
  - The interaction between different types of processing (e.g., semantic, visual, auditory) is not fully understood: Investigating these interactions will reveal how various processing strategies combine to influence memory encoding.
  - The influence of individual differences (e.g., cognitive abilities, personality traits) on processing depth is under-researched: Understanding these individual variations will enhance the theory’s predictive power in real-world settings.

- Unanswered Questions:
  - What specific neurotransmitter systems are most critical in mediating the effects of processing depth on memory consolidation?
  - How do different types of elaborative rehearsal (e.g., self-referential, relational) differentially impact the strength and longevity of memory traces?
  - To what extent do individual differences in working memory capacity moderate the relationship between processing depth and memory performance?
  - Can we identify specific brain regions whose activity patterns reliably predict the depth of processing engaged during encoding?
  - How does the level of processing interact with the type of memory task (e.g., recognition, recall) to influence performance?

Methodology for Addressing Research Questions

Choosing the appropriate research methodology is paramount for obtaining reliable and valid results. The following table outlines suitable methodologies for each research question.

| Research Question | Methodology | Justification |
|---|---|---|
| What specific neurotransmitter systems are most critical in mediating the effects of processing depth on memory consolidation? | Pharmacological manipulation combined with behavioral measures | This allows for direct investigation of the causal role of specific neurotransmitter systems. |
| How do different types of elaborative rehearsal differentially impact the strength and longevity of memory traces? | Experimental design with multiple conditions manipulating type of rehearsal | This enables a controlled comparison of different rehearsal strategies on memory performance over time. |
| To what extent do individual differences in working memory capacity moderate the relationship between processing depth and memory performance? | Correlational study with individual difference measures | This allows for examining the relationship between working memory capacity and memory performance, controlling for processing depth. |
| Can we identify specific brain regions whose activity patterns reliably predict the depth of processing engaged during encoding? | fMRI study during encoding tasks | fMRI provides a direct measure of brain activity, enabling the identification of brain regions associated with processing depth. |
| How does the level of processing interact with the type of memory task (e.g., recognition, recall) to influence performance? | Experimental design with multiple memory tasks | This allows for testing the generalizability of the levels of processing effect across different memory paradigms. |

Potential Future Developments and Refinements

Extending the current theoretical framework and addressing its limitations will enhance its explanatory power and predictive accuracy.

- Theoretical Extensions:
  - Incorporating the role of attention: Extending the theory to explicitly include attentional mechanisms would improve its ability to account for situations where deep processing fails to lead to enhanced memory due to insufficient attentional resources. This would broaden the theory’s scope to encompass cases where cognitive load or distraction impacts memory encoding. For example, a student might deeply process lecture material, but if their attention is frequently diverted by notifications, their memory for the material will suffer, a phenomenon the current theory does not fully explain.
  - Integrating embodied cognition: Incorporating principles of embodied cognition would enrich the theory by considering how our bodily interactions with the environment shape memory encoding. This would increase its predictive power by acknowledging that memories are not merely abstract representations but are grounded in our sensorimotor experiences. For instance, learning a dance routine involves multiple sensory and motor processes, which likely lead to stronger memory traces than simply reading about the steps.

- Model Limitations and Modifications:
  - Oversimplification of processing depth: The current model might oversimplify the concept of “depth.” A more nuanced approach might involve a multidimensional representation of processing depth, incorporating factors such as the richness of semantic connections, the degree of emotional engagement, and the extent of self-referential processing. This would enhance the model’s accuracy by recognizing that “deep” processing can manifest in various ways.
  - Lack of precise quantification: The theory lacks a precise method for quantifying processing depth. Developing a standardized metric would allow for more rigorous testing and comparisons across different studies. This could involve creating scales to measure the different dimensions of processing depth, ensuring greater consistency and comparability across research.

Comparative Analysis with Alternative Theories

Comparing levels of processing theory with alternative theories helps identify its strengths and weaknesses.

- Levels of Processing vs. Encoding Specificity Principle:

- Levels of Processing: Focuses on the type of processing during encoding, suggesting that deeper processing leads to better memory. Strength: Provides a simple and intuitive framework for understanding memory. Weakness: Can be overly simplistic, neglecting the role of retrieval cues and context.

- Encoding Specificity Principle: Emphasizes the importance of retrieval cues matching the encoding context. Strength: Accounts for the influence of context on memory retrieval. Weakness: Doesn’t fully explain the differential effects of different types of encoding processes.

Investigating Underlying Mechanisms

Identifying the neural and cognitive mechanisms behind depth-of-processing effects would make the theory easier to test and would strengthen its explanatory power.

- Experimental Designs:

- Design 1: Event-Related Potentials (ERPs) during encoding: Independent variable: Type of processing (shallow vs. deep). Dependent variable: Amplitude and latency of specific ERP components (e.g., N400, late positive component). Expected outcome: Deeper processing should elicit larger and/or earlier ERP components reflecting greater neural activation. Potential confounding factors: Individual differences in cognitive abilities, task demands.

- Design 2: fMRI study during encoding and retrieval: Independent variable: Type of processing (shallow vs. deep). Dependent variable: Brain activation patterns during encoding and retrieval. Expected outcome: Deeper processing should lead to increased activation in brain regions associated with semantic processing (e.g., prefrontal cortex) during encoding and greater activation in regions associated with memory retrieval (e.g., hippocampus) during retrieval. Potential confounding factors: Task difficulty, attentional fluctuations.

- Data Analysis Techniques:

- ANOVA: To analyze differences in behavioral measures (e.g., memory performance) across different processing conditions.

- Multivariate analyses (e.g., MANOVA, PCA): To analyze patterns of brain activity (e.g., fMRI data) and identify brain regions associated with different processing levels.
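The ANOVA step listed above can be sketched in a few lines. The example computes a one-way between-subjects F statistic by hand for three encoding conditions. The function name `one_way_anova_f` and the recall scores are invented for illustration and are not data from any study; a real analysis would normally use a statistics package and report a p-value as well.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)

    # Variability of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variability of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical recall scores (words recalled out of 20) per encoding condition.
recall = {
    "structural": [4, 5, 3, 6, 4, 5],
    "phonemic": [7, 8, 6, 9, 7, 8],
    "semantic": [12, 13, 11, 14, 12, 13],
}

f_stat, df_b, df_w = one_way_anova_f(list(recall.values()))
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F value indicates that the condition means differ by more than the within-condition variability would suggest, which is the pattern the theory predicts when processing depth is manipulated.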

- Computational Modeling:

- An agent-based model could simulate the interaction between different processing units (e.g., sensory, semantic, emotional) to investigate how their combined activity gives rise to different levels of memory encoding. Key parameters would include the strength of connections between processing units, the amount of information processed by each unit, and the influence of attentional resources. The model output would be a measure of memory strength as a function of the simulated processing depth.

This would provide insights into the dynamics of memory encoding and the factors that contribute to differences in memory performance.
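A heavily simplified sketch of such a model is shown below. It is not a full agent-based simulation: it only combines the three example units with an attention parameter to produce a single memory-strength value. The weighted-sum rule, the weights, the activation levels, and all names (`ProcessingUnit`, `memory_strength`, `make_units`) are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProcessingUnit:
    name: str
    activation: float  # how strongly the encoding task engages this unit (0 to 1)
    weight: float      # assumed contribution of this unit to trace strength


def memory_strength(units, attention):
    """Toy rule: strength = attention * weighted sum of unit activations."""
    if not 0.0 <= attention <= 1.0:
        raise ValueError("attention must lie in [0, 1]")
    return attention * sum(u.activation * u.weight for u in units)


def make_units(sensory, semantic, emotional):
    # The weights are illustrative assumptions, not fitted parameters.
    return [
        ProcessingUnit("sensory", sensory, 0.2),
        ProcessingUnit("semantic", semantic, 0.5),
        ProcessingUnit("emotional", emotional, 0.3),
    ]


shallow_task = make_units(sensory=0.9, semantic=0.1, emotional=0.0)
deep_task = make_units(sensory=0.3, semantic=0.9, emotional=0.4)

shallow_full = memory_strength(shallow_task, attention=1.0)
deep_full = memory_strength(deep_task, attention=1.0)
deep_distracted = memory_strength(deep_task, attention=0.3)

print(shallow_full, deep_full, deep_distracted)
```

With these assumed values, the deep task yields a stronger trace than the shallow task under full attention, but a weaker one when attention is heavily reduced. That is the kind of interaction between depth and attentional resources such a model would be built to explore.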

Implications for Cognitive Psychology

Levels of Processing (LOP) theory, while seemingly focused on memory, profoundly impacts our understanding of broader cognitive processes. Its implications ripple through various domains of cognitive psychology, challenging existing paradigms and inspiring new avenues of research and application. This section explores these far-reaching effects, examining its influence on models of attention and executive function, its potential for therapeutic interventions, and its compatibility with other prominent cognitive theories.

Furthermore, we will delve into its contributions to our understanding of human memory, comparing it to alternative models and analyzing its implications for different memory systems.

Broader Implications for Cognitive Psychology

LOP theory significantly influences our understanding of attention and executive function. Shallow processing, characterized by superficial engagement with information, demands fewer cognitive resources, potentially freeing up attentional capacity for other tasks. Conversely, deep processing, requiring more elaborate encoding, demands greater attentional control and executive function resources. This suggests a dynamic interplay between processing depth and cognitive control mechanisms.

Studies reporting improved performance on attentional tasks after deep encoding of relevant stimuli are consistent with this interaction. On this view, attention and executive functions are not separate, independent modules but are closely tied to processing depth. It also raises the possibility, still to be tested, that training deeper processing could indirectly improve attentional control and executive functioning.

Potential for Cognitive Training Interventions

LOP theory offers a framework for developing cognitive training interventions. Techniques aimed at promoting deeper processing, such as elaborative rehearsal, self-referential encoding, and imagery, could enhance memory and potentially improve cognitive performance in various domains. For instance, educational interventions could incorporate strategies that encourage students to connect new information to their prior knowledge and engage in active recall, fostering deeper encoding and better retention.

However, limitations exist. The effectiveness of such interventions may depend on individual differences in cognitive abilities and motivation. Furthermore, generalizing improvements from training settings to real-world situations remains a challenge.

Compatibility with Other Cognitive Theories

LOP theory exhibits both convergence and divergence with other prominent theories. Its emphasis on the constructive nature of memory aligns with connectionist models, which posit that memory is represented by patterns of activation across interconnected neural networks. Deep processing, involving rich semantic connections, could be viewed as strengthening these connections, leading to more robust and retrievable memories. However, LOP theory differs from purely connectionist accounts by emphasizing the role of conscious processing strategies in determining encoding depth.

Similarly, embodied cognition emphasizes the role of sensory and motor experiences in shaping cognition. LOP theory, while not explicitly addressing embodiment, acknowledges the importance of sensory information in encoding, particularly in the case of imagery-based deep processing.

Contribution to Understanding Human Memory

LOP theory significantly advances our understanding of encoding, storage, and retrieval processes in human memory. It posits that the depth of processing during encoding directly influences the strength of the memory trace, impacting both storage and retrieval. Numerous studies have found that memory performance improves as processing depth increases. For example, experiments comparing words processed semantically (deep) with words processed phonemically or structurally (shallower) consistently show better retention of the semantically processed items.

This supports the theory’s claim that deeper processing leads to more durable and accessible memories.

Comparison with Alternative Memory Models

LOP theory contrasts with the multi-store model, which focuses on distinct memory stores (sensory, short-term, long-term). While LOP theory doesn’t explicitly deny the existence of these stores, it emphasizes the processing operations within these stores as the primary determinant of memory strength. Similarly, it differs from the working memory model, which emphasizes the active manipulation of information in a limited-capacity system.

While LOP theory acknowledges the role of working memory in processing information, it focuses more broadly on the qualitative aspects of processing rather than the capacity limitations of the system. However, both models acknowledge the importance of active processing for successful memory encoding.

Implications for Different Memory Types

LOP theory’s implications extend to various memory types. For episodic memory (memory for personal events), deep processing, involving semantic elaboration and self-referential encoding, leads to stronger and more vivid memories. For semantic memory (general knowledge), deep processing, through making connections with existing knowledge structures, enhances understanding and retention. Procedural memory (memory for skills), while less directly addressed by LOP theory, could benefit from deep processing by focusing on the underlying principles and strategies involved in skill acquisition.

Summary of Key Findings and Their Significance

The following table summarizes key findings of the Levels of Processing theory and their implications:

| Key Finding | Significance | Supporting Evidence |
|---|---|---|
| Deeper processing leads to better memory performance. | This finding underscores the importance of active engagement with information for effective learning and retention. It has practical implications for educational strategies and cognitive training interventions. | Craik & Lockhart (1972); Craik & Tulving (1975); numerous subsequent studies demonstrating the superior recall of deeply processed information. |
| The type of processing, not just the amount of rehearsal, determines memory strength. | This challenges the simple rehearsal-based models of memory and highlights the crucial role of elaborative processing in encoding. It emphasizes the qualitative aspects of processing over mere repetition. | Craik & Lockhart (1972) argued that maintenance rehearsal alone does little for long-term retention; Craik & Tulving (1975) found that semantic judgments produced better retention than structural or phonemic judgments. |
| Deep processing engages more cognitive resources, impacting attention and executive function. | This suggests an interplay between memory encoding and other cognitive processes. It opens avenues for research on the interaction between memory, attention, and executive functions. | Studies reporting improved performance on attentional tasks following deep encoding of relevant stimuli are consistent with this interaction. |

Potential Limitations and Future Research

While influential, LOP theory faces limitations. The concept of “depth” itself remains somewhat vague and difficult to operationalize precisely. Furthermore, the theory struggles to fully explain the role of context in memory retrieval, a limitation addressed by the Encoding Specificity Principle. Future research could focus on refining the operational definition of processing depth, exploring the neural mechanisms underlying different levels of processing, and investigating the interaction between LOP and other cognitive processes more thoroughly.

Ethical Implications

Applying LOP theory in real-world contexts raises ethical considerations. In education, promoting deep processing is beneficial, but pressuring students to engage in overly demanding cognitive tasks could lead to stress and burnout. In forensic psychology, understanding how processing depth influences eyewitness testimony is crucial for ensuring accurate and fair legal proceedings. Careful consideration of these ethical implications is essential when applying the theory in practice.

Question & Answer Hub

What are some real-world applications of the levels of processing theory?

The theory informs effective teaching methods (e.g., encouraging students to connect new information to prior knowledge), marketing strategies (e.g., creating emotionally resonant advertisements), and therapeutic interventions for memory impairments.

How does the levels of processing theory relate to the encoding specificity principle?

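The two address different parts of the memory process. Levels of processing concerns encoding: it proposes that deeper, more meaningful processing creates stronger memory traces. Encoding specificity concerns the relationship between encoding and retrieval: it holds that recall is best when the cues available at retrieval match the context in which the information was encoded. Encoding specificity therefore covers the role of retrieval context, which levels of processing largely leaves out.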

Are there any limitations to the levels of processing theory?

Yes. Critics argue that the concept of “depth” is difficult to define and measure objectively. The theory also doesn’t fully account for the influence of factors such as attention, retrieval context, and individual differences among learners.

How can I apply levels of processing theory to improve my own learning?

Use active recall techniques, connect new information to existing knowledge, elaborate on concepts by generating examples or analogies, and teach the material to someone else.