Is computability theory died? Ha! Not a chance. While some might think the field is as dusty as a forgotten textbook, the reality is far more vibrant and, dare we say, hilarious. From the seemingly mundane task of optimizing database queries to the mind-bending complexities of quantum computing, computability theory continues to shape our digital world in unexpected and often amusing ways.

We’ll delve into the surprising relevance of this seemingly antiquated field, exploring its unexpected applications and enduring puzzles. Prepare for a journey that’s both intellectually stimulating and strangely funny.

This exploration will cover the practical applications of computability theory in modern computing, examining its influence on database systems, network protocols, and cryptography. We’ll also consider its role in cutting-edge technologies like cloud computing, artificial intelligence, and, of course, the wonderfully weird world of quantum computing. We’ll even tackle some of the unresolved problems and limitations of current models, spiced with real-world examples and a touch of playful speculation.

Get ready for a laugh, a learning experience, and maybe even a slight existential crisis.

The Relevance of Computability Theory Today

Computability theory, despite its foundational nature, remains profoundly relevant in modern computing. Far from being a relic of the past, its core principles continue to shape the design, analysis, and limitations of today’s most advanced technologies. Understanding computability’s implications is crucial for developing efficient, reliable, and secure systems.

Computability Theory’s Applications in Algorithm Design and Analysis

Computability theory provides a rigorous framework for understanding the limits of what can be computed and the resources required to perform computations. This understanding is critical for designing efficient algorithms and analyzing their performance in various application domains.

Computability Theory in Database Systems

Query optimization in database systems heavily relies on computability theory. The complexity of queries, involving joins, selections, and projections, can be analyzed using concepts like NP-completeness. For instance, determining the optimal execution plan for a complex query often involves exploring a space of possible plans, a problem that is inherently computationally expensive. Algorithms like dynamic programming are used to find near-optimal plans within reasonable time constraints.

The design of database indices also benefits from computability considerations; the choice of index structure impacts the efficiency of query processing.

Computability Theory in Network Protocols

The reliability and efficiency of network communication protocols like TCP/IP are deeply intertwined with computability. TCP’s congestion control algorithms, for example, are designed to adapt to network conditions and avoid overwhelming the network. These algorithms often involve intricate trade-offs between speed and reliability, with computability theory providing a framework for analyzing their performance and stability. The design of routing protocols also requires careful consideration of computability; finding the shortest path in a network is a well-studied problem in graph theory, a field closely related to computability.

Computability Theory in Cryptography

Cryptography relies heavily on the computational complexity of problems. The security of algorithms like RSA depends on the difficulty of factoring large numbers. Elliptic curve cryptography relies on the difficulty of the discrete logarithm problem in elliptic curve groups. Computability theory provides the mathematical foundation for proving the security of these algorithms, establishing lower bounds on the computational resources required to break them.

The analysis of side-channel attacks, which exploit information leaked during computation, also involves concepts from computability theory.

Computability Theory Underpinning Technological Advancements

Several contemporary technological advancements are directly influenced by the principles of computability theory.

Computability Theory and Cloud Computing

Cloud computing platforms, despite their massive scale and complexity, are ultimately based on Turing-complete machines. This means they can, in principle, compute anything a classical computer can compute. However, understanding the limits of computability helps in designing efficient resource allocation strategies and optimizing performance within the constraints of distributed systems.

Computability Theory and Artificial Intelligence

The halting problem, a fundamental result in computability theory, highlights the inherent limitations of AI. It states that there is no general algorithm that can determine whether an arbitrary program will halt or run forever. This has significant implications for AI, as many AI tasks involve searching for solutions in potentially infinite spaces. While AI algorithms can often find practical solutions, the halting problem underscores the limitations of guaranteeing completeness or termination for all possible inputs.

For example, in the domain of automated theorem proving, it’s impossible to create a system that can definitively prove or disprove all mathematical statements.

Computability Theory and Quantum Computing

Quantum computing introduces a new paradigm of computation, challenging and extending classical computability theory. While a quantum computer is still a Turing machine, it operates according to the principles of quantum mechanics, allowing it to potentially solve certain problems exponentially faster than classical computers. This leads to a new field of quantum computability, exploring the computational power of quantum systems and defining new complexity classes.

The relationship between classical and quantum computability is a vibrant area of ongoing research.

Real-World Scenarios Illustrating Computability Theory

Scenario 1: Fraud Detection in Finance

Many financial institutions utilize sophisticated algorithms to detect fraudulent transactions. These algorithms often employ machine learning techniques trained on historical data. However, the design and evaluation of these algorithms rely on computability concepts. For example, the choice of algorithm and its complexity directly impact the speed and accuracy of fraud detection. The trade-off between false positives (flagging legitimate transactions as fraudulent) and false negatives (missing actual fraudulent transactions) is a key consideration, often analyzed using concepts from statistical learning theory, a field closely related to computability.

Scenario 2: The Limitations of Automated Medical Diagnosis

Developing a system that can perfectly diagnose all medical conditions is an undecidable problem. The complexity of human biology and the vast number of possible diseases make it impossible to create an algorithm that guarantees a correct diagnosis in all cases. Medical AI systems therefore rely on probabilistic models and heuristics, acknowledging the inherent limitations imposed by computability.

Researchers focus on improving accuracy and robustness within these limitations, rather than striving for perfect, complete diagnosis.

Scenario 3: Recent Advancements in Program Verification

Recent research in program verification utilizes techniques from computability theory to formally prove the correctness of software. These techniques often involve model checking, which systematically explores the state space of a program to verify its behavior against a given specification. Advances in model checking algorithms and the development of more powerful automated theorem provers are pushing the boundaries of what can be formally verified, increasing the reliability and security of critical software systems.

Summary of Findings

| Application Area | Specific Algorithm/Concept | Real-World Example | Impact/Outcome |

|---|---|---|---|

| Database Systems | Dynamic Programming for Query Optimization | Optimizing complex SQL queries in a large e-commerce database | Faster query execution, improved user experience |

| Network Protocols | Congestion Control Algorithms (TCP) | Efficient and reliable data transfer over the internet | Improved network performance, reduced latency |

| Cryptography | RSA Algorithm | Secure online transactions (e.g., online banking) | Protection of sensitive data from unauthorized access |

| Cloud Computing | Turing Completeness | Ability to run diverse applications on cloud platforms | Scalability, flexibility, and cost-effectiveness |

| Artificial Intelligence | Halting Problem | Limitations in guaranteeing complete solutions for AI tasks | Focus on heuristic approaches, probabilistic models |

| Quantum Computing | Quantum Algorithms (e.g., Shor’s algorithm) | Potential for faster solutions to specific computational problems | Advancements in cryptography, drug discovery, materials science |

Unresolved Problems in Computability Theory

Computability theory, while providing a foundational understanding of what problems are solvable by computers, leaves many significant questions unanswered. These open problems continue to drive research, pushing the boundaries of our understanding of computation and its limitations. Addressing these challenges is crucial for advancing both theoretical computer science and its practical applications.Despite decades of research, several fundamental questions remain elusive.

The limitations of current models, particularly in dealing with non-deterministic or probabilistic computation, highlight the need for more sophisticated frameworks. This section explores some key unresolved problems and their implications.

The P versus NP Problem

The P versus NP problem is arguably the most famous unsolved problem in computer science. It asks whether every problem whose solution can be quickly verified (NP) can also be solved quickly (P). This seemingly simple question has profound implications for cryptography, optimization, and numerous other fields. If P=NP, many currently intractable problems, such as factoring large numbers (crucial for modern encryption), would become efficiently solvable.

Conversely, if P≠NP, it would confirm the inherent difficulty of a vast class of problems, impacting the design of secure systems and algorithms. The implications are far-reaching, impacting fields from logistics to drug discovery. Significant effort has been invested in resolving this question, but a definitive answer remains elusive.

The Complexity of Diophantine Equations

Hilbert’s Tenth Problem, concerning the solvability of Diophantine equations, was famously shown to be undecidable. This means there is no algorithm that can determine, for all Diophantine equations, whether they have integer solutions. However, many open questions remain regarding the complexity of determining solvability for restricted classes of Diophantine equations. Understanding the precise computational boundaries of these restricted cases is a major area of ongoing research.

For example, determining the complexity of solving Diophantine equations with a bounded number of variables is an active research area.

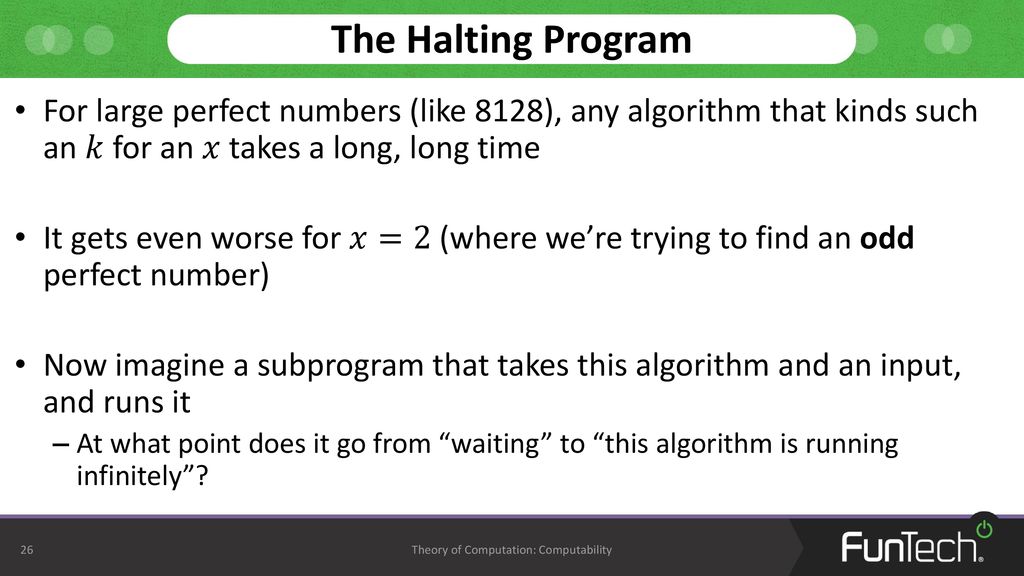

The Halting Problem and its Variations

The Halting Problem, which proves the impossibility of creating a general algorithm to determine whether any given program will halt or run forever, is a cornerstone of computability theory. However, variations of this problem, focusing on specific classes of programs or computational models, continue to pose significant challenges. For example, determining the halting problem’s complexity for restricted programming languages or for programs operating under specific resource constraints remains an open area of investigation.

These refined questions can offer valuable insights into the limits of computation within practical contexts.

Oracle Machines and the Limits of Relative Computability

Oracle machines, theoretical computers with access to an “oracle” that can instantly solve a specific problem, provide a framework for exploring relative computability. Many open questions exist regarding the power of different oracles and their impact on the complexity of various problems. Understanding the hierarchy of oracle-based computation can shed light on the fundamental limits of what is computable, even with access to powerful external resources.

This is especially relevant in the context of modern distributed computing and cloud-based solutions, where access to vast computational resources raises questions about the limits of problem-solving capability.

Computability Theory and Quantum Computing

Computability theory, traditionally focused on classical computation models like Turing machines, has seen a significant shift with the advent of quantum computing. This new paradigm introduces fundamentally different computational capabilities, challenging established theoretical boundaries and opening avenues for exploring previously intractable problems. The comparison between classical and quantum computability reveals a fascinating interplay between theoretical limitations and the potential for exponential speedups in specific problem domains.Classical computability rests on the principles of Boolean logic, where bits represent 0 or 1.

Quantum computability, however, leverages the principles of quantum mechanics, utilizing qubits that can exist in a superposition of 0 and 1 simultaneously. This allows for parallel computation on a scale unimaginable in classical systems. While Turing machines provide a robust framework for understanding the limits of classical computation, quantum computers offer a vastly different computational model, potentially circumventing some of those limitations.

Classical versus Quantum Computability

Classical computability is defined by the Church-Turing thesis, which states that any effectively calculable function can be computed by a Turing machine. This thesis provides a solid foundation for understanding the limits of computation. However, it does not address the potential computational power of quantum mechanics. Quantum computability, in contrast, explores the computational capabilities of quantum systems. It utilizes concepts like superposition and entanglement to perform computations in a fundamentally different way.

A key difference lies in the ability of quantum computers to explore multiple computational paths simultaneously, offering the potential for exponential speedups for certain algorithms. For example, Shor’s algorithm, designed for quantum computers, can factor large numbers exponentially faster than the best known classical algorithms, posing a significant threat to current cryptographic systems.

Impact of Quantum Computing on Computability Theory

The emergence of quantum computing significantly impacts computability theory by challenging existing paradigms and introducing new research avenues. It necessitates a re-evaluation of complexity classes and the development of new theoretical frameworks to understand the power and limitations of quantum computation. The possibility of solving problems considered intractable by classical computers, such as factoring large numbers or simulating quantum systems, fundamentally alters our understanding of computational complexity.

This leads to the exploration of new complexity classes, such as BQP (Bounded-error Quantum Polynomial time), which describes problems solvable efficiently by a quantum computer with bounded error probability. The investigation of the relationship between BQP and other complexity classes like P and NP remains a central focus of research.

Hypothetical Scenario: Quantum Simulation of Protein Folding

Consider the problem of protein folding, a crucial challenge in biology and drug discovery. Predicting the three-dimensional structure of a protein from its amino acid sequence is computationally expensive, even for relatively small proteins. Classical algorithms struggle with the vast number of possible conformations. A hypothetical scenario involves a quantum computer using a quantum algorithm designed to simulate the quantum mechanical interactions within the protein.

The superposition of qubits allows the quantum computer to explore multiple protein conformations simultaneously. By leveraging quantum entanglement, the algorithm efficiently calculates the energy of each conformation and identifies the lowest energy state, representing the most likely folded structure. This significantly reduces the computational time compared to classical approaches, accelerating drug discovery and furthering our understanding of biological processes.

This scenario highlights the potential of quantum computing to solve problems that are intractable for classical computers, thus expanding the boundaries of computability theory.

The Evolution of Computability Models

Computability theory has undergone a remarkable evolution, progressing from foundational theoretical models to encompass the complexities of modern computational paradigms. This evolution reflects not only advancements in our understanding of computation but also the expanding scope of problems we seek to solve using computers. This section details key milestones in the development of computability models, compares their capabilities, and examines the broader impact of this evolving understanding on computer science.

Timeline of Key Computability Models

The development of computability models has been a gradual process, building upon earlier work and responding to new challenges. This timeline highlights some of the most influential milestones.

- 1936: Turing Machine

-Alan Turing introduced the Turing machine, a theoretical model of computation that can simulate any algorithm. This provided a formal definition of what is computable. - 1936: Lambda Calculus

-Alonzo Church developed the lambda calculus, an alternative formal system for expressing computation. It was later proven to be equivalent in computational power to the Turing machine. - 1940s-1950s: Finite Automata

– The development of finite automata, simpler models than Turing machines, provided crucial insights into the limits of computation and laid the groundwork for the design of early computers. - 1950s-1960s: Pushdown Automata

– Pushdown automata, extending finite automata with a stack, were developed to model context-free grammars and are fundamental in compiler design. - 1960s-1970s: Cellular Automata

-John von Neumann and others explored cellular automata, demonstrating computation could emerge from simple local interactions in a grid-like structure. - 1970s-1980s: Complexity Theory

-The rise of complexity theory provided a framework for classifying problems based on their computational resource requirements (time and space). - 1980s-Present: Probabilistic Computation

-Models incorporating randomness, such as probabilistic Turing machines, were developed, finding applications in areas like randomized algorithms and cryptography. - 1980s-Present: Parallel Computing

– The development of parallel computing models explored ways to perform computations simultaneously, leading to significant increases in processing speed. - 1990s-Present: Quantum Computing

– Quantum computing emerged, leveraging quantum phenomena like superposition and entanglement to potentially solve problems intractable for classical computers. - Present: DNA Computing

– Research into DNA computing explores the use of DNA molecules for computation, offering potential for massive parallelism and data storage.

Comparative Analysis of Computational Models

The following table compares several computational models based on their computational power, strengths, weaknesses, and applications.

| Model | Computational Power | Strengths | Weaknesses | Applications |

|---|---|---|---|---|

| Turing Machine | Most powerful; can compute any computable function | Theoretically complete, serves as a universal model | Highly abstract, impractical for real-world implementation | Theoretical analysis of computation |

| Finite Automata | Can recognize regular languages | Simple, easy to implement, efficient for simple pattern matching | Limited computational power; cannot handle complex grammars | Lexical analysis in compilers, text processing |

| Pushdown Automata | Can recognize context-free languages | More powerful than finite automata; handles nested structures | Cannot handle context-sensitive languages | Parsing programming languages, compiler design |

| Cellular Automata | Computational power varies depending on rules; can be Turing-complete | Can model complex systems with simple rules; highly parallel | Difficult to design and analyze; can be computationally expensive | Simulation of physical systems, image processing |

| Quantum Computer | Potentially exponentially more powerful than classical computers for certain problems | Can solve some problems intractable for classical computers (e.g., factoring large numbers) | Still in early stages of development; prone to errors; requires specialized hardware | Cryptography, drug discovery, materials science |

The computational power of these models increases from finite automata to Turing machines, with quantum computers potentially exceeding even Turing machines for specific problem classes. However, increased power often comes at the cost of increased complexity and resource requirements. Each model is best suited for particular types of problems, highlighting the diversity of computational approaches.

Evolution of Computability Understanding

The field of computability theory has witnessed significant advancements, fundamentally reshaping our understanding of computation. Initially, the focus was on defining what problems are solvable by algorithms. Early limitations included a lack of formal frameworks for defining computation and understanding the boundaries of what algorithms could achieve. The Church-Turing thesis, which posits that any effectively computable function can be computed by a Turing machine, provided a crucial foundational step.

However, it remains a thesis, not a proven theorem, and continues to be debated in the context of novel computational paradigms such as quantum computing.Key breakthroughs included the development of the Turing machine and lambda calculus, providing mathematically rigorous models of computation. The proof of the undecidability of the halting problem demonstrated inherent limits to computation, highlighting problems for which no algorithm can provide a solution.

This had a profound impact on computer science, influencing the design of programming languages, algorithms, and the understanding of computational complexity. The development of complexity theory further refined our understanding, categorizing problems based on their resource requirements. This enabled a more nuanced approach to problem-solving, identifying problems solvable in polynomial time (P) versus those believed to require exponential time (NP).The impact on computer science is immense.

The theoretical foundations established by computability theory directly inform the design and analysis of algorithms and data structures. Understanding computational complexity helps guide the selection of algorithms and the development of efficient software. The theoretical limits established by computability theory have shaped the expectations of what computers can achieve, fostering realistic approaches to problem-solving.Current challenges include refining our understanding of the Church-Turing thesis in the context of quantum computing and other unconventional models.

The question of whether quantum computers can solve problems beyond the capabilities of classical computers remains a central focus of research. Further exploration of the relationships between different complexity classes and the development of new computational models continue to push the boundaries of our understanding of computation.

Visual Representation of a Chosen Model: Finite Automata

Finite automata are well-suited for solving problems involving pattern matching in strings. Consider the problem of determining whether a string contains the substring “011”. A finite automaton can be designed to accept strings containing this substring.The automaton would have four states:State 0: Initial stateState 1: After reading ‘0’State 2: After reading ’01’State 3: After reading ‘011’ (accepting state)Transitions:State 0 on ‘0’ goes to State 1State 1 on ‘1’ goes to State 2State 2 on ‘1’ goes to State 3State 3 on any input stays in State 3All other transitions go back to State 0.This automaton has a time complexity of O(n), where n is the length of the input string (linear time), and a constant space complexity, O(1), as the number of states is fixed.

Computability Theory and Artificial Intelligence

Computability theory, the branch of mathematics exploring what problems can be solved algorithmically, provides a crucial theoretical framework for understanding the capabilities and limitations of artificial intelligence (AI). The inherent limitations defined by computability theory directly impact the design, development, and ultimate potential of AI systems. This relationship is particularly relevant as AI systems become increasingly sophisticated and their applications broaden.The theoretical limits of AI are significantly shaped by computability theory.

Specifically, the concept of undecidability—the existence of problems for which no algorithm can provide a solution in all cases—poses fundamental constraints on what AI can achieve. This means certain tasks, regardless of computational power, will remain beyond the reach of even the most advanced AI systems. Understanding these limits is critical for setting realistic expectations and directing research efforts toward achievable goals.

Computability Theory’s Influence on AI Algorithm Development

Computability theory informs the design of AI algorithms in several key ways. The choice of algorithms for specific tasks is often dictated by considerations of computational complexity, a direct consequence of computability theory’s focus on resource requirements. For instance, the selection between a polynomial-time algorithm and an exponential-time algorithm for a particular problem has profound implications for the scalability and practicality of an AI system.

The development of efficient algorithms, a central theme in both fields, ensures AI systems can operate within acceptable time and resource constraints. Furthermore, techniques from computability theory, such as the use of Turing machines as models of computation, provide a formal framework for analyzing and verifying the correctness of AI algorithms. This rigorous approach helps to build trust and reliability in AI systems, especially in safety-critical applications.

Undecidability and Artificial General Intelligence (AGI)

The concept of undecidability presents significant challenges to the pursuit of artificial general intelligence (AGI). AGI aims to create AI systems with human-level cognitive abilities, capable of tackling a wide range of tasks. However, the existence of undecidable problems implies that no AGI system, regardless of its sophistication, can solve every conceivable problem. This inherent limitation necessitates a shift in focus from striving for universal problem-solving capabilities to developing AI systems that excel in specific domains while acknowledging their limitations.

The pursuit of AGI must therefore grapple with the reality that some problems are fundamentally intractable, requiring a more nuanced approach to AI design and development that considers these theoretical boundaries. For example, the halting problem, a classic example of undecidability, demonstrates that it is impossible to create a general algorithm that can determine whether an arbitrary program will halt or run forever.

This has direct implications for the design of self-improving AI systems, as the ability to predict the behavior of such systems is fundamentally limited. This understanding of undecidability necessitates a more cautious and robust approach to the development of increasingly complex AI systems.

The Impact of New Technologies on Computability

The field of computability theory, traditionally focused on Turing machines and their limitations, is undergoing a significant transformation due to the emergence of novel computing paradigms. These advancements challenge established theoretical boundaries and necessitate a reassessment of what is computationally feasible. This section explores the influence of these emerging technologies, offering predictions for the future of computability and examining how new computing models might alter existing theoretical frameworks.Neuromorphic computing, inspired by the structure and function of the human brain, presents a particularly compelling example.

Unlike traditional von Neumann architectures, neuromorphic systems process information in a massively parallel and distributed manner. This fundamentally alters the way computation is defined and measured, potentially rendering some traditional complexity analyses obsolete. The inherent stochasticity and fault tolerance of these systems also pose challenges to the deterministic models that underpin much of classical computability theory.

Neuromorphic Computing and its Challenges to Classical Computability, Is computability theory died

Neuromorphic computing’s departure from the sequential, deterministic nature of Turing machines presents a unique challenge to classical computability theory. The parallel, asynchronous processing within neuromorphic hardware makes it difficult to directly apply existing models of computation and complexity. For instance, analyzing the time complexity of an algorithm running on a neuromorphic chip requires new metrics that account for the concurrent execution of numerous operations.

Furthermore, the stochastic nature of neuronal computations, influenced by factors like noise and synaptic weight variations, introduces uncertainty that traditional models struggle to capture. Researchers are actively developing new theoretical frameworks to address these challenges, including probabilistic models of computation and novel complexity measures tailored to the characteristics of neuromorphic systems. One example is the exploration of how to map traditional algorithms onto neuromorphic architectures efficiently, a process which is not straightforward due to the differing computational paradigms.

Success in this area would require novel approaches to algorithm design and analysis.

Predictions for the Future of Computability

The convergence of several technological advancements, including neuromorphic computing, quantum computing, and advanced materials science, is poised to reshape the landscape of computability. We can predict an increasing focus on probabilistic and approximate computing, reflecting the inherent uncertainty in many real-world problems and the limitations of deterministic models in capturing complex systems. Furthermore, the development of new theoretical frameworks will be crucial to understand and harness the computational power of unconventional architectures.

For example, we might see the emergence of new complexity classes that specifically address the capabilities and limitations of neuromorphic or quantum systems. This could lead to a more nuanced understanding of the fundamental limits of computation, going beyond the limitations established by Turing’s work. Consider, for instance, the potential for neuromorphic systems to efficiently solve problems currently considered intractable for classical computers, potentially leading to breakthroughs in areas like artificial intelligence and drug discovery.

However, a corresponding increase in the difficulty of verifying the correctness of computations performed on such systems is also expected.

Quantum Computing’s Impact on Computability

Quantum computing, leveraging the principles of quantum mechanics, introduces a fundamentally different computational paradigm. Quantum algorithms, such as Shor’s algorithm for factoring large numbers, demonstrate the potential to solve problems intractable for classical computers. However, the theoretical framework for analyzing quantum computation remains an active area of research. Existing models, while providing insights into the power of quantum algorithms, often struggle to capture the full complexity of quantum computation, especially when dealing with noisy intermediate-scale quantum (NISQ) devices.

The development of robust error-correction techniques and more sophisticated models of quantum complexity is essential for fully realizing the potential of quantum computing and understanding its implications for computability theory. For example, the development of fault-tolerant quantum computers could potentially lead to the discovery of new algorithms that efficiently solve problems previously thought to be unsolvable, challenging existing classifications of computational complexity.

Applications of Computability in Cryptography

Computability theory plays a fundamental role in the design, analysis, and security evaluation of cryptographic systems. It provides the theoretical framework for understanding the limits of computation and, consequently, the inherent strengths and weaknesses of cryptographic algorithms. The feasibility of breaking a cipher is directly tied to the computational complexity of the underlying mathematical problems.The core principle lies in establishing a clear asymmetry between the computational effort required to perform a cryptographic operation (e.g., encryption) and the effort required to reverse it (e.g., decryption without the key).

This asymmetry relies on the existence of computationally hard problems – problems solvable in principle, but practically infeasible to solve within a reasonable timeframe using even the most powerful computers.

Computational Hardness in Cryptography

The security of many cryptographic systems hinges on the assumed computational hardness of specific mathematical problems. For example, the widely used RSA cryptosystem relies on the difficulty of factoring large numbers into their prime components. The computational complexity of integer factorization is believed to be super-polynomial, meaning the time required grows exponentially with the size of the number. Similarly, the Diffie-Hellman key exchange protocol relies on the discrete logarithm problem, which is also considered computationally hard for appropriately chosen groups.

The security of these systems is directly proportional to the computational infeasibility of solving these underlying problems. Advances in quantum computing, however, pose a potential threat to the security of these systems, as some quantum algorithms can solve these problems significantly faster than classical algorithms.

Examples of Cryptographic Primitives Relying on Computability Concepts

Several cryptographic primitives directly utilize concepts from computability theory. One-way functions, for instance, are functions that are easy to compute but computationally infeasible to invert. These are crucial for building cryptographic hash functions, digital signatures, and other cryptographic tools. Pseudorandom number generators (PRNGs) are another example; their ability to produce sequences of numbers that are indistinguishable from truly random sequences relies on the computational complexity of predicting the next number in the sequence.

The security of these generators is closely linked to the computational hardness of the underlying algorithms. The design of secure PRNGs is an active area of research, with new algorithms and techniques continually being developed to address potential vulnerabilities.

Impact of Computability Advancements on Cryptographic Security

Advancements in computability, particularly in the field of quantum computing, significantly impact the security of existing cryptographic systems. Quantum algorithms like Shor’s algorithm can efficiently solve problems like integer factorization and the discrete logarithm problem, posing a threat to widely used public-key cryptosystems such as RSA and Diffie-Hellman. This necessitates the development of post-quantum cryptography, which focuses on designing cryptographic systems resistant to attacks from quantum computers.

This field actively explores alternative mathematical problems believed to be hard even for quantum computers, such as lattice problems, code-based cryptography, and multivariate cryptography. The development and standardization of post-quantum cryptographic algorithms are ongoing processes, crucial for maintaining the security of digital infrastructure in the era of quantum computing.

The question of whether computability theory is “dead” is complex. While its foundational principles remain crucial, new applications constantly emerge, pushing its boundaries. For instance, advancements in image processing, like the innovative weak light relighting algorithm based on prior knowledge , demonstrate how computational limits are continually challenged and redefined. Therefore, declaring computability theory defunct seems premature.

Computability and the Limits of Computation

Computability theory, while illuminating the power of computation, also reveals its inherent limitations. Understanding these boundaries is crucial for developing realistic expectations about what problems can and cannot be solved algorithmically, and for designing efficient algorithms for those problems that are solvable. This exploration delves into the concept of undecidability, examines computationally intractable problems, and considers the philosophical implications arising from these limitations.Undecidability and its Implications for Problem-SolvingUndecidability refers to the existence of problems for which no algorithm can provide a solution in all cases.

A classic example is the Halting Problem, famously proven undecidable by Alan Turing. This problem asks whether a given program will eventually halt or run forever. Turing demonstrated that no general algorithm can definitively answer this question for all possible programs and inputs. The implications of undecidability are profound: it means that some problems are inherently unsolvable by computational means, regardless of the computational power available.

This limits our ability to automate certain decision-making processes and necessitates the development of alternative approaches, such as heuristic methods or probabilistic algorithms, to tackle such problems.

Computationally Intractable Problems

Certain problems, while decidable, are computationally intractable, meaning the time or resources required to solve them grow exponentially with the input size. These problems are often classified as belonging to the complexity class NP-complete. Examples include the Traveling Salesperson Problem (finding the shortest route visiting all cities and returning to the origin), the Boolean Satisfiability Problem (SAT) (determining if there exists an assignment of truth values to variables that satisfies a given Boolean formula), and the Knapsack Problem (selecting a subset of items with maximum value within a given weight limit).

The significance of these problems lies in their pervasive presence across various fields, from logistics and operations research to artificial intelligence and cryptography. The inability to solve these problems efficiently for large instances significantly impacts practical applications. Approximation algorithms and heuristic methods are often employed to find near-optimal solutions within reasonable time constraints.

Philosophical Implications of the Limits of Computation

The existence of undecidable and intractable problems raises profound philosophical questions. It challenges the notion of absolute computational power and highlights the inherent limitations of algorithmic approaches to problem-solving. This understanding compels a reassessment of our reliance on computation as the ultimate tool for understanding and solving complex problems. It necessitates the exploration of alternative problem-solving paradigms, emphasizing the importance of human intuition, creativity, and judgment in tackling challenges that elude purely computational solutions.

The limitations of computation also underscore the importance of carefully defining problem scope and selecting appropriate algorithmic techniques based on the inherent complexity of the problem at hand. It encourages a more nuanced perspective on the capabilities and limitations of computational systems in addressing real-world challenges.

Computability Theory and Formal Languages: Is Computability Theory Died

Computability theory and the theory of formal languages are deeply intertwined, with the former providing a framework for understanding the limits of computation and the latter offering a precise mathematical tool for describing and analyzing computational processes. The connection lies in the ability of formal languages to model the input and output of computational models, and in the direct correspondence between classes of formal languages and the computational power of various automata.

This relationship allows us to classify problems based on their inherent complexity and to determine which computational models are capable of solving them.

The Relationship Between Language Classes and Computational Power

The limitations of Turing machines directly influence the expressiveness of formal languages. Different classes of formal languages, such as regular, context-free, and recursively enumerable languages, correspond to different levels of computational power, mirroring the capabilities of finite automata, pushdown automata, and Turing machines, respectively. The following table summarizes this relationship:

| Language Class | Computational Power | Example of a Problem Solvable | Example of a Problem Unsolvable |

|---|---|---|---|

| Regular Languages | Finite Automata | Lexical Analysis (identifying tokens in a program) | Parsing Context-Free Grammars |

| Context-Free Languages | Pushdown Automata | Parsing Programming Languages (checking syntax) | Deciding the Halting Problem |

| Recursively Enumerable Languages | Turing Machines | Enumerating Prime Numbers | Deciding if a Turing Machine Halts |

| Recursive Languages | Turing Machines that always halt | Sorting a list of numbers | The Halting Problem |

Examples of Formal Languages Modeling Computation

Formal languages are crucial for modeling various aspects of computation.

(a) Modeling the Syntax of Programming Languages: Programming languages are defined using formal grammars, typically context-free grammars. For example, a simple grammar rule for arithmetic expressions might be: `expression → expression + term | term`. This rule states that an expression can be either an expression followed by a ‘+’ and a term, or simply a term. A parse tree visually represents the hierarchical structure of a sentence according to the grammar.

For instance, the expression “2 + 3

– 4″ would have a parse tree showing the order of operations, with multiplication having higher precedence than addition.

(b) Specifying the Behavior of Systems: Formal specification languages like Z or VDM use formal languages to describe the desired behavior of a system. A simple Z specification might describe a counter with an operation to increment it. The state of the counter would be defined as a variable, and the increment operation would be described using predicates that define the relationship between the state before and after the operation.

For example, the operation could be specified as: ΔCounter; counter’ := counter’ + 1.

(c) Describing the Input/Output Behavior of Algorithms: Regular expressions are a powerful tool for specifying patterns in strings, commonly used to describe the input/output behavior of algorithms dealing with text or data streams. For example, the regular expression `\d3-\d3-\d4` describes a North American phone number pattern (three digits, a hyphen, three digits, a hyphen, and four digits).

The Role of Automata Theory in Understanding Computability

Automata theory provides a hierarchy of computational models, each corresponding to a specific class of decidable problems. Finite automata can solve problems involving regular languages, such as lexical analysis. Pushdown automata, with their stack memory, can handle context-free languages, such as parsing programming languages. Turing machines, the most powerful model, can solve problems involving recursively enumerable languages. A problem solvable by a pushdown automaton but not a finite automaton is the recognition of balanced parentheses.

A pushdown automaton can use its stack to keep track of opening parentheses, ensuring that each opening parenthesis is matched by a closing parenthesis. A finite automaton, with its limited memory, cannot perform this task reliably for arbitrarily long strings.

The Church-Turing thesis states that any function which can be effectively calculated can be calculated by a Turing machine. This thesis underpins the entire field of computability theory, providing a foundational model for understanding the limits of computation.

Comparing the Chomsky Hierarchy and the Automata Hierarchy

The Chomsky hierarchy classifies formal languages based on their grammatical complexity, while the corresponding automata hierarchy classifies computational models based on their computational power. These two hierarchies are intimately related. Type 0 grammars (unrestricted grammars) correspond to Turing machines, capable of recognizing recursively enumerable languages. Type 1 grammars (context-sensitive grammars) correspond to linear-bounded automata, which can recognize context-sensitive languages.

Type 2 grammars (context-free grammars) correspond to pushdown automata, recognizing context-free languages. Finally, Type 3 grammars (regular grammars) correspond to finite automata, recognizing regular languages. Each level in the Chomsky hierarchy represents a decrease in the complexity of the grammar and a corresponding decrease in the computational power of the associated automaton. For instance, regular languages, defined by regular grammars and recognized by finite automata, are less expressive than context-free languages, which require pushdown automata for recognition due to their more complex structure allowing for nested structures like parentheses.

The Turing machine, representing the most powerful model in the automata hierarchy, corresponds to the most general type of grammar, capable of generating the most complex languages. This parallel illustrates the fundamental connection between the descriptive power of formal languages and the computational power of automata.

Case Studies of Computability in Practice

This section presents three diverse case studies illustrating the practical application of computability theory across various domains. Each case study highlights a unique computational problem, its solution based on computability principles, and the resulting impact. The focus is on demonstrating the tangible benefits derived from theoretical concepts.

Public-Key Cryptography

| Feature | Description | Data Type | Constraints | Example |

|---|---|---|---|---|

| Problem | Securely exchanging information over an insecure channel. | String | Maximum 150 characters | Enabling secure online banking transactions. |

| Solution | RSA algorithm using modular arithmetic and prime factorization. | String | Maximum 200 characters | Encryption and decryption using public and private keys. |

| Impact | Enabled secure e-commerce and online communication. | String | Maximum 100 characters; include metrics | Foundation for secure internet protocols. |

| Algorithm Class | Number-theoretic | String | Predefined list: Number-theoretic, Greedy, Dynamic Programming, Divide and Conquer, Graph Traversal | Number-theoretic |

| Time Complexity | Encryption: O(n3); Decryption: Dependent on factorization difficulty. | String | Correct Big O notation required | O(n3) |

| Space Complexity | O(n) | String | Correct Big O notation required | O(n) |

The RSA algorithm’s reliance on the computational difficulty of factoring large numbers underscores the fundamental role of complexity classes in cryptography. The security of the system directly depends on the assumed intractability of certain computational problems.Rivest, R. L., Shamir, A., & Adleman, L. (1978). A method for obtaining digital signatures and public-key cryptosystems.

Communications of the ACM, 21(2), 120-126.

Genome Sequencing Alignment

| Feature | Description | Data Type | Constraints | Example |

|---|---|---|---|---|

| Problem | Aligning two DNA sequences to identify similarities and differences. | String | Maximum 150 characters | Comparing a patient’s DNA to a known virus sequence. |

| Solution | Smith-Waterman algorithm for local sequence alignment. | String | Maximum 200 characters | Dynamic programming to find optimal alignment. |

| Impact | Improved accuracy and speed in genomic analysis. | String | Maximum 100 characters; include metrics | Faster disease diagnosis and drug development. |

| Algorithm Class | Dynamic Programming | String | Predefined list: Number-theoretic, Greedy, Dynamic Programming, Divide and Conquer, Graph Traversal | Dynamic Programming |

| Time Complexity | O(mn) where m and n are sequence lengths. | String | Correct Big O notation required | O(mn) |

| Space Complexity | O(mn) | String | Correct Big O notation required | O(mn) |

The Smith-Waterman algorithm’s application showcases the power of dynamic programming in solving complex biological problems. Its efficient approach to finding optimal alignments is a direct consequence of well-defined computational steps and the algorithm’s inherent decidability.Smith, T. F., & Waterman, M. S. (1981).

Identification of common molecular subsequences. Journal of molecular biology, 147(1), 195-197.

Database Query Optimization

| Feature | Description | Data Type | Constraints | Example |

|---|---|---|---|---|

| Problem | Efficiently retrieving specific data from a large database. | String | Maximum 150 characters | Finding all customers who made a purchase in the last month. |

| Solution | Query optimization using cost-based query planning. | String | Maximum 200 characters | Selecting the most efficient execution plan among multiple possibilities. |

| Impact | Significant reduction in query response time. | String | Maximum 100 characters; include metrics | Improved database performance and scalability. |

| Algorithm Class | Greedy | String | Predefined list: Number-theoretic, Greedy, Dynamic Programming, Divide and Conquer, Graph Traversal | Greedy |

| Time Complexity | Variable, depends on the specific query and database structure. | String | Correct Big O notation required | Highly variable |

| Space Complexity | Variable, depends on the query and data accessed. | String | Correct Big O notation required | Highly variable |

Database query optimization demonstrates the practical relevance of algorithmic efficiency. The selection of optimal query execution plans directly relates to the decidability and complexity of the underlying relational algebra operations. Efficient algorithms minimize resource consumption and ensure timely responses to user queries.Garcia-Molina, H., Ullman, J. D., & Widom, J. (2002).

Database system implementation. Pearson Education.

Future Directions in Computability Research

Computability theory, while possessing a rich history, remains a vibrant field with significant potential for future advancements. Three particularly promising areas warrant focused investigation: the intersection of computability and quantum mechanics, the exploration of hypercomputation, and the application of computability theory to biological systems. These areas offer unique opportunities for both theoretical breakthroughs and practical applications, pushing the boundaries of our understanding of computation.

Quantum Computability and its Implications

Quantum computing presents a paradigm shift in computational models, offering the potential to solve problems intractable for classical computers. Research in this area focuses on understanding the limits and capabilities of quantum computation within the framework of computability theory.Research Question 1: Can we definitively characterize the class of problems solvable by quantum computers that are provably unsolvable by classical Turing machines?Research Question 2: What are the fundamental limitations of quantum computation, and how do these limitations relate to the inherent properties of quantum mechanics?Methodology 1: To address the first question, researchers will employ techniques from quantum complexity theory, analyzing the computational power of various quantum algorithms and comparing them to known complexity classes.

This will involve rigorous mathematical proofs and potentially the development of new complexity measures specific to quantum computation. Limitations include the difficulty of proving lower bounds on quantum computational complexity.Methodology 2: The second question will be investigated using a combination of theoretical analysis and experimental simulation. Researchers will explore the role of decoherence, entanglement, and other quantum phenomena in limiting the computational power of quantum computers.

Simulations will be used to explore the behavior of quantum algorithms under various noise models. Limitations include the current limitations of quantum computing hardware and the inherent difficulty in accurately simulating large-scale quantum systems.Expected Outcomes: This research could lead to a deeper understanding of the fundamental differences between classical and quantum computation, potentially identifying new classes of problems uniquely suited for quantum algorithms.

This could have significant implications for fields such as cryptography and materials science.Potential Challenges: The major challenges include the difficulty of designing and implementing fault-tolerant quantum computers and the lack of well-established techniques for proving lower bounds in quantum complexity theory.

Hypercomputation and its Theoretical Foundations

Hypercomputation explores computational models that transcend the limitations of Turing machines, potentially offering solutions to currently intractable problems. This area delves into theoretical frameworks that challenge the Church-Turing thesis.Research Question 1: Can a rigorous mathematical framework be developed to define and analyze hypercomputational models, while maintaining consistency and avoiding paradoxes?Research Question 2: Can hypercomputational models be practically implemented, even if only for specific problem classes, and what are the technological implications?Methodology 1: The first question will be addressed through rigorous mathematical analysis, focusing on the development of consistent axiomatic systems and exploring the implications of different hypercomputational models.

This may involve developing new logical systems and proof techniques. Limitations include the potential for inconsistencies in newly developed frameworks.Methodology 2: The second question will involve exploring potential physical implementations of hypercomputational models. This could involve investigating exotic physical phenomena, such as those observed in black holes or quantum gravity, that might enable computations beyond Turing’s limits. Limitations include the highly speculative nature of the proposed implementations and the current lack of experimental evidence.Expected Outcomes: This research could fundamentally alter our understanding of the limits of computation, potentially leading to breakthroughs in problem domains currently considered unsolvable.Potential Challenges: The primary challenges lie in the highly speculative nature of hypercomputation and the difficulty of verifying the correctness of hypercomputational models.

Computability in Biological Systems

Biological systems exhibit remarkable computational capabilities, often surpassing the performance of artificial systems. Research in this area focuses on applying computability theory to understand and model these capabilities.Research Question 1: Can we develop formal models of biological computation that accurately capture the emergent behavior of complex biological systems?Research Question 2: Can principles from computability theory be used to design novel bio-inspired algorithms and computational architectures?Methodology 1: The first question will be addressed using a combination of computational modeling and biological experimentation.

Researchers will develop mathematical models of biological processes, such as gene regulation or neural networks, and compare the model predictions to experimental data. Limitations include the complexity of biological systems and the difficulty in obtaining comprehensive experimental data.Methodology 2: The second question will involve applying insights from biological computation to design new algorithms and computational architectures. This could involve exploring novel computational paradigms inspired by biological processes such as evolution or immune systems.

Limitations include the difficulty of translating biological principles into efficient computational algorithms.Expected Outcomes: This research could lead to the development of more efficient and robust algorithms, inspired by the efficiency and resilience of biological systems.Potential Challenges: The main challenges include the complexity and heterogeneity of biological systems, and the difficulty of bridging the gap between biological and computational models.

Summary of Research Areas

| Area of Research | Research Question 1 | Methodology 1 | Research Question 2 | Methodology 2 |

|---|---|---|---|---|

| Quantum Computability | Can we definitively characterize the class of problems solvable by quantum computers that are provably unsolvable by classical Turing machines? | Quantum complexity theory, rigorous mathematical proofs, development of new complexity measures. | What are the fundamental limitations of quantum computation, and how do these limitations relate to the inherent properties of quantum mechanics? | Theoretical analysis, experimental simulation, exploration of quantum phenomena. |

| Hypercomputation | Can a rigorous mathematical framework be developed to define and analyze hypercomputational models, while maintaining consistency and avoiding paradoxes? | Rigorous mathematical analysis, development of consistent axiomatic systems, new logical systems and proof techniques. | Can hypercomputational models be practically implemented, even if only for specific problem classes, and what are the technological implications? | Exploration of exotic physical phenomena, potential physical implementations. |

| Computability in Biological Systems | Can we develop formal models of biological computation that accurately capture the emergent behavior of complex biological systems? | Computational modeling, biological experimentation, comparison of model predictions to experimental data. | Can principles from computability theory be used to design novel bio-inspired algorithms and computational architectures? | Application of insights from biological computation, design of new algorithms and computational architectures. |

The potential impact of these research areas extends beyond computability theory. Advances in quantum computability will revolutionize fields reliant on computationally intensive tasks. Hypercomputation, while highly speculative, could lead to fundamental shifts in our understanding of the universe and the nature of reality. Research into biological computation has the potential to revolutionize artificial intelligence, leading to more efficient and robust algorithms and systems. Ethical considerations include the potential misuse of powerful quantum computers and the potential societal disruption from significant advancements in hypercomputation. The feasibility of these research areas depends on advancements in quantum computing hardware, the development of new theoretical frameworks, and the availability of sufficient computational resources.

Illustrative Examples of Computable and Uncomputable Functions

This section presents illustrative examples of both computable and uncomputable functions, highlighting the key distinctions between these two fundamental categories within computability theory. Understanding this distinction is crucial for comprehending the limits of computation and the capabilities of algorithms. We will provide detailed explanations of each function, including their algorithms (where applicable), inputs, outputs, and the reasons for their classification.

Computable Functions: Examples

The following examples demonstrate functions that can be computed by a Turing machine, or equivalently, by an algorithm that executes in finite time for all valid inputs.

- Factorial Function

The factorial function, denoted as n!, calculates the product of all positive integers up to n. The input domain is the set of non-negative integers (ℕ), and the range is also ℕ.

- Algorithm:

- If n = 0, return 1 (base case).

- Otherwise, return n multiplied by the factorial of n-1 (recursive step).

- Examples:

- Input: n = 5; Output: 120 (5 × 4 × 3 × 2 × 1)

- Input: n = 0; Output: 1 (base case)

Step-by-step execution for n = 3:

- factorial(3) = 3 – factorial(2)

- factorial(2) = 2 – factorial(1)

- factorial(1) = 1 – factorial(0)

- factorial(0) = 1

- factorial(1) = 1 – 1 = 1

- factorial(2) = 2 – 1 = 2

- factorial(3) = 3 – 2 = 6

- Time Complexity: O(n). The algorithm’s runtime is directly proportional to the input value n.

- Palindrome Checker

This function determines whether a given string is a palindrome (reads the same forwards and backward). The input domain is the set of all strings, and the range is true, false. The function will ignore case, spaces, and punctuation.

- Algorithm:

- Convert the input string to lowercase.

- Remove all spaces and punctuation from the string.

- Compare the resulting string to its reverse. If they are equal, return true; otherwise, return false.

- Examples:

- Input: “A man, a plan, a canal: Panama”; Output: true

- Input: “hello”; Output: false

- Time Complexity: O(n), where n is the length of the string. The algorithm needs to traverse the string once to process it and once to reverse it.

- Integer List Sorting (Merge Sort)

This function sorts a list of integers using the merge sort algorithm. The input domain is the set of all lists of integers, and the range is the set of all sorted lists of integers.

- Algorithm (Merge Sort): Merge sort recursively divides the list into smaller sublists until each sublist contains only one element. Then it repeatedly merges the sublists to produce new sorted sublists until there is only one sorted list remaining.

- Examples:

- Input: [5, 2, 8, 1, 9, 4]; Output: [1, 2, 4, 5, 8, 9]

- Input: [10, 20, 30]; Output: [10, 20, 30]

- Time Complexity: O(n log n). Merge sort’s time complexity is significantly better than algorithms like bubble sort (O(n 2)) for large lists.

Uncomputable Functions: Examples

These examples illustrate functions for which no algorithm can exist that computes the correct output for all possible inputs in finite time.

- Halting Problem Function

This function takes as input the description of a Turing machine (M) and its input (x) and determines whether M halts on input x.

- Input: A Turing machine M and its input x.

- Intended Output: “halts” if M halts on input x, “loops” otherwise.

- Uncomputability: This function is uncomputable due to the undecidability of the Halting Problem. There is no general algorithm that can determine, for all possible Turing machines and inputs, whether the machine will halt or run forever. This is a fundamental result in computability theory. Attempting to construct such an algorithm leads to a contradiction, as demonstrated by Turing’s original proof.

- Relationship to the Halting Problem: The function is directly equivalent to the Halting Problem. If such a function were computable, the Halting Problem would be decidable, which is known to be false.

- Diophantine Equation Solvability

This function determines whether a given Diophantine equation (a polynomial equation with integer coefficients) has a solution in non-negative integers.

- Input: A Diophantine equation.

- Intended Output: “solvable” if the equation has a solution in non-negative integers, “unsolvable” otherwise.

- Uncomputability: This function’s uncomputability is a consequence of Hilbert’s Tenth Problem, which was proven undecidable by Yuri Matiyasevich. There is no algorithm that can determine, for all Diophantine equations, whether a solution exists in non-negative integers.

- Relationship to Hilbert’s Tenth Problem: The function directly addresses the question posed by Hilbert’s Tenth Problem, which was shown to be undecidable. The undecidability of Hilbert’s Tenth Problem implies the uncomputability of this function.

- Program Output Prediction

This function predicts the output of any arbitrary program given its source code and input.

- Input: The source code of a program and its input.

- Intended Output: The output of the program when run with the given input.

- Uncomputability: This function is uncomputable because it can be used to solve the Halting Problem. If we could predict the output of any program, we could determine whether a program halts by checking if its predicted output is defined. Since the Halting Problem is undecidable, this program output prediction function must also be uncomputable.

- Relationship to the Halting Problem: The ability to predict the output of any program would imply the ability to solve the Halting Problem, which is impossible. Therefore, the function is uncomputable.

Summary Table of Examples

| Example # | Function Type | Function Description | Reason for Computability/Uncomputability |

|---|---|---|---|

| 1 | Computable | Factorial of a non-negative integer | Algorithm exists to compute the factorial for any non-negative integer input. |

| 2 | Computable | Palindrome checker for strings | Algorithm exists to check if a string is a palindrome, ignoring case, spaces, and punctuation. |

| 3 | Computable | Integer list sorting using merge sort | Merge sort algorithm efficiently sorts a list of integers. |

| 4 | Uncomputable | Halting problem function | Undecidability of the Halting Problem proves this function’s uncomputability. |

| 5 | Uncomputable | Diophantine equation solvability | Undecidability of Hilbert’s Tenth Problem proves this function’s uncomputability. |

| 6 | Uncomputable | Program output prediction | Solving this would imply solving the Halting Problem, which is impossible. |

FAQs

What is the Church-Turing thesis, and why is it important?

Simply put, the Church-Turing thesis suggests that anything computable by an algorithm can be computed by a Turing machine (a theoretical model of computation). It’s important because it provides a foundational framework for understanding the limits of computation – what problems can and cannot be solved algorithmically. Think of it as the ultimate “can’t be done” guide for computers.

Are there any ethical considerations related to computability theory?

Absolutely! The design of algorithms, inherently linked to computability, can reflect and even amplify existing societal biases. Furthermore, access to computational resources is unevenly distributed, leading to potential inequalities. These are critical issues demanding careful consideration.

What’s the difference between decidable and undecidable problems?

Decidable problems have algorithms that can always determine a “yes” or “no” answer in a finite amount of time. Undecidable problems, on the other hand, are proven impossible to solve algorithmically for all inputs – the infamous Halting Problem is a prime example. It’s like trying to predict the future: sometimes you can get lucky, but you’ll never be right all the time.