BU computer science theory is an exploration of the fundamental principles governing the digital world. This journey delves into the core concepts of abstraction, algorithms, data structures, and complexity, revealing their interrelationships and practical applications. We’ll examine how theoretical models like Turing machines and lambda calculus shape our understanding of computation, while also acknowledging their inherent limitations.

The exploration will extend to the realms of computability, complexity classes, and the ever-evolving landscape of cryptography and security in the digital age.

This comprehensive overview will unpack the theoretical underpinnings of various computer science domains, including database theory, parallel and distributed computing, artificial intelligence, programming language theory, and formal verification. By examining these core elements, we aim to provide a solid foundation for understanding the theoretical basis of modern computing and its future trajectory. The discussion will emphasize clarity and accessibility, minimizing technical jargon and focusing on practical implications.

The Nature of Computer Science Theory

Computer science theory forms the bedrock upon which the digital world is built. It’s not just about writing code; it’s about understanding the fundamental limits and possibilities of computation, providing a rigorous framework for designing efficient and reliable systems. This exploration delves into the core principles, applications, and limitations of this crucial field.

Core Principles of Computer Science Theory

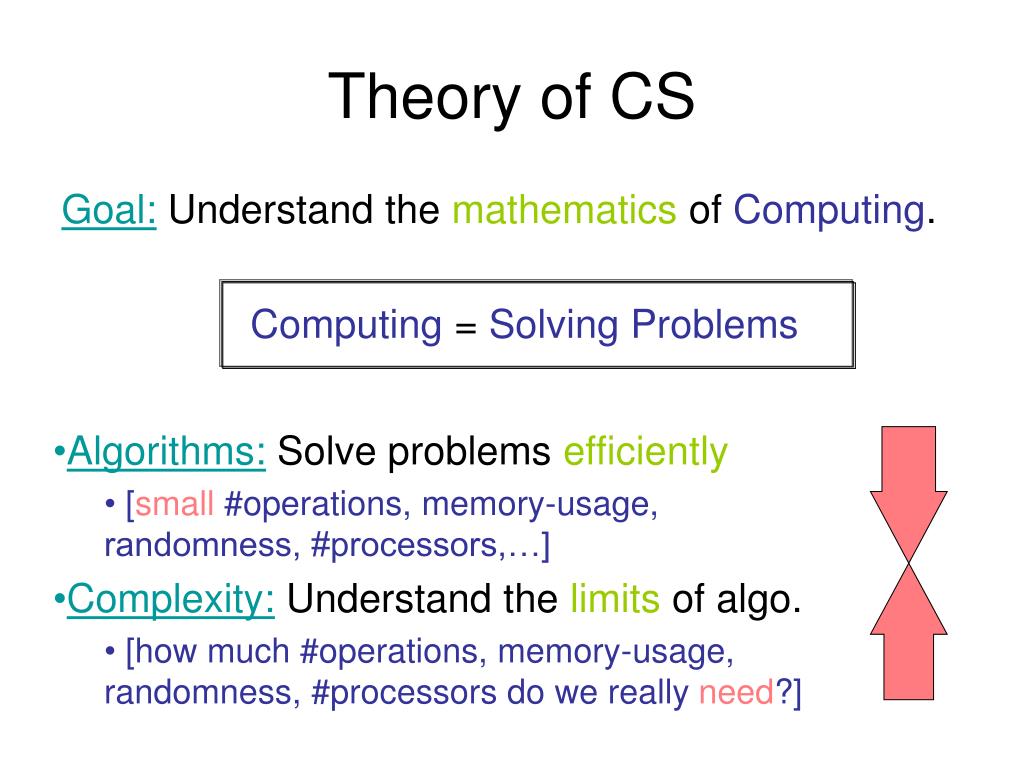

Computer science theory rests on four fundamental pillars: abstraction, algorithms, data structures, and complexity. Abstraction allows us to manage complexity by focusing on essential features and ignoring irrelevant details. Algorithms are step-by-step procedures for solving problems. Data structures organize and manage data efficiently, while complexity theory analyzes the resources (time and space) required by algorithms. These principles are deeply intertwined.

For example, a well-chosen data structure (like a hash table) can significantly improve the efficiency of an algorithm (like searching).

The interrelation can be visualized as follows: Imagine a flowchart. The starting point is a problem. Abstraction helps us define the problem’s core components. We then design an algorithm to solve it, selecting an appropriate data structure to store and manipulate the data. Finally, complexity analysis assesses the algorithm’s efficiency.

The process is iterative; the choice of data structure might influence algorithm design, and complexity analysis may lead to refinements in both algorithm and data structure.

Relationship Between Theoretical Computer Science and Practical Applications

Theoretical concepts are not just abstract musings; they are essential for building real-world systems. The following table illustrates this relationship:

| Theoretical Concept | Real-world Application | Explanation of Application |
|---|---|---|
| Graph Theory | Social Network Analysis | Social networks are modeled as graphs, where nodes represent users and edges represent connections. Graph algorithms are used to identify communities, recommend friends, and analyze network dynamics. |
| Automata Theory | Compiler Design | Compilers use finite automata for lexical analysis (breaking code into tokens) and context-free grammars, recognized by pushdown automata, for parsing (checking grammatical correctness). |
| Computability Theory | Database Query Optimization | Undecidability results (for example, deciding whether two arbitrary relational-algebra queries are equivalent) show which checks cannot be fully automated, so query optimizers rely on heuristics and restricted query classes. |

Implementation of Theoretical Concepts in Real-World Systems

Let’s examine how theoretical concepts are implemented in practice:

Search Engines:

- Graph Theory: The web is modeled as a directed graph, with pages as nodes and links as edges. PageRank, a crucial ranking algorithm, relies on graph traversal and analysis.

- Information Retrieval: Efficient search relies on techniques from information retrieval, using data structures like inverted indexes to quickly locate relevant documents.

Compilers:

- Automata Theory: As mentioned earlier, finite automata are used for lexical analysis. Parsing relies on context-free grammars, which define the language’s syntax and are recognized by pushdown automata.

- Formal Language Theory: Formal language theory provides the mathematical framework for understanding and manipulating programming languages.

Database Management Systems:

- Relational Algebra: Relational algebra provides a formal framework for querying relational databases. SQL queries are essentially expressions in relational algebra.

- Data Structures: Efficient data structures like B-trees are crucial for indexing and quickly accessing data in large databases.
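
Of the structures listed above, the inverted index used by search engines is the simplest to sketch. The following is a minimal illustration, not how any particular search engine works: the function names `build_inverted_index` and `search` are hypothetical, tokenization is a plain whitespace split, and a query matches only documents containing every query word.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercase word to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def search(index, query):
    """Return the ids of documents that contain every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    # Start from the postings of the first word, then intersect the rest.
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result


docs = {
    1: "graph theory basics",
    2: "automata theory",
    3: "graph algorithms",
}
index = build_inverted_index(docs)
print(search(index, "graph theory"))
```

A lookup touches only the postings for the query words, so its cost depends on how many documents contain those words rather than on the size of the whole collection.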

Comparison of Theoretical Models of Computation

Different models capture different aspects of computation.

| Model | Computational Power | Limitations | Suitable Problem Types |
|---|---|---|---|
| Turing Machine | Turing-complete by definition (can compute anything a modern computer can, given unbounded time and memory) | Abstract and not directly implementable; analysis of runtime can be difficult | Modeling any computation; proving undecidability |
| Lambda Calculus | Turing-complete | Can be difficult to understand and implement; less intuitive than Turing machines for some problems | Functional programming; theoretical studies of computation |
| Finite Automata | Strictly less powerful than Turing machines; recognize regular languages only | No unbounded memory, so they cannot match arbitrarily nested structures such as balanced parentheses | Lexical analysis; pattern matching in text; simple state machines |

Limitations of Current Theoretical Models of Computation

- Difficulty in modeling quantum computation: Current models struggle to accurately represent the unique characteristics of quantum computers and algorithms.

- Inherent limitations in handling uncertainty and randomness: While probabilistic models exist, they often don’t fully capture the complexities of real-world systems with inherent uncertainty.

Summary Essay: The Indispensable Role of Computer Science Theory

Computer science theory, far from being a purely academic pursuit, is the lifeblood of technological advancement. Its core principles (abstraction, algorithms, data structures, and complexity) provide a rigorous framework for designing efficient, reliable, and scalable software systems. The interplay between these principles is dynamic, with choices in one area influencing others. For instance, selecting an appropriate data structure directly impacts the efficiency of an algorithm. Complexity analysis, in turn, guides refinements in both algorithm and data structure design.

The practical implications of theoretical concepts are profound. Graph theory underpins social network analysis, search engine algorithms, and network routing. Automata theory is fundamental to compiler design, allowing us to translate human-readable code into machine-executable instructions. Computability theory, while highlighting the inherent limits of computation, guides the design of efficient algorithms and databases by identifying solvable and unsolvable problems.

Real-world systems like search engines, compilers, and database management systems rely heavily on these theoretical foundations. Search engines utilize graph theory and information retrieval techniques for efficient indexing and ranking. Compilers employ automata theory and formal language theory for parsing and code generation. Database systems leverage relational algebra and sophisticated data structures for efficient data management.

However, current theoretical models have limitations. The complexities of quantum computation challenge existing models, and the handling of uncertainty and randomness remains an area for improvement. These limitations highlight the ongoing need for further research and development in computer science theory. The continuous evolution of theoretical models is crucial for pushing the boundaries of computational power and addressing the ever-increasing demands of the digital age.

In conclusion, the theoretical underpinnings of computer science are not merely academic exercises; they are essential for the creation and advancement of the practical technologies that shape our world. A strong theoretical foundation ensures the development of robust, efficient, and reliable systems, capable of handling the complexities of modern computation.

Algorithms and Data Structures

Algorithms and data structures are the bedrock of computer science, forming the very foundation upon which efficient and scalable software is built. Understanding these core concepts is crucial for any aspiring programmer, allowing them to craft elegant solutions to complex problems and optimize software performance. Think of them as the architect’s blueprint and the construction materials for a digital skyscraper.

Without a well-thought-out plan (algorithm) and robust materials (data structures), the building will crumble.

Algorithm Design: Finding the Shortest Path

Let’s consider the classic problem of finding the shortest path between two points on a graph, a common task in GPS navigation systems and network routing. Dijkstra’s algorithm provides an efficient solution. It works by iteratively exploring nodes, assigning them distances from the starting point, and updating these distances as shorter paths are discovered. The algorithm terminates when the destination node’s shortest distance is found.

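Implemented with a binary-heap priority queue, Dijkstra’s algorithm has a time complexity of O((V + E) log V), where E is the number of edges and V is the number of vertices in the graph; for a connected graph this is commonly written O(E log V). The running time therefore grows roughly in proportion to the number of edges multiplied by the logarithm of the number of vertices. The space complexity is O(V) for the table of distances, one entry per vertex. The algorithm assumes that all edge weights are non-negative.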

For a large graph representing a city’s road network, this efficient algorithm ensures quick route calculation.
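
The procedure described above can be sketched with Python's standard `heapq` module. This is a minimal illustration under stated assumptions: the graph is an adjacency dictionary, all weights are non-negative, and the function name `dijkstra` and the sample `roads` data are made up for the example.

```python
import heapq


def dijkstra(graph, source):
    """Return the shortest distance from source to every reachable vertex.

    graph maps each vertex to a list of (neighbor, weight) pairs.
    All weights are assumed to be non-negative.
    """
    dist = {source: 0}
    heap = [(0, source)]  # (distance so far, vertex)
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry: a shorter path to u was already found
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


# Hypothetical road network: vertex -> [(neighbor, travel cost), ...]
roads = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 3)],
    "C": [("B", 2), ("D", 7)],
    "D": [],
}
print(dijkstra(roads, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 6}
```

Instead of decreasing keys in place, this version pushes a new heap entry whenever a shorter path is found and skips outdated entries when they are popped, which keeps the code short at the cost of a somewhat larger heap.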

Data Structure Comparison: Arrays vs. Linked Lists vs. Trees

Different data structures excel in different scenarios. Arrays provide fast access to elements using their index (O(1) time complexity), but adding or deleting elements in the middle can be slow (O(n)). Linked lists, on the other hand, allow efficient insertion and deletion at any point (O(1) if you have a pointer to the insertion/deletion point), but accessing an element requires traversing the list (O(n)).

Trees, such as binary search trees, offer a balance, providing logarithmic time complexity (O(log n)) for search, insertion, and deletion in the average case, though worst-case scenarios can degrade to linear time. The choice depends on the specific application’s needs; if frequent element access is crucial, an array might be preferable; if frequent insertions and deletions are needed, a linked list might be better suited; while for searching and sorting, a balanced tree often offers the best performance.

The Role of Algorithms and Data Structures in Software Development

Algorithms and data structures are fundamental to all aspects of software development. They determine the efficiency, scalability, and maintainability of software. Choosing the right algorithm and data structure can significantly impact performance, reducing execution time and memory consumption. For example, using a hash table to store and retrieve data can drastically improve the speed of a search engine compared to using a linear search on a large dataset.

Similarly, choosing an appropriate sorting algorithm can affect the overall performance of a data processing application. The selection of these core components is a critical design decision that directly impacts the software’s overall quality and user experience.

Common Sorting Algorithm Comparison

The following table compares the time complexities of several common sorting algorithms:

| Algorithm | Best-Case | Average-Case | Worst-Case |
|---|---|---|---|
| Bubble Sort | O(n) | O(n²) | O(n²) |
| Insertion Sort | O(n) | O(n²) | O(n²) |
| Merge Sort | O(n log n) | O(n log n) | O(n log n) |
| Quick Sort | O(n log n) | O(n log n) | O(n²) |

Automata Theory and Formal Languages

Stepping beyond the realm of algorithms and data structures, we delve into the world of Automata Theory and Formal Languages, the theoretical bedrock upon which much of computer science is built. This area explores the power and limitations of computational models, providing a framework for understanding what problems computers can and cannot solve. It’s like learning the grammar of computation itself.

Automata theory uses mathematical models, called automata, to represent computational processes. These models are abstract machines with specific capabilities, allowing us to analyze the complexity of computations and the types of problems they can solve. Understanding these models helps us design efficient algorithms and understand the limits of computation.

Finite Automata

Finite automata (FA) are the simplest type of automata. They are essentially state machines with a finite number of states. An FA reads an input string and transitions between states based on the input symbols. If the FA reaches an accepting state after reading the entire input string, the string is accepted; otherwise, it’s rejected. Finite automata are used in lexical analysis (the first phase of compiling a program), text searching, and pattern matching.

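For instance, a simple FA could be designed to recognize strings over the letters “a” and “b” in which no “b” comes before an “a” (that is, all the “a”s precede all the “b”s). Its states do not store the sequence read so far; they record only whether a “b” has already been seen, plus a rejecting state that is entered if an “a” follows a “b”.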
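
The automaton in this example can be written as a transition table. The sketch below is one possible encoding; the state names `only_a`, `seen_b`, and `dead` are illustrative choices, and any symbol outside the alphabet causes immediate rejection.

```python
# Transition table for a DFA over {a, b} that accepts strings in which
# every "a" comes before every "b" (the regular language a*b*).
TRANSITIONS = {
    ("only_a", "a"): "only_a",
    ("only_a", "b"): "seen_b",
    ("seen_b", "b"): "seen_b",
    ("seen_b", "a"): "dead",   # an "a" after a "b": can never recover
    ("dead", "a"): "dead",
    ("dead", "b"): "dead",
}
ACCEPTING = {"only_a", "seen_b"}


def accepts(s):
    """Run the DFA on s and report whether it ends in an accepting state."""
    state = "only_a"
    for ch in s:
        if (state, ch) not in TRANSITIONS:
            return False  # symbol outside the alphabet {a, b}
        state = TRANSITIONS[(state, ch)]
    return state in ACCEPTING


for word in ["", "aabb", "bbb", "aba"]:
    print(repr(word), accepts(word))
```

The machine reads each symbol exactly once and keeps only its current state, which is what makes finite automata well suited to fast scanning tasks such as lexical analysis.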

Pushdown Automata

Pushdown automata (PDA) extend finite automata by adding a stack. This stack allows the PDA to remember information about the input string, giving it more computational power than an FA. PDAs can recognize context-free languages, which are more complex than the regular languages recognized by FAs. A classic example of a problem solvable by a PDA is parsing arithmetic expressions, where the stack helps to keep track of operator precedence and parentheses.

The stack’s ability to store and retrieve information enables the PDA to handle nested structures efficiently.
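
The role of the stack can be seen in a bracket checker. This is a deterministic sketch of the idea rather than a formal PDA: the function name `is_balanced` is hypothetical, and characters other than brackets are simply ignored.

```python
# Each closing bracket maps to the opening bracket it must match.
PAIRS = {")": "(", "]": "[", "}": "{"}


def is_balanced(text):
    """Check that every bracket in text is closed in the right order."""
    stack = []
    for ch in text:
        if ch in PAIRS.values():
            stack.append(ch)          # remember the opening bracket
        elif ch in PAIRS:
            # A closing bracket must match the most recent unclosed opener.
            if not stack or stack.pop() != PAIRS[ch]:
                return False
    return not stack                  # leftovers mean unclosed brackets


print(is_balanced("(1 + [2 * 3])"))  # True
print(is_balanced("(1 + [2 * 3)]"))  # False
```

A finite automaton cannot perform this check for arbitrary nesting depth, because it would need a separate state for every possible depth; the stack supplies exactly that unbounded memory.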

Turing Machines

Turing machines (TM) are the most powerful of the three models discussed here. They consist of an unbounded tape, a read/write head, and a finite control unit. The TM can read, write, and move the head along the tape, allowing it to perform any computation that can be performed by a computer. Turing machines are theoretical models, but they are crucial for understanding the limits of computation and the concept of computability.

The Halting Problem, famously proven undecidable by Alan Turing, demonstrates the inherent limitations even of Turing machines. This problem asks whether it’s possible to create an algorithm that can determine whether any given program will eventually halt or run forever. The undecidability of this problem highlights the fundamental limits of what can be computed.

Formal Languages and Their Relationship to Computation

A formal language is a set of strings over a given alphabet. The strings in the language follow specific rules or grammar. Formal languages are closely tied to automata because each type of automaton recognizes a specific class of formal languages. Regular languages are recognized by finite automata, context-free languages by pushdown automata, and recursively enumerable languages by Turing machines.

This relationship allows us to use automata as tools for analyzing and manipulating formal languages. For example, designing a compiler requires specifying the formal language of the programming language and then building an automaton (often a combination of several automata) to parse and interpret the code.

Regular Expressions

Regular expressions are a powerful tool for defining and manipulating regular languages. They provide a concise way to describe patterns in strings. They’re used extensively in text processing, searching, and pattern matching in various programming languages and tools. For example, the regular expression `^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$` is commonly used as a first-pass check on email addresses: it accepts strings of the form local-part, `@`, domain, and a top-level domain of at least two letters, which approximates (but does not fully implement) the official address syntax.

This illustrates how regular expressions effectively capture patterns within strings. The flexibility and conciseness of regular expressions make them invaluable for practical applications involving string manipulation.
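
As a quick illustration, the email pattern can be tried with Python's standard `re` module. The pattern is written here with its `{2,}` quantifier, which requires a top-level domain of at least two letters; the helper name `looks_like_email` is an assumption made for this sketch.

```python
import re

# Simplified email pattern: local part, "@", domain, dot, 2+ letter TLD.
EMAIL_PATTERN = re.compile(r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$")


def looks_like_email(s):
    """Return True if s matches the simplified email pattern."""
    return EMAIL_PATTERN.match(s) is not None


for candidate in ["alice@example.com", "alice@example", "a@b.c", "not an email"]:
    print(candidate, looks_like_email(candidate))
```

A match only says the string has the expected shape; it does not show that the address exists or can receive mail.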

Computability and Complexity

The realm of computer science extends beyond simply writing code; it delves into the fundamental limits of what computers can and cannot do. This involves exploring computability, which examines what problems are solvable by computers, and complexity, which investigates how efficiently these problems can be solved. Understanding these concepts is crucial for designing effective and efficient algorithms.

Computability and its Limitations

Computability, at its core, is about determining whether a problem can be solved algorithmically. A formal definition often uses the Turing machine model: a problem is computable if there exists a Turing machine that halts and produces the correct output for all valid inputs. The Church-Turing thesis, a widely accepted hypothesis, states that any function computable by a reasonable model of computation is also computable by a Turing machine.

This implies a universal standard for computability. However, not all functions are computable. A prime example is the halting problem, which asks whether a given program will halt (finish executing) or run indefinitely. It’s been proven that no algorithm can solve the halting problem for all possible programs and inputs. This highlights a fundamental limitation: some problems are inherently unsolvable by any computer.

Partial functions, which may not produce an output for all inputs (like division by zero), are relevant because even for computable problems, algorithms might not provide a solution for every possible input. These limitations directly impact practical applications, as certain tasks are simply beyond the reach of even the most powerful computers.

Decidable vs. Undecidable Problems

A decidable problem is one for which an algorithm exists that can determine its solution for all possible inputs; the algorithm will always halt with a definitive yes or no answer. Conversely, an undecidable problem lacks such an algorithm; no algorithm can correctly solve it for all inputs. Determining if a number is prime is a decidable problem; efficient algorithms exist to solve it.

The halting problem, as previously mentioned, is undecidable. Another example of a decidable problem is checking if a graph is connected. An undecidable problem besides the halting problem is determining whether two arbitrary Turing machines are equivalent (do they produce the same output for all inputs?).

| Feature | Decidable Problem | Undecidable Problem |
|---|---|---|
| Definition | An algorithm exists that gives the correct answer for every input. | No algorithm gives the correct answer for every input. |
| Halting Behavior | The deciding algorithm always halts with a yes or no answer | Any candidate algorithm must run forever or answer incorrectly on some inputs |
| Example | Determining if a number is prime. | The Halting Problem |

Computational Complexity Classes (P, NP, NP-complete)

Complexity theory focuses on the resources (primarily time and space) required to solve a problem. The class P contains problems solvable in polynomial time by a deterministic algorithm; the runtime grows polynomially with the input size. The class NP encompasses problems whose solutions can be *verified* in polynomial time; if someone provides a potential solution, we can check its correctness quickly. The P vs. NP problem is a central unsolved question in computer science: does P = NP? In other words, can every problem whose solution can be quickly verified also be quickly solved? Most computer scientists believe P ≠ NP. NP-complete problems are the hardest problems in NP; if any NP-complete problem can be solved in polynomial time, then all problems in NP can be. NP-hard problems are at least as hard as the hardest problems in NP; they may or may not be in NP themselves.
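
The gap between verifying and solving can be made concrete with Boolean satisfiability. In the sketch below, `satisfies` checks a proposed assignment in time linear in the size of the formula, while `brute_force_sat` tries all 2^n assignments. The clause encoding (integer `k` for variable k, `-k` for its negation) and both function names are assumptions made for the example.

```python
from itertools import product


def satisfies(clauses, assignment):
    """Verify a truth assignment against a formula in conjunctive normal form.

    clauses: list of clauses; each clause is a list of non-zero integers,
             where k stands for variable k and -k for its negation.
    assignment: dict mapping each variable number to True or False.
    """
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def brute_force_sat(clauses, num_vars):
    """Search all 2**num_vars assignments; return one that works, or None."""
    for values in product([False, True], repeat=num_vars):
        assignment = dict(zip(range(1, num_vars + 1), values))
        if satisfies(clauses, assignment):
            return assignment
    return None


# (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
formula = [[1, -2], [2, 3], [-1, -3]]
print(satisfies(formula, {1: True, 2: True, 3: False}))  # True
print(brute_force_sat(formula, 3))
```

Checking one candidate is cheap; the open question is whether the exponential search can always be replaced by a polynomial-time method.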

Examples of NP-complete Problems and Their Significance

Several notorious problems belong to the NP-complete class. The Boolean satisfiability problem (SAT) determines if there exists an assignment of truth values to variables that satisfies a given Boolean formula. The traveling salesman problem seeks the shortest route visiting all cities and returning to the origin. The knapsack problem involves selecting a subset of items with maximum total value within a weight limit. Strictly speaking, it is the decision versions of the latter two (for example, “is there a tour of length at most k?”) that are NP-complete; the optimization versions are NP-hard.

These problems have broad practical applications in areas like logistics, circuit design, and resource allocation. While no known polynomial-time algorithms exist for these problems, approximation algorithms and heuristics are used to find near-optimal solutions within reasonable timeframes. For example, greedy algorithms or genetic algorithms are commonly used to find good solutions to the traveling salesman problem, even though they may not be guaranteed to find the absolute best solution.
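
A greedy heuristic of the kind mentioned above is the nearest-neighbor rule: from the current city, always travel to the closest unvisited one. The sketch below assumes cities are points in the plane with Euclidean distances; the function names are illustrative, and the tour it returns is not guaranteed to be optimal.

```python
import math


def tour_length(points, order):
    """Total length of the closed tour that visits points in the given order."""
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def nearest_neighbor_tour(points, start=0):
    """Greedy heuristic: repeatedly move to the closest unvisited city."""
    unvisited = set(range(len(points))) - {start}
    tour = [start]
    while unvisited:
        last = points[tour[-1]]
        # Ties are broken by the smaller city index to keep results repeatable.
        nxt = min(unvisited, key=lambda i: (math.dist(last, points[i]), i))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


cities = [(0, 0), (0, 1), (1, 1), (1, 0)]
tour = nearest_neighbor_tour(cities)
print(tour, tour_length(cities, tour))  # [0, 1, 2, 3] 4.0
```

The heuristic runs in O(n²) time, compared with the factorial number of tours an exhaustive search would examine, which is the trade-off between speed and optimality described above.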

Cryptography and Security

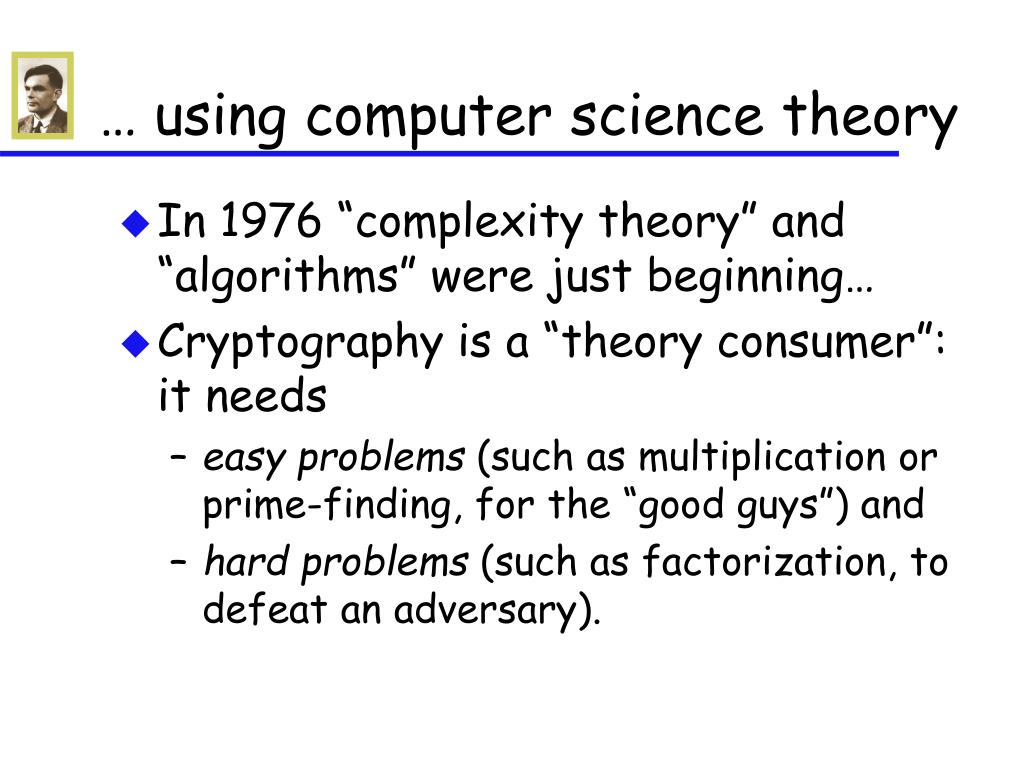

Cryptography, the art of secure communication in the presence of adversaries, is deeply intertwined with computer science theory. Its development and application rely heavily on mathematical foundations and computational complexity analysis, making it a fascinating and crucial area of study in the digital age. This section delves into the theoretical underpinnings of cryptography, explores various techniques, examines contemporary challenges, and presents a simplified encryption algorithm for illustrative purposes.

The Role of Computer Science Theory in Cryptography

Computer science theory plays a pivotal role in shaping the landscape of cryptography. Number theory, with its focus on prime numbers and modular arithmetic, forms the bedrock of many public-key cryptosystems. For instance, the RSA algorithm relies on the difficulty of factoring large numbers into their prime components. Abstract algebra, specifically the study of groups, rings, and fields, provides the mathematical structures for designing and analyzing cryptographic algorithms, ensuring their security and efficiency.

Computational complexity theory dictates the selection of secure cryptographic primitives by identifying problems that are computationally infeasible to solve within a reasonable timeframe, even with significant computing resources. A problem is deemed “computationally infeasible” if the time or resources required to solve it grow exponentially with the input size, making it practically impossible to crack within a realistic timeframe.

Information theory, through Shannon’s work on perfect secrecy, establishes fundamental limits on cryptographic security: a cipher can be unconditionally secure only if the key carries at least as much uncertainty as the message, as in the one-time pad. Symmetric-key cryptography, like AES, relies on a shared secret key for both encryption and decryption, offering high speed but requiring secure key exchange. In contrast, asymmetric-key cryptography, exemplified by RSA, uses separate public and private keys, enabling secure communication without prior key exchange, but typically at a slower speed.

Cryptographic Techniques and Underlying Principles

Several cryptographic techniques are employed to achieve data security. Symmetric-key cryptography uses the same key for encryption and decryption. AES (Advanced Encryption Standard), DES (Data Encryption Standard), and 3DES (Triple DES) are prominent examples. They utilize various modes of operation, such as Cipher Block Chaining (CBC), Counter (CTR), and Galois/Counter Mode (GCM), to enhance security.

| Algorithm | Key Size (bits) | Block Size (bits) | Security Strength |
|---|---|---|---|
| AES | 128, 192, 256 | 128 | High |
| DES | 56 | 64 | Low (deprecated) |
| 3DES | 112 or 168 | 64 | Medium (legacy; the 64-bit block size is a known weakness) |

Asymmetric-key cryptography employs separate keys for encryption (public key) and decryption (private key). RSA (Rivest-Shamir-Adleman) relies on the difficulty of factoring large numbers. Elliptic Curve Cryptography (ECC) uses elliptic curves over finite fields. Diffie-Hellman key exchange allows two parties to establish a shared secret key over an insecure channel.

| Algorithm | Mathematical Principle |
|---|---|
| RSA | Number theory (factoring large numbers) |
| ECC | Elliptic curve arithmetic over finite fields |
| Diffie-Hellman | Discrete logarithm problem |
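
The Diffie-Hellman exchange is short enough to sketch with modular exponentiation. The parameters below (modulus 23, generator 5) are a textbook toy example chosen so the arithmetic can be checked by hand; they offer no security, and real deployments use standardized groups with far larger primes. The function names are assumptions made for this illustration.

```python
import secrets

# Toy public parameters, for illustration only.
P = 23  # prime modulus
G = 5   # generator (a primitive root modulo 23)


def public_value(private):
    """Value a party sends over the insecure channel: G^private mod P."""
    return pow(G, private, P)


def shared_secret(their_public, my_private):
    """Secret each party derives locally: their_public^my_private mod P."""
    return pow(their_public, my_private, P)


# Each party picks a random private exponent in [1, P - 2].
alice_private = secrets.randbelow(P - 2) + 1
bob_private = secrets.randbelow(P - 2) + 1

alice_public = public_value(alice_private)
bob_public = public_value(bob_private)

# Both sides arrive at the same value without ever transmitting it.
assert shared_secret(bob_public, alice_private) == shared_secret(alice_public, bob_private)
```

An eavesdropper sees only `P`, `G`, and the two public values; recovering a private exponent from them is the discrete logarithm problem, which is easy for a 5-bit modulus but believed infeasible at realistic sizes.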

Hash functions, such as SHA-256, SHA-3, and MD5, produce fixed-size outputs (hashes) from variable-size inputs. Collision resistance ensures that it’s computationally infeasible to find two different inputs that produce the same hash. Pre-image resistance guarantees that it’s difficult to find an input that produces a given hash.

| Algorithm | Strengths | Weaknesses |
|---|---|---|
| SHA-256 | Widely used, considered secure | Susceptible to length extension attacks when used naively for message authentication (avoided by using HMAC or SHA-3) |
| SHA-3 | Designed to be resistant to various attacks, including length extension | Relatively new, less widely deployed |
| MD5 | Fast | Cryptographically broken, not recommended for security-sensitive applications |
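
The fixed-size output property is easy to observe with Python's standard `hashlib` module. The helper name `sha256_hex` is an assumption made for this small sketch.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as 64 hexadecimal characters."""
    return hashlib.sha256(data).hexdigest()


short_digest = sha256_hex(b"hello")
long_digest = sha256_hex(b"hello" * 10_000)
near_digest = sha256_hex(b"hellp")  # differs from b"hello" in one character

# Inputs of very different sizes still give 256-bit (64 hex digit) outputs.
print(len(short_digest), len(long_digest))
# A one-character change in the input yields an unrelated-looking digest.
print(short_digest)
print(near_digest)
```

Because similar inputs produce digests with no visible relationship, a digest reveals nothing obvious about how close two inputs were, which is part of what makes hashes useful for integrity checks.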

Digital signatures use public-key cryptography to verify the authenticity and integrity of digital messages. The sender signs a message using their private key, and the recipient verifies the signature using the sender’s public key.

Challenges in Ensuring Data Security

Ensuring data security in modern computing systems presents significant challenges. A sufficiently large quantum computer would threaten current public-key techniques, since Shor’s algorithm could efficiently solve problems now considered computationally infeasible, such as factoring large numbers. Network protocols like TLS/SSL and SSH, while vital for secure communication, are vulnerable to various attacks if not properly implemented and maintained. Side-channel attacks, such as timing attacks and power analysis, exploit information leaked during cryptographic operations.

Secure coding practices are crucial for preventing vulnerabilities, reducing the attack surface, and mitigating the impact of potential exploits.

A Simple Encryption Algorithm: Keyword-Based Substitution Cipher

This algorithm uses a keyword to create a substitution table for a simple substitution cipher.

1. Key Generation

Choose a keyword (e.g., “KEYWORD”). Remove duplicate letters.

2. Table Creation

Write the keyword, followed by the remaining letters of the alphabet in order, omitting those already in the keyword. This forms the substitution table.

3. Encryption

Replace each letter in the plaintext with its corresponding letter from the substitution table.

4. Decryption

Reverse the process: look up each ciphertext letter in the substitution table and replace it with the letter at the same position in the standard alphabet.

Example:

- Substitution Table: K E Y W O R D A B C F G H I J L M N P Q S T U V X Z
- Plaintext: HELLO
- Ciphertext: AOGGJ

This cipher is vulnerable to frequency analysis and known-plaintext attacks. Its security can be improved by incorporating a keystream or using a more complex substitution scheme.
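
The four steps can be sketched in a few lines of Python. This is a minimal illustration using the substitution table from the example, which corresponds to the keyword KEYWORD; the function names are hypothetical, input is converted to upper case, and characters outside A to Z pass through unchanged.

```python
import string


def substitution_table(keyword):
    """Keyword letters (duplicates removed) followed by the unused alphabet."""
    table = []
    for ch in keyword.upper() + string.ascii_uppercase:
        if ch in string.ascii_uppercase and ch not in table:
            table.append(ch)
    return "".join(table)


def encrypt(plaintext, keyword):
    """Replace each letter with the table letter at the same alphabet position."""
    mapping = str.maketrans(string.ascii_uppercase, substitution_table(keyword))
    return plaintext.upper().translate(mapping)


def decrypt(ciphertext, keyword):
    """Invert the substitution by mapping table letters back to the alphabet."""
    mapping = str.maketrans(substitution_table(keyword), string.ascii_uppercase)
    return ciphertext.upper().translate(mapping)


print(substitution_table("KEYWORD"))  # KEYWORDABCFGHIJLMNPQSTUVXZ
print(encrypt("HELLO", "KEYWORD"))    # AOGGJ
print(decrypt("AOGGJ", "KEYWORD"))    # HELLO
```

Note that both occurrences of L map to G, so letter frequencies in the plaintext survive encryption, which is why frequency analysis breaks this cipher.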

Security Implications of Cloud-Based vs. On-Premise Solutions

Cloud-based solutions offer scalability and cost-effectiveness but raise concerns about data breaches, especially concerning third-party access and data sovereignty. On-premise solutions offer greater control but require significant investment in infrastructure and security expertise. Regulatory compliance, such as GDPR and HIPAA, adds another layer of complexity, demanding specific security controls regardless of the chosen solution. Access control mechanisms must be robust in both scenarios, with strong authentication and authorization policies.

Best practices for cloud security include data encryption at rest and in transit, robust access control lists, regular security audits, and proactive threat monitoring. For on-premise solutions, physical security, regular software updates, intrusion detection systems, and comprehensive security policies are crucial. The choice between cloud and on-premise solutions depends on the specific needs of an organization, weighing the trade-offs between cost, security, and control.

Database Theory

Database theory forms the bedrock of how we manage and interact with vast amounts of information. Understanding its core principles is crucial for anyone working with data, from designing efficient applications to optimizing complex systems. This section delves into the fundamental concepts, models, and challenges inherent in database management.

Relational Database Theory Fundamentals

Relational database theory centers around the concept of representing data in tables with rows (records) and columns (attributes). These tables are interconnected through relationships, enabling efficient data retrieval and manipulation. The relational model, championed by E.F. Codd, emphasizes data integrity and consistency through constraints like primary and foreign keys. These keys enforce referential integrity, ensuring that relationships between tables are accurately maintained and preventing inconsistencies. Normalization, a process of organizing data to reduce redundancy and improve data integrity, is a key aspect of relational database design.

For example, separating customer information from order details into distinct tables prevents data duplication and simplifies updates.

Database Models and Applications

Various database models exist beyond the relational model, each with specific strengths and weaknesses. The relational model, while dominant, is not always the best fit for all applications. NoSQL databases, for example, offer flexibility and scalability advantages when dealing with unstructured or semi-structured data. These are often employed in applications requiring high availability and horizontal scalability, such as social media platforms or large-scale e-commerce systems.

Graph databases, on the other hand, excel at representing and querying relationships between entities, making them ideal for social networks or recommendation systems. Document databases are well-suited for storing and retrieving JSON or XML documents, often used in content management systems.

Relational Database Schema Design

Designing a relational database schema involves carefully planning the tables, attributes, and relationships to accurately reflect the data requirements of an application. This process typically begins with identifying entities and their attributes, then establishing relationships between entities based on business rules. Consider a simple e-commerce system. Entities might include Customers, Products, and Orders. Attributes for Customers could be CustomerID, Name, Address, etc.

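Relationships would link Customers to Orders (one customer can place many orders) and Orders to Products (one order can contain many products, and one product can appear in many orders). Because the second relationship is many-to-many, it is normally implemented with a junction table that holds one row per order and product pair. Properly designing the schema ensures data integrity, efficient querying, and ease of maintenance. Key considerations include normalization to minimize redundancy and selecting appropriate data types for each attribute.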
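
The e-commerce schema described above might look as follows in SQLite, accessed through Python's standard `sqlite3` module. Only `CustomerID`, `Name`, and `Address` come from the text; every other column, the `OrderItems` junction table linking orders to products, and the helper `open_shop_db` are assumptions made for this sketch.

```python
import sqlite3

SCHEMA = """
CREATE TABLE Customers (
    CustomerID INTEGER PRIMARY KEY,
    Name       TEXT NOT NULL,
    Address    TEXT
);
CREATE TABLE Products (
    ProductID INTEGER PRIMARY KEY,
    Name      TEXT NOT NULL,
    Price     REAL NOT NULL
);
CREATE TABLE Orders (
    OrderID    INTEGER PRIMARY KEY,
    CustomerID INTEGER NOT NULL REFERENCES Customers(CustomerID)
);
-- Junction table: one row per (order, product) pair.
CREATE TABLE OrderItems (
    OrderID   INTEGER NOT NULL REFERENCES Orders(OrderID),
    ProductID INTEGER NOT NULL REFERENCES Products(ProductID),
    Quantity  INTEGER NOT NULL,
    PRIMARY KEY (OrderID, ProductID)
);
"""


def open_shop_db():
    """Create an in-memory database with foreign-key enforcement enabled."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves this off by default
    conn.executescript(SCHEMA)
    return conn


conn = open_shop_db()
conn.execute("INSERT INTO Customers VALUES (1, 'Ada', '1 Main St')")
conn.execute("INSERT INTO Products VALUES (10, 'Keyboard', 25.0)")
conn.execute("INSERT INTO Orders VALUES (100, 1)")
conn.execute("INSERT INTO OrderItems VALUES (100, 10, 2)")
```

With foreign keys enforced, an attempt to insert an order for a customer that does not exist raises `sqlite3.IntegrityError`, which is the referential integrity guarantee in action.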

Challenges of Managing Large Datasets

Managing large datasets presents significant challenges. Performance becomes a major concern as the volume of data grows. Efficient query processing requires careful indexing and optimization techniques. Data warehousing and distributed database systems are often employed to handle the scale and complexity of big data. Furthermore, ensuring data consistency and availability across multiple nodes in a distributed system requires sophisticated techniques like replication and consensus algorithms.

Data cleaning and preprocessing also become increasingly crucial, as large datasets often contain inconsistencies and errors. Real-world examples include large-scale data analytics performed by companies like Google or Amazon, where managing petabytes of data requires highly specialized infrastructure and algorithms.

Parallel and Distributed Computing

Parallel and distributed computing represent a fundamental shift in how we approach computationally intensive problems. Instead of relying on a single, powerful processor, these approaches leverage multiple processors working concurrently to achieve significant speedups. This allows us to tackle challenges previously deemed intractable, opening doors to breakthroughs in various fields. However, this power comes with its own set of complexities, including managing data distribution, ensuring fault tolerance, and designing efficient parallel algorithms.

Challenges and Opportunities

Data partitioning and load balancing are crucial in parallel computing. Uneven distribution leads to performance bottlenecks, where some processors are idle while others are overloaded. For instance, consider processing a large dataset for image recognition. If the data isn’t evenly split, some processors finish quickly while others are bogged down. Because the job completes only when the slowest processor finishes, the overall slowdown grows with the imbalance; a 30-50% reduction in processing speed is plausible for a moderately skewed split.
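The effect of imbalance can be sketched with a toy cost model. It assumes every item costs the same to process and that all workers start together; the chunk sizes are made up for illustration.

```python
def makespan(chunk_sizes, cost_per_item=1.0):
    """Parallel run time: the job ends when the slowest worker finishes."""
    return max(size * cost_per_item for size in chunk_sizes)


def split_evenly(total_items, workers):
    """Give each worker the same number of items, spreading any remainder."""
    base, extra = divmod(total_items, workers)
    return [base + (1 if i < extra else 0) for i in range(workers)]


balanced = split_evenly(1000, 4)   # four equal chunks of 250 items
skewed = [550, 150, 150, 150]      # hypothetical uneven split of the same 1000 items
slowdown = makespan(skewed) / makespan(balanced)
```

Here the skewed split takes 2.2 times as long as the balanced one, even though both process the same total amount of data.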

Opportunities abound, however. Genomics, for example, benefits immensely from parallel processing for analyzing massive genomic datasets, accelerating the identification of disease genes and personalized medicine development. Weather forecasting models, often involving complex simulations, leverage distributed computing to process vast amounts of atmospheric data, leading to more accurate and timely predictions. Similarly, financial modeling, which requires handling massive datasets and complex calculations, sees substantial acceleration through parallel and distributed computing techniques.

The ability to run sophisticated risk assessments and portfolio optimizations much faster gives financial institutions a significant competitive edge.

Fault tolerance presents different challenges in parallel versus distributed systems. In tightly coupled parallel systems, a single node failure can often cripple the entire computation. Distributed systems are typically designed with redundancy, allowing computations to continue despite some node failures. Common strategies include data replication and checkpointing.

Parallel Programming Paradigms and Models

Shared memory and message passing are the two dominant parallel programming models. Shared memory involves multiple processors accessing a common memory space, which simplifies data sharing but makes managing concurrent access more complex. Message passing relies on processors communicating through explicit message exchanges, which scales better but increases programming complexity. Consider matrix multiplication:

Shared Memory (pseudocode):

```
for i = 0 to n-1            // iterations of i are independent and can run on different processors
  for j = 0 to n-1
    c[i][j] = 0
    for k = 0 to n-1
      c[i][j] += a[i][k] * b[k][j]
```

Message-Passing (pseudocode):

```
// Distribute matrix partitions to processors
// Processors compute their partition
// Processors exchange results to assemble final matrix
```

Three key parallel programming paradigms are data parallelism, task parallelism, and pipeline parallelism. Data parallelism focuses on applying the same operation to different data elements concurrently (e.g., image processing). Task parallelism involves dividing a problem into independent tasks executed concurrently (e.g., searching a database). Pipeline parallelism breaks a task into sequential stages, with each stage processed concurrently on different data elements (e.g., video encoding).

| Paradigm | Description | Example Application | Suitability |
|---|---|---|---|
| Data Parallelism | Applying the same operation to multiple data elements simultaneously. | Image processing (applying a filter to each pixel) | Embarrassingly parallel problems; highly suitable for GPUs. |
| Task Parallelism | Dividing a problem into independent tasks. | Web server handling multiple requests. | Problems with independent sub-tasks; good for multi-core CPUs. |
| Pipeline Parallelism | Breaking a task into stages, processing each stage concurrently. | Video encoding (encoding, compression, and streaming) | Problems with sequential stages; suitable for specialized hardware. |
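Data parallelism applied to matrix multiplication can be sketched as follows: the rows of the first matrix are partitioned and each partition is multiplied independently. This is a minimal sketch using `concurrent.futures.ThreadPoolExecutor` from the standard library; the function names are hypothetical, and in CPython threads illustrate the structure rather than deliver real CPU speedups because of the global interpreter lock.

```python
from concurrent.futures import ThreadPoolExecutor


def multiply_rows(a_rows, b):
    """Multiply one block of rows of A by B (same operation, different data)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols]
            for row in a_rows]


def parallel_matmul(a, b, workers=2):
    """Row-partitioned matrix product; assumes a is non-empty and shapes match."""
    step = max(1, -(-len(a) // workers))          # ceiling division
    blocks = [a[i:i + step] for i in range(0, len(a), step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in submission order, so blocks stay aligned.
        parts = list(pool.map(multiply_rows, blocks, [b] * len(blocks)))
    return [row for part in parts for row in part]
```

Swapping the thread pool for a process pool or a GPU kernel changes where the work runs, but the partition, compute, and reassemble structure stays the same.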


Distributed Consensus

Distributed consensus ensures agreement among multiple processors in a distributed system, which is vital for data consistency. Paxos is a well-known algorithm for achieving this. It involves multiple rounds of proposal and acceptance, ensuring a single value is chosen even with node failures. A simplified flowchart might show: Proposer sends a prepare request with a proposal number, Acceptors respond with promises, Proposer sends accept requests carrying a value, Acceptors accept the value.

Network partitions and Byzantine failures (malicious nodes) pose significant challenges. Paxos tolerates crashed nodes and network partitions by requiring a majority quorum of acceptors to agree, so that any two quorums share at least one acceptor. It does not tolerate Byzantine behavior, which calls for Byzantine fault-tolerant protocols such as PBFT. Raft, another consensus protocol, simplifies the process by introducing roles like leader, follower, and candidate, making it easier to understand and implement.
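The prepare/promise and accept phases can be sketched as a small in-memory simulation of single-decree Paxos. This is a simplified sketch, not a production protocol: calls are synchronous, there is no networking or persistence, and the class and function names are hypothetical.

```python
class Acceptor:
    def __init__(self):
        self.promised = 0      # highest proposal number promised so far
        self.accepted = None   # (number, value) of the last accepted proposal

    def prepare(self, n):
        """Phase 1: promise to ignore proposals numbered below n."""
        if n > self.promised:
            self.promised = n
            return True, self.accepted
        return False, None

    def accept(self, n, value):
        """Phase 2: accept unless a higher-numbered promise was made."""
        if n >= self.promised:
            self.promised = n
            self.accepted = (n, value)
            return True
        return False


def propose(acceptors, n, value):
    """Run one proposal round; return the chosen value, or None on failure."""
    quorum = len(acceptors) // 2 + 1
    replies = [a.prepare(n) for a in acceptors]
    promises = [acc for ok, acc in replies if ok]
    if len(promises) < quorum:
        return None
    # If some acceptor already accepted a value, adopt the one with the
    # highest proposal number instead of our own value.
    prior = [acc for acc in promises if acc is not None]
    if prior:
        value = max(prior, key=lambda acc: acc[0])[1]
    accepted = sum(a.accept(n, value) for a in acceptors)
    return value if accepted >= quorum else None
```

With three acceptors, a first proposal `propose(acceptors, 1, "A")` chooses `"A"`; a later `propose(acceptors, 2, "B")` also returns `"A"`, because the proposer must adopt the value already accepted by a quorum.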

Real-World Applications

Google File System (GFS) is a large-scale distributed file system employing parallel and distributed computing techniques. Its architecture involves a single master server managing metadata and many chunkservers storing data in parallel. A diagram would show the master server coordinating access to data chunks distributed across multiple chunkservers. Financial institutions utilize parallel and distributed computing for high-frequency trading, achieving millisecond response times for executing trades.

Healthcare uses parallel computing for medical image analysis, accelerating diagnosis and treatment planning. Ethical considerations include data privacy—ensuring data security in distributed systems—and energy consumption—minimizing the environmental impact of large-scale deployments. Strategies include employing energy-efficient hardware and algorithms, and implementing robust data encryption and access control mechanisms.

Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) have rapidly evolved from theoretical concepts to transformative technologies impacting numerous aspects of modern life. Understanding their theoretical underpinnings is crucial to harnessing their potential responsibly and effectively. This section delves into the foundational principles, explores key concepts, and examines the limitations and ethical implications of these powerful technologies.

The theoretical foundations of AI and ML are rooted in several disciplines, including mathematics, statistics, computer science, and cognitive science. Probabilistic models, statistical inference, and optimization techniques form the core mathematical framework. Algorithms are designed to mimic aspects of human intelligence, such as learning, reasoning, and problem-solving. Different paradigms, like symbolic AI (rule-based systems) and connectionist AI (neural networks), offer distinct approaches to achieving these goals. The field continually evolves, driven by advancements in computing power and the availability of massive datasets.

Learning, Reasoning, and Decision-Making in AI Systems

Learning in AI involves acquiring knowledge or skills through experience, without explicit programming. Supervised learning uses labeled data to train models to predict outcomes; unsupervised learning identifies patterns in unlabeled data; and reinforcement learning involves agents learning through trial and error by interacting with an environment. Reasoning in AI systems involves using acquired knowledge to draw inferences and make deductions.

This can range from simple logical deductions to complex probabilistic reasoning under uncertainty. Decision-making in AI involves selecting the best course of action from a set of options, often using optimization techniques to maximize expected utility or minimize risk. For example, a self-driving car uses sensor data, learned models, and reasoning to make decisions about navigation and obstacle avoidance.

Limitations and Ethical Considerations of AI

Despite their remarkable capabilities, AI systems have limitations. They can be biased if trained on biased data, leading to unfair or discriminatory outcomes. The “black box” nature of some AI models, particularly deep neural networks, makes it difficult to understand their decision-making processes, raising concerns about transparency and accountability. Furthermore, the potential for misuse of AI in areas such as autonomous weapons systems and surveillance raises significant ethical concerns.

Robust mechanisms for fairness, transparency, and accountability are essential to mitigate these risks. For instance, algorithmic bias in loan applications can lead to discriminatory lending practices, disproportionately affecting certain demographics.

Machine Learning Algorithms and Their Applications

Several machine learning algorithms are widely used across various applications. The choice of algorithm depends on the specific problem and the nature of the data.

The following table summarizes some key algorithms and their typical applications:

| Algorithm | Description | Applications |
|---|---|---|
| Linear Regression | Predicts a continuous output variable based on a linear relationship with input variables. | Sales forecasting, stock price prediction. |
| Logistic Regression | Predicts a categorical output variable (e.g., binary classification). | Spam detection, medical diagnosis. |
| Support Vector Machines (SVM) | Finds an optimal hyperplane to separate data points into different classes. | Image classification, text categorization. |
| Decision Trees | Builds a tree-like model to classify or regress data based on a series of decisions. | Risk assessment, customer segmentation. |
| Random Forest | An ensemble method that combines multiple decision trees to improve prediction accuracy. | Fraud detection, medical diagnosis. |
| Neural Networks | A complex model inspired by the structure and function of the human brain. | Image recognition, natural language processing, speech recognition. |
| K-Means Clustering | Groups data points into clusters based on similarity. | Customer segmentation, anomaly detection. |
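To make the first row of the table concrete, here is a minimal sketch of linear regression with one input variable, fitted by closed-form least squares in plain Python. The function names and the sample data are made up for illustration; real projects would typically rely on a library.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one input variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx            # assumes the xs are not all identical
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    slope, intercept = model
    return slope * x + intercept


# Hypothetical data lying exactly on y = 2x + 1.
model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
```

On this data the fit recovers a slope of 2 and an intercept of 1, so `predict(model, 5)` gives 11.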

Programming Language Theory

Programming languages are the crucial link between human intentions and the execution capabilities of computers. Understanding their design principles, inherent paradigms, and the role of type systems is fundamental to crafting efficient, reliable, and maintainable software. This section delves into the core concepts of programming language theory.

Programming Language Design Principles

Effective programming languages are built upon several key design principles. Abstraction allows programmers to manage complexity by hiding implementation details and focusing on higher-level concepts. Orthogonality ensures that language features interact in predictable and consistent ways, minimizing unexpected side effects. Data types and type checking provide a framework for ensuring that operations are performed on compatible data, preventing many common programming errors. Readability, a crucial aspect of maintainability, emphasizes the clarity and understandability of the code.

Finally, efficiency, encompassing both compilation speed and runtime performance, is a constant consideration in language design. These principles, often intertwined and interdependent, guide the creation of languages suited to specific tasks and programming styles.

Programming Paradigms

Programming paradigms represent fundamental styles of programming. Imperative programming focuses on describing *how* a program achieves its goal through a sequence of commands that modify the program’s state. Object-oriented programming (OOP) organizes code around “objects” that encapsulate data and methods, promoting modularity and reusability through concepts like inheritance and polymorphism. Functional programming emphasizes the evaluation of mathematical functions and avoids mutable state, leading to more predictable and easier-to-reason-about code.

Each paradigm offers distinct advantages and is best suited for different types of problems. For example, imperative programming is well-suited for systems programming where precise control over hardware is necessary, while functional programming excels in areas requiring high concurrency and mathematical precision. OOP’s strength lies in its ability to manage complexity in large software projects.

Type Systems

Type systems are a critical component of programming languages, classifying data into different types (e.g., integer, float, string) and enforcing rules about how these types can interact. Static type systems perform type checking during compilation, catching type errors before runtime. Dynamic type systems perform type checking during execution, offering greater flexibility but potentially leading to runtime errors. Strong type systems enforce strict rules about type compatibility, reducing the likelihood of errors.

Weak type systems allow for more implicit type conversions, potentially leading to unexpected behavior. The choice of type system significantly impacts a language’s safety and performance characteristics. For instance, languages like Java and C# utilize static and strong type systems, contributing to their robustness, while languages like Python and JavaScript employ dynamic typing, offering more flexibility but requiring careful attention to avoid runtime errors.
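The difference between when types are checked and how strictly they are enforced can be illustrated in Python, which is dynamically typed but comparatively strong: types are checked only when an operation runs, and mixing `int` and `str` is not silently converted. The helper names below are hypothetical.

```python
def add(a, b):
    # No declared types: whether "+" is valid is decided at run time.
    return a + b


def try_add(a, b):
    """Return the result, or a description of the run-time type error."""
    try:
        return add(a, b)
    except TypeError as exc:
        return f"TypeError: {exc}"


int_sum = try_add(1, 2)        # integers add numerically
str_sum = try_add("1", "2")    # strings concatenate
mixed = try_add(1, "2")        # rejected at run time, no implicit conversion
```

A statically typed language would reject the mixed call before the program runs, while a weakly typed one might convert one operand implicitly and continue.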

Implementation of Programming Language Features

The implementation of programming language features involves translating the source code written by the programmer into a form that the computer can execute. This process can involve several stages, including lexical analysis (breaking the code into tokens), parsing (building a syntax tree), semantic analysis (checking for type errors and other semantic issues), and code generation (producing machine code or intermediate representation).
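The first of these stages, lexical analysis, can be sketched with the standard `re` module. The token categories and the tiny expression language here are assumptions chosen for illustration, not a real language's grammar.

```python
import re

# Hypothetical token categories for a tiny expression language.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("IDENT",  r"[A-Za-z_]\w*"),
    ("OP",     r"[+\-*/=()]"),
    ("SKIP",   r"\s+"),
    ("ERROR",  r"."),
]
TOKEN_RE = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source):
    """Break source text into (kind, text) tokens, dropping whitespace."""
    tokens = []
    for match in TOKEN_RE.finditer(source):
        kind, text = match.lastgroup, match.group()
        if kind == "SKIP":
            continue
        if kind == "ERROR":
            raise SyntaxError(
                f"unexpected character {text!r} at position {match.start()}")
        tokens.append((kind, text))
    return tokens
```

For example, `tokenize("total = price * 2")` yields an identifier, an operator, an identifier, an operator, and a number, which a parser would then assemble into a syntax tree.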

Consider the implementation of function calls. When a function is called, the program’s execution jumps to the function’s code, parameters are passed, and the function’s return value is handled. This involves managing the program stack and registers to store function parameters, local variables, and return addresses. Another example is the implementation of object-oriented features like inheritance.

This often involves creating a class hierarchy where subclasses inherit properties and methods from their superclasses, potentially overriding or extending existing functionality. This requires efficient mechanisms for resolving method calls and accessing inherited members.

Formal Verification

Formal verification is a mathematically rigorous approach to ensuring the correctness of software and hardware systems. Unlike testing, which only examines a limited set of inputs, formal verification aims to prove the correctness of a system for *all* possible inputs and behaviors. This provides a significantly higher level of confidence in the system’s reliability and security, especially crucial in safety-critical applications.

Formal verification employs various methods to analyze a system’s design and implementation.

These methods leverage mathematical logic and model-checking techniques to systematically explore the system’s state space and prove or disprove properties of interest. The ultimate goal is to guarantee that the system behaves as intended, avoiding unexpected failures or security vulnerabilities.

Model Checking

Model checking is a powerful automated technique used in formal verification. It involves building a formal model of the system under consideration, typically using a state-transition system or a similar representation. This model captures the system’s behavior and interactions with its environment. The model checker then systematically explores the state space of the model, verifying whether a specified property holds true in all reachable states.

If a violation of the property is found, the model checker provides a counterexample – a sequence of events leading to the violation – which aids in debugging and system improvement. For instance, a model checker could verify that a traffic light controller never allows conflicting signals to be active simultaneously.
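The traffic light example can be sketched as an explicit-state check: a breadth-first search visits every reachable state and returns a counterexample trace if the safety property fails. The two controllers below are hypothetical toy models, and real model checkers use far more sophisticated state-space techniques.

```python
from collections import deque


def check_safety(initial, successors, is_safe):
    """Explore all reachable states breadth-first.

    Returns None if every reachable state is safe, otherwise the list of
    states leading from the initial state to the violation.
    """
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not is_safe(state):
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


# Hypothetical controller; a state is (north_south, east_west).
GOOD = {
    ("green", "red"): [("yellow", "red")],
    ("yellow", "red"): [("red", "green")],
    ("red", "green"): [("red", "yellow")],
    ("red", "yellow"): [("green", "red")],
}
# Same controller with a deliberate bug: east-west turns green too early.
BUGGY = dict(GOOD)
BUGGY[("green", "red")] = [("yellow", "green")]


def no_conflict(state):
    return "red" in state   # at least one direction must show red


start = ("green", "red")
good_result = check_safety(start, lambda s: GOOD.get(s, []), no_conflict)
bad_result = check_safety(start, lambda s: BUGGY.get(s, []), no_conflict)
```

The correct controller yields `None`, while the buggy one yields the two-state trace ending in `("yellow", "green")`, which plays the role of the counterexample described above.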

Theorem Proving

Theorem proving, unlike model checking, is often an interactive process that relies on mathematical reasoning to prove the correctness of a system. It involves formulating the system’s properties as mathematical theorems and then using logical inference rules and axioms to derive a proof. Theorem proving is particularly well-suited for verifying complex systems or properties that are difficult to express in the formalisms used by model checkers.

This method requires significant expertise in formal logic and mathematical proof techniques. A classic example would be proving the correctness of a sorting algorithm using inductive reasoning.

Formal Verification Tools and Use Cases

Several tools support formal verification, each with its strengths and weaknesses. For example, SPIN is a widely used model checker for verifying concurrent systems, often used in the development of communication protocols. It excels at detecting deadlocks and race conditions. On the other hand, Coq is a powerful interactive theorem prover used for verifying complex algorithms and software components.

It’s been employed in the verification of critical parts of operating systems and compilers. Another prominent tool is Z3, a satisfiability modulo theories (SMT) solver frequently integrated into other formal verification tools to solve complex logical constraints encountered during the verification process. These tools are used in various domains, including hardware design (verifying the correctness of microprocessors), software engineering (verifying the absence of buffer overflows), and even in the financial industry (verifying the correctness of trading algorithms).

Information Theory

Information theory, at its core, is the mathematical study of information quantification, storage, and communication. It provides a framework for understanding the limits of data compression, reliable communication over noisy channels, and the fundamental nature of information itself. This field, pioneered by Claude Shannon, has profoundly impacted various disciplines, from computer science and telecommunications to biology and cryptography.

Fundamental Concepts of Information Theory

Entropy, mutual information, and channel capacity are foundational concepts in information theory. Entropy (H) measures the uncertainty or randomness of a random variable. For a discrete random variable $X$ with possible values $x_i$ and probabilities $p(x_i)$, entropy is defined as:

$$H(X) = -\sum_i p(x_i) \log_2 p(x_i)$$

This formula quantifies the average amount of information needed to describe the outcome of X. Mutual information (I(X;Y)) quantifies the amount of information that one random variable X reveals about another random variable Y. It’s calculated as

$$I(X;Y) = H(X) - H(X|Y) = H(Y) - H(Y|X)$$

where H(X|Y) is the conditional entropy of X given Y. Channel capacity (C) represents the maximum rate at which information can be reliably transmitted over a noisy channel. The precise formula depends on the channel model, but for a discrete memoryless channel it is the mutual information between input and output maximized over all input distributions, $C = \max_{p(x)} I(X;Y)$. Shannon’s source coding theorem states that the average code length per symbol can be made arbitrarily close to the source entropy but not smaller, providing a theoretical limit for lossless data compression.

Different information sources exist; a discrete memoryless source emits independent and identically distributed symbols, while a Markov source exhibits dependencies between successive symbols. Shannon’s theorems, while powerful, assume ideal conditions often not met in practice, such as perfect knowledge of the source statistics and error-free encoding/decoding.
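The entropy and mutual information definitions can be checked numerically with a short sketch. It uses the identity H(X|Y) = H(X,Y) - H(Y), so that I(X;Y) = H(X) + H(Y) - H(X,Y); the function names and the example distributions are assumptions for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mutual_information(joint):
    """I(X;Y) from a joint probability table joint[x][y]."""
    px = [sum(row) for row in joint]          # marginal of X
    py = [sum(col) for col in zip(*joint)]    # marginal of Y
    hxy = entropy([p for row in joint for p in row])
    return entropy(px) + entropy(py) - hxy


fair_coin = entropy([0.5, 0.5])                            # one bit
copied = mutual_information([[0.5, 0.0], [0.0, 0.5]])      # Y always equals X
independent = mutual_information([[0.25, 0.25], [0.25, 0.25]])
```

When Y is a copy of X, observing Y reveals the full bit of X; when the two are independent, the mutual information is zero.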

Information Theory and Data Compression

Huffman coding and Lempel-Ziv coding are prominent examples of data compression algorithms leveraging information theory. Huffman coding assigns shorter codes to more frequent symbols, directly reflecting the probability distribution of the source. Lempel-Ziv coding exploits redundancies in data by creating a dictionary of frequently occurring sequences. Lossless compression techniques, like Huffman and Lempel-Ziv, guarantee perfect reconstruction of the original data, while lossy compression techniques, such as JPEG and MP3, achieve higher compression ratios by discarding some information deemed less important.

The trade-off between compression ratio and computational complexity is crucial; algorithms achieving high compression might require significant processing power. Data distributions significantly influence compression efficiency. A highly skewed distribution (e.g., many repetitions of a single symbol) will compress much better than a uniform distribution. For example, consider compressing text (skewed distribution) versus random binary data (uniform distribution).

The text will have a much higher compression ratio.
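Huffman coding's link between symbol frequency and code length can be sketched with a priority queue from the standard `heapq` module. This is a minimal sketch that assumes non-empty input and builds the code table directly rather than an explicit tree; the function names are hypothetical.

```python
import heapq
from collections import Counter


def huffman_codes(text):
    """Return a {symbol: bitstring} prefix code for a non-empty text."""
    freq = Counter(text)
    if len(freq) == 1:                      # a single symbol still needs one bit
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, tie_breaker, {symbol: code_so_far}).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # two least frequent subtrees
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))
        counter += 1
    return heap[0][2]


def encode(text, codes):
    return "".join(codes[ch] for ch in text)
```

For the skewed input `"aaaabbc"`, the most frequent symbol gets a one-bit code and the whole string encodes to 10 bits, compared with 14 bits for a fixed two-bit code.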

Information Theory in Communication Systems

Channel coding is vital for reliable communication in the presence of noise and interference. It adds redundancy to the transmitted data to enable error detection and correction at the receiver. Hamming codes and Reed-Solomon codes are examples of channel coding techniques offering varying levels of error-correcting capability. Channel capacity, the maximum achievable reliable transmission rate, is intimately linked to the signal-to-noise ratio (SNR).

A higher SNR allows for higher channel capacity. The performance of communication systems is often assessed using metrics like bit error rate (BER) and signal-to-interference-plus-noise ratio (SINR). A graph of BER versus SNR would typically show an exponential decrease in BER as SNR increases, illustrating the improved reliability with better signal quality.
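For one common channel model, the band-limited additive white Gaussian noise channel, the link between capacity and SNR is given by the Shannon-Hartley theorem, C = B log2(1 + SNR). The sketch below assumes that model; the function name and the example bandwidth are illustrative.

```python
import math


def awgn_capacity(bandwidth_hz, snr_db):
    """Capacity in bits per second of an AWGN channel (Shannon-Hartley)."""
    snr_linear = 10 ** (snr_db / 10)     # convert decibels to a power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


# Hypothetical 1 MHz channel at several signal-to-noise ratios.
capacities = [awgn_capacity(1e6, snr_db) for snr_db in (0, 10, 20, 30)]
```

At 0 dB the signal and noise powers are equal and the capacity equals the bandwidth (1 Mbit/s here); each further increase in SNR raises the capacity, though only logarithmically.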

Applications of Information Theory

Information theory finds applications in diverse fields. In cryptography, it helps analyze the security of cryptosystems by quantifying the information leaked about the plaintext given the ciphertext. For instance, analyzing the entropy of a key determines its strength against brute-force attacks. In bioinformatics, information theory helps analyze biological sequences like DNA and protein sequences by identifying patterns and conserved regions, assisting in genomic analysis and phylogenetic studies.

For example, mutual information can be used to identify co-occurring patterns in DNA sequences. In machine learning, information theory principles aid feature selection by choosing features that maximize mutual information with the target variable, and model evaluation through metrics like cross-entropy. For example, minimizing cross-entropy is a common objective in training many machine learning models.

Network Theory

Network theory, a cornerstone of computer science, provides the mathematical framework for understanding and analyzing complex systems composed of interconnected entities. It’s not just about the internet; its principles underpin diverse fields, from social networks to biological systems. This exploration delves into the fundamental concepts, topologies, protocols, and applications of network theory.

Fundamental Concepts of Network Theory

Network theory uses graph theory to represent systems. A network is modeled as a graph, where entities are represented as *nodes* (or vertices) and the connections between them are *edges* (or arcs). A *path* is a sequence of edges connecting two nodes. A *cycle* is a path that starts and ends at the same node, without repeating edges. *Connectivity* refers to the ability to reach one node from another via a path. Graphs can be represented using an *adjacency matrix*, a square matrix where entry (i,j) indicates the presence (or weight) of an edge between node i and node j, or an *adjacency list*, where each node has a list of its neighbors.

For example, consider a simple network of four computers: A, B, C, and D. A is connected to B and C (edges AB and AC), B to C and D (edges BC and BD), and C to D (edge CD).

One shortest path from A to D is A-B-D; A-C-D is equally short. Numbering the nodes A to D as 1 to 4, the adjacency matrix has 1s at positions (1,2), (1,3), (2,3), (2,4), and (3,4). Because the connections are undirected, the matrix is symmetric, so the mirrored positions (2,1), (3,1), (3,2), (4,2), and (4,3) also hold 1s.
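The four-computer example can be written out in both representations, with a breadth-first search to find a shortest path. The function names are hypothetical, and the edges are treated as undirected.

```python
from collections import deque

NODES = ["A", "B", "C", "D"]
EDGES = [("A", "B"), ("A", "C"), ("B", "C"), ("B", "D"), ("C", "D")]


def adjacency_list(nodes, edges):
    adj = {n: [] for n in nodes}
    for u, v in edges:          # undirected: record both directions
        adj[u].append(v)
        adj[v].append(u)
    return adj


def adjacency_matrix(nodes, edges):
    index = {n: i for i, n in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for u, v in edges:
        matrix[index[u]][index[v]] = 1
        matrix[index[v]][index[u]] = 1
    return matrix


def shortest_path(adj, start, goal):
    """Breadth-first search; returns one shortest path or None."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for neighbor in adj[node]:
            if neighbor not in parent:
                parent[neighbor] = node
                queue.append(neighbor)
    return None
```

With neighbors visited in insertion order, `shortest_path(adjacency_list(NODES, EDGES), "A", "D")` returns `["A", "B", "D"]`. The adjacency list uses memory proportional to the number of edges, while the matrix offers constant-time edge lookups.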

Network Topologies and Their Properties

Different network topologies offer various advantages and disadvantages. The choice depends on factors such as cost, performance requirements, and scalability needs.

| Topology Name | Diagram | Advantages | Disadvantages | Scalability | Fault Tolerance |
|---|---|---|---|---|---|
| Star | Imagine a central node with several nodes branching out directly from it like spokes on a wheel. | Simple to implement, easy to manage, central point for monitoring. | Single point of failure, if the central node fails, the whole network fails. | Poor, adding nodes requires modifying the central node. | Low |
| Bus | Imagine a single cable (the bus) with all nodes connected to it. | Simple and inexpensive. | Single point of failure, if the bus fails, the whole network fails. Performance degrades with increased nodes. | Poor | Low |
| Ring | Imagine nodes connected in a closed loop. | Equal access to the network, simple routing. | Failure of one node can bring down the entire network. Adding or removing nodes can disrupt the network. | Poor | Low |
| Mesh | Imagine a network where multiple paths exist between nodes. | High reliability, multiple paths allow for redundancy. | Complex to implement and manage, expensive. | Good | High |
| Tree | Imagine a hierarchical structure with a root node and branches. | Hierarchical structure facilitates organization, scalable. | Can be susceptible to failures at higher levels of the hierarchy. | Good | Moderate |

The Role of Network Protocols in Communication

Network protocols govern how data is transmitted across a network. The TCP/IP model is a widely used layered architecture.

| Layer | Protocol | Function |
|---|---|---|
| Network Access Layer | Ethernet | Defines how data is physically transmitted over a network medium (e.g., cables, Wi-Fi). |
| Network Access Layer | Wi-Fi (802.11) | Provides wireless network access. |
| Internet Layer | IP (Internet Protocol) | Handles the addressing and routing of data packets across networks. |
| Internet Layer | ICMP (Internet Control Message Protocol) | Used for error reporting and network diagnostics (e.g., ping). |
| Transport Layer | TCP (Transmission Control Protocol) | Provides reliable, ordered, and error-checked delivery of data. |
| Transport Layer | UDP (User Datagram Protocol) | Provides connectionless, unreliable data transmission; suitable for applications where speed is prioritized over reliability. |

Real-World Network Applications

Network theory finds applications in diverse domains.

- The Internet: A massive interconnected network using a combination of topologies (including mesh and star at various levels) and protocols (TCP/IP). Its design emphasizes scalability, fault tolerance, and efficient data routing. Benefits include global connectivity and information sharing.

- Wireless Sensor Networks: These networks consist of small, low-power devices that collect and transmit data. They often employ star or tree topologies and utilize low-power protocols like Zigbee or 6LoWPAN. Benefits include environmental monitoring and industrial automation.

- Social Network Analysis: Social networks are analyzed using graph theory to understand relationships, influence, and information spread. Algorithms are used to identify communities, key influencers, and patterns of communication. The topology is typically a complex, dynamic graph. Benefits include targeted advertising, trend prediction, and understanding social dynamics.

Comparison of Network Topologies in a Geographically Distributed System

Consider a geographically distributed system requiring high-volume data transfer, such as a multinational corporation’s network. A mesh topology offers high bandwidth and fault tolerance due to redundant paths. However, its complexity and cost are significant. A star topology, with strategically placed central servers in different geographic locations, offers simpler management but may suffer from bottlenecks at central points and reduced fault tolerance if a central server fails.

The choice depends on the balance between cost, performance, and reliability requirements. For this scenario, a hybrid approach combining aspects of both topologies—a mesh of central servers interconnected with star-like connections to local offices—might be the most effective solution. This offers the benefits of redundancy inherent in a mesh while maintaining relative simplicity for local network management. While initial setup costs would be higher than a simple star topology, the increased fault tolerance and scalability make it a more robust and long-term cost-effective solution for a high-volume, geographically distributed system.

Python Code Snippet (NetworkX)

```python
# Import necessary libraries
import networkx as nx
import matplotlib.pyplot as plt

# Define parameters
num_nodes = 100
# m: number of edges each new node attaches to existing nodes.
# NetworkX requires 1 <= m < num_nodes.
edges_per_new_node = 2

# Generate a Barabási–Albert preferential-attachment graph
graph = nx.barabasi_albert_graph(num_nodes, edges_per_new_node)

# Visualize the graph
pos = nx.spring_layout(graph)  # positions for all nodes
nx.draw(graph, pos, with_labels=True, node_size=150, node_color="skyblue", font_size=8)
plt.title("Barabási–Albert Model")
plt.show()
```

Logic and Reasoning in Computer Science

Logic and reasoning are the bedrock upon which the entire edifice of computer science is built. From the design of efficient algorithms to the verification of complex software systems, the principles of formal logic provide the tools and frameworks for creating reliable and predictable computational systems. Without a firm grasp of logical structures and reasoning techniques, the field would be adrift in a sea of unpredictable and unreliable code.

The application of logic in computer science is multifaceted, extending far beyond simple “if-then” statements. It underpins the development of programming languages, the design of databases, and the creation of artificial intelligence systems. Understanding different logical systems and their inherent strengths and weaknesses is crucial for choosing the appropriate tools for a given task. The ability to reason effectively is paramount in problem-solving and algorithm design, enabling programmers to develop elegant and efficient solutions to complex computational challenges.

Furthermore, rigorous logical methods are employed in program verification to ensure the correctness and reliability of software systems, preventing costly errors and ensuring the safety of applications.

Propositional Logic and its Applications

Propositional logic, the simplest form of logic, deals with propositions – statements that can be either true or false. These propositions are combined using logical connectives such as AND, OR, and NOT to create more complex expressions. Truth tables are used to systematically determine the truth value of these expressions for all possible combinations of the input propositions.

Propositional logic finds application in digital circuit design, where logic gates (AND, OR, NOT gates) directly implement these logical connectives. For example, a simple AND gate outputs a high signal (true) only if both of its inputs are high (true); otherwise, it outputs a low signal (false). This forms the foundation of Boolean algebra, crucial in digital electronics and computer architecture.
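The truth-table procedure described above can be sketched in a few lines of Python. This is a minimal illustration only: the helper name `truth_table` and the example expression are assumptions made for this sketch, not part of any library.

```python
from itertools import product

def truth_table(expr, num_vars):
    """Return (inputs, result) rows for every combination of truth values."""
    rows = []
    for values in product([False, True], repeat=num_vars):
        rows.append((values, expr(*values)))
    return rows

def and_gate(p, q):
    """Behaves like an AND gate: true only when both inputs are true."""
    return p and q

def example(p, q):
    """Illustrative compound expression: (p AND q) OR (NOT p)."""
    return (p and q) or (not p)

for inputs, result in truth_table(example, 2):
    print(inputs, "->", result)
```

Because the table has 2^n rows for n propositions, this brute-force approach is only practical for small expressions.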

Predicate Logic and its Use in Knowledge Representation

Predicate logic extends propositional logic by introducing predicates, which represent properties or relationships. For example, the predicate “IsGreaterThan(x, y)” represents the relationship “x is greater than y.” Quantifiers, such as “for all” (∀) and “there exists” (∃), allow us to express statements about sets of objects. Predicate logic is widely used in knowledge representation and reasoning in artificial intelligence, enabling the creation of sophisticated systems capable of drawing inferences from large knowledge bases.

For example, a knowledge base containing the facts “All men are mortal” and “Socrates is a man” can use predicate logic to infer that “Socrates is mortal.”
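The Socrates inference can be mimicked with a very small forward-chaining loop. The sketch below is a simplification under stated assumptions: it handles only rules of the form “for all x, P(x) implies Q(x)” over one-argument predicates, and the names `facts`, `rules`, and `forward_chain` are hypothetical.

```python
# Facts are (predicate, subject) pairs, e.g. Man(Socrates).
facts = {("Man", "Socrates")}

# Each rule (P, Q) stands for: for all x, P(x) -> Q(x).
rules = [("Man", "Mortal")]

def forward_chain(facts, rules):
    """Apply the rules repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, subject in list(derived):
                new_fact = (conclusion, subject)
                if predicate == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived

print(("Mortal", "Socrates") in forward_chain(facts, rules))
```

Real knowledge-representation systems support multi-argument predicates, existential quantifiers, and unification, none of which this sketch attempts.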

Program Verification using Hoare Logic

Hoare logic provides a formal system for reasoning about the correctness of computer programs. It uses assertions, statements about the program’s state, to specify preconditions (conditions that must hold before a program segment executes) and postconditions (conditions that must hold after a program segment executes). Hoare triples, written as {P} C {Q}, denote that if precondition P is true before executing the program segment C, and C terminates, then postcondition Q will be true after execution.

This formal framework allows for rigorous mathematical proof of a program’s correctness, reducing the likelihood of runtime errors and improving software reliability. For example, a simple program segment that increments a counter could be verified using Hoare logic to guarantee that the counter’s value increases by one after execution.
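For the counter example, the triple is {counter = n} counter := counter + 1 {counter = n + 1}. By the assignment rule, substituting counter + 1 for counter in the postcondition gives counter + 1 = n + 1, which simplifies to the precondition counter = n. The Python sketch below mirrors that triple with runtime assertions; the function name `increment` is an assumption for illustration, and assertions only check individual runs rather than prove the triple.

```python
def increment(counter):
    """Command C: counter := counter + 1, annotated with its Hoare triple."""
    n = counter                  # logical variable naming the initial value
    assert counter == n          # precondition  P: counter == n
    counter = counter + 1        # command       C
    assert counter == n + 1      # postcondition Q: counter == n + 1
    return counter

print(increment(41))
```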

Deductive Reasoning and Algorithm Design

Deductive reasoning, the process of drawing logical conclusions from premises, plays a crucial role in algorithm design. By starting with known facts and applying logical rules, programmers can derive new facts and develop algorithms that solve specific problems. For example, the development of a sorting algorithm can be guided by deductive reasoning, starting with the goal of ordering elements and using logical rules to derive steps that achieve this goal.

This process helps establish that the algorithm is correct; its efficiency is then assessed separately through complexity analysis. Algorithms are, at their core, formalizations of logical reasoning steps designed to achieve a desired outcome.
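One common way to make this reasoning explicit is a loop invariant: a statement that holds before every iteration and, together with the loop’s exit condition, implies the goal. The sketch below uses insertion sort as an assumed example (the text above does not name a specific sorting algorithm) and checks the invariant at runtime.

```python
def insertion_sort(items):
    """Sort a list, asserting the invariant that underpins the correctness argument."""
    result = list(items)
    for i in range(1, len(result)):
        # Invariant: the prefix result[:i] is already sorted.
        assert all(result[k] <= result[k + 1] for k in range(i - 1))
        key = result[i]
        j = i - 1
        # Shift larger elements right to open a slot for key.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result

print(insertion_sort([3, 1, 2]))
```

When the loop finishes, the invariant covers the whole list, which is exactly the ordering goal the deduction started from.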

Quantum Computing Theory

Quantum computing represents a paradigm shift in computation, leveraging the bizarre yet powerful principles of quantum mechanics to solve problems intractable for even the most powerful classical computers. This leap into the quantum realm promises breakthroughs across various fields, from drug discovery to cryptography.

Fundamental Concepts of Quantum Computing

Quantum computing relies on three key concepts: superposition, entanglement, and quantum interference. Superposition allows a qubit to exist in a combination of 0 and 1 simultaneously, unlike a classical bit which is either 0 or 1. Entanglement links two or more qubits such that their fates are intertwined, regardless of the distance separating them. Quantum interference, similar to wave interference, allows for constructive and destructive interference of quantum amplitudes, influencing the outcome of computations.

Superposition: A qubit can be in a superposition state, represented as |ψ⟩ = α|0⟩ + β|1⟩, where α and β are complex amplitudes and |α|² + |β|² = 1. Imagine a coin spinning in the air – it’s neither heads nor tails until it lands.

Entanglement: Two entangled qubits share a combined state, like |Φ⟩ = (|00⟩ + |11⟩)/√2. Think of two coins magically linked: if one lands heads, the other always lands heads as well, instantly, no matter how far apart they are.

Quantum Interference: The probabilities of different computational paths interfere, leading to either amplification or cancellation. Imagine waves on the ocean; constructive interference creates larger waves, while destructive interference cancels them out.
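Superposition and interference can be illustrated numerically without any quantum library. The sketch below is a toy single-qubit simulation in plain Python; the names `ZERO`, `hadamard`, and `probabilities` are assumptions for this example. Applying the Hadamard gate once produces an equal superposition, and applying it a second time makes the two contributions to |1⟩ cancel while those to |0⟩ reinforce.

```python
import math

# A single-qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>.
ZERO = (1 + 0j, 0 + 0j)

def hadamard(state):
    """Apply the Hadamard gate H = (1/sqrt(2)) * [[1, 1], [1, -1]]."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def probabilities(state):
    """Measurement probabilities |alpha|^2 and |beta|^2."""
    return tuple(abs(a) ** 2 for a in state)

superposed = hadamard(ZERO)         # equal superposition of |0> and |1>
interfered = hadamard(superposed)   # amplitudes for |1> cancel, amplitudes for |0> add up

print(probabilities(superposed))
print(probabilities(interfered))
```

The first result is close to an even split and the second is close to certainty for |0⟩, up to floating-point rounding.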

Classical vs. Quantum Computation

The core differences between classical and quantum computation are summarized below:

| Feature | Classical Computation | Quantum Computation |
|---|---|---|
| Basic Unit | Bit (0 or 1) | Qubit (0, 1, or superposition) |
| Computation Type | Deterministic, sequential | Probabilistic, parallel |
| Problem Solving | Limited in certain complex problems | Potential for solving intractable problems |
| Scalability | Limited by physical constraints | Significant challenges in scalability |

Potential Applications of Quantum Computing

Quantum computing holds immense potential across diverse fields.

Drug Discovery

Quantum simulation can model the behavior of molecules with unprecedented accuracy, accelerating drug design and discovery. By simulating molecular interactions, researchers can identify potential drug candidates and optimize their efficacy, reducing the time and cost associated with traditional drug development methods. While still in its early stages, several companies are actively pursuing this application, with promising results in simulating small molecules.

Materials Science

Quantum algorithms can accelerate the discovery of new materials with specific properties, such as high-temperature superconductors or novel catalysts. By simulating the electronic structure and properties of materials, researchers can predict the behavior of materials under various conditions, leading to the design of materials with tailored properties. This could revolutionize various industries, including energy, electronics, and manufacturing. Initial successes in simulating small material systems demonstrate the potential of this approach.

Cryptography

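Quantum computers pose a significant threat to current encryption methods whose security rests on the difficulty of factoring large numbers, such as RSA, because Shor’s algorithm can factor integers in polynomial time on a sufficiently large, fault-tolerant quantum computer. In response, researchers are developing quantum-resistant (post-quantum) cryptography, which runs on classical hardware and relies on mathematical problems believed to be hard even for quantum computers. Quantum key distribution is a separate approach that does rely on quantum-mechanical principles for security. The transition to quantum-resistant cryptography is a crucial step in ensuring future data security. Standardization efforts are underway to define and implement these new cryptographic techniques.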
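To see why factoring matters, consider a toy RSA key built from tiny primes. The sketch below is an illustration under obvious assumptions: the primes, the message, and the helper `factor` are chosen for this example, the factoring is classical brute force rather than a quantum algorithm, and real RSA moduli are thousands of bits long. It shows only that anyone who can factor the public modulus can rebuild the private key.

```python
def factor(n):
    """Brute-force trial division; feasible only because n is tiny."""
    for candidate in range(2, int(n ** 0.5) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n has no nontrivial factors")

# Toy key generation with tiny primes.
p, q, e = 61, 53, 17
n = p * q                                  # public modulus
d = pow(e, -1, (p - 1) * (q - 1))          # private exponent (needs Python 3.8+)

message = 65
ciphertext = pow(message, e, n)            # encryption with the public key (n, e)

# An attacker who factors n rebuilds the private exponent from public data alone.
p_found, q_found = factor(n)
d_recovered = pow(e, -1, (p_found - 1) * (q_found - 1))
print(pow(ciphertext, d_recovered, n) == message)
```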

Challenges of Building and Programming Quantum Computers

Building and programming quantum computers present significant challenges.

Qubit Coherence

Maintaining the delicate quantum states of qubits is crucial. Environmental noise can cause decoherence, leading to errors. Research focuses on improving qubit coherence times through improved qubit designs and more precise control methods, across hardware platforms such as superconducting circuits and trapped ions.

Scalability

Building large-scale, fault-tolerant quantum computers is extremely difficult. Quantum error correction techniques are essential to mitigate errors caused by decoherence and other noise sources. The challenge lies in developing efficient and scalable error correction codes and implementing them in physical quantum hardware.

Programming Paradigms

Quantum programming differs significantly from classical programming. Developing quantum algorithms requires a deep understanding of quantum mechanics and specialized programming languages and tools like Qiskit or Cirq.

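Example (Qiskit): A simple Hadamard gate operation.

```python
from qiskit import QuantumCircuit
from qiskit.quantum_info import Statevector

# One-qubit circuit with a Hadamard gate, which maps |0> to (|0> + |1>)/sqrt(2)
qc = QuantumCircuit(1)
qc.h(0)

# Compute the final statevector directly from the circuit.
# The older `Aer` and `execute` imports from the top-level `qiskit`
# package were removed in Qiskit 1.0.
state = Statevector.from_instruction(qc)
print(state)
```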

Current State and Future Prospects of Quantum Computing

Quantum computing is still in its nascent stages, but the progress is remarkable. While building fault-tolerant, large-scale quantum computers remains a significant challenge, substantial advancements have been made in qubit technology, quantum algorithms, and error correction. The potential societal impact is immense, promising breakthroughs in medicine, materials science, and information technology. However, ethical considerations surrounding the potential misuse of quantum computing, particularly in cryptography and data security, need careful consideration.

The next decade will likely witness a transition from proof-of-concept experiments to more practical applications, gradually transforming various aspects of our lives. The development of quantum-resistant cryptography, for instance, is crucial to ensure the continued security of our digital infrastructure in the quantum era. This will require substantial investment in research and development, as well as international collaboration to establish global standards and guidelines for the responsible development and deployment of this transformative technology.

Software Engineering Principles

Software engineering, often seen as the practical application of computer science, bridges the gap between theoretical concepts and the creation of functional, reliable, and maintainable software systems. It’s a discipline that encompasses a broad range of activities, from initial design and development to testing, deployment, and maintenance. Understanding its core principles is vital for building robust and successful software projects.

Theoretical Underpinnings of Software Engineering

Software engineering and computer science share a significant overlap, with computer science providing the foundational theoretical knowledge – algorithms, data structures, and computational theory – upon which software engineering builds. However, software engineering focuses on the practical aspects of software development, emphasizing teamwork, project management, and the entire software lifecycle, aspects less central to core computer science. The difference can be likened to the difference between knowing the theory of flight and actually building and piloting an airplane.

Theoretical models significantly impact software development practices. The waterfall model, with its linear, sequential approach, provides a structured framework, but often struggles with adapting to changing requirements. Agile methodologies, in contrast, embrace iterative development and flexibility, better accommodating evolving needs. The choice of methodology depends heavily on the project’s nature and constraints.

| Framework | Strengths | Weaknesses | Applicability |
|---|---|---|---|
| Waterfall Model | Simple to understand and manage; well-defined stages; easy to track progress in early stages. | Inflexible; difficult to accommodate changing requirements; testing happens late in the process, leading to potential costly rework. | Suitable for projects with well-defined requirements and minimal anticipated changes, such as embedded systems or large-scale infrastructure projects where changes are expensive. |
| Agile (Scrum/Kanban) | Flexible and adaptable to changing requirements; frequent feedback loops; faster time to market; improved collaboration. | Requires experienced and self-managing teams; can be challenging to manage in large, complex projects; requires strong communication and collaboration. | Suitable for projects with evolving requirements, where frequent feedback is crucial, and rapid iteration is desired, such as web applications or mobile apps. |
| Spiral Model | Risk management is integrated throughout the process; allows for early detection and mitigation of risks; accommodates changing requirements. | Can be complex to manage; requires significant expertise in risk assessment; may be overkill for simpler projects. | Suitable for large, complex projects with high risk and uncertainty, where iterative development and risk mitigation are critical, such as aerospace or defense systems. |

Principles of Software Design and Architecture

The SOLID principles are crucial for creating maintainable and extensible object-oriented software. These principles guide developers in designing classes and modules that are independent, reusable, and easy to understand.

Here’s an illustration of the Single Responsibility Principle (SRP) in Java:

```java
// Violates SRP: handles both user authentication and database interaction
class UserAuthenticator {
    public boolean authenticate(String username, String password) {
        // … authentication logic …
        // … database interaction to check user credentials …
    }
}

// Follows SRP: separates authentication and database interaction
class UserAuthenticator {
    public boolean authenticate(String username, String password) {
        // … authentication logic …
    }
}

class UserDatabase {
    public boolean isValidUser(String username, String password) {
        // … database interaction …
    }
}
```

Architectural patterns provide blueprints for structuring software systems. Microservices decompose applications into small, independent services, enhancing scalability and maintainability. Layered architecture organizes the system into distinct layers (presentation, business logic, data access), promoting modularity. Event-driven architecture uses events to trigger actions, facilitating asynchronous communication. The choice of pattern depends on the application’s requirements and scalability needs.

A UML class diagram for a simple e-commerce system would show classes like `Product`, `Customer`, `Order`, `ShoppingCart`, and their relationships (e.g., a `Customer` can place many `Orders`, an `Order` contains many `Products`). A sequence diagram would illustrate the interactions between these classes during a typical purchase flow.
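The class relationships described above can also be sketched directly in code. The Python dataclasses below are one possible rendering, not a prescribed design: the fields (`name`, `price`), the methods `total` and `place_order`, and the omission of `ShoppingCart` are all assumptions made to keep the example short.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Product:
    name: str
    price: float

@dataclass
class Order:
    # One-to-many: an Order contains many Products.
    products: List[Product] = field(default_factory=list)

    def total(self) -> float:
        """Sum of the prices of all products in the order."""
        return sum(product.price for product in self.products)

@dataclass
class Customer:
    name: str
    # One-to-many: a Customer can place many Orders.
    orders: List[Order] = field(default_factory=list)

    def place_order(self, products: List[Product]) -> Order:
        """Create an Order for the given products and record it on the customer."""
        order = Order(products=list(products))
        self.orders.append(order)
        return order

customer = Customer("Ada")
order = customer.place_order([Product("Book", 12.5), Product("Pen", 2.5)])
print(order.total(), len(customer.orders))
```

The call to `place_order` corresponds to one message in the sequence diagram for a purchase flow.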

Software Testing and Verification