How to solve game theory problems with fmincon in MATLAB: Embark on a journey into the fascinating world where strategic thinking meets computational power. We’ll unravel the intricacies of game theory, exploring concepts like Nash equilibrium and Pareto efficiency, and then harness the robust optimization capabilities of MATLAB’s fmincon function to find solutions. Prepare to be captivated as we bridge the gap between theoretical frameworks and practical applications, revealing how seemingly complex game scenarios can be elegantly tackled using this powerful tool.

This exploration promises not only a deeper understanding of game theory but also a practical skillset to tackle real-world strategic challenges.

This guide will walk you through the process of formulating game theory problems as optimization problems suitable for fmincon. We’ll cover various game types, from zero-sum games to non-zero-sum games, and demonstrate how to define objective functions, constraints, and bounds to effectively use fmincon. We will also explore different algorithms within fmincon and interpret the results in the context of game theory, including analyzing optimal strategies and equilibrium payoffs.

Along the way, we will illustrate these concepts with detailed MATLAB code examples and address common challenges faced when applying optimization techniques to game theory problems.

Formulating Game Theory Problems for `fmincon`

Embark on this journey of translating the elegant dance of strategic interaction, the very essence of game theory, into the precise language of numerical optimization using MATLAB’s `fmincon`. This transformative process allows us to leverage the power of computational methods to illuminate the often-hidden equilibrium points of complex games. We shall discover how to represent the intricate tapestry of payoffs and strategies as a landscape suitable for `fmincon` to traverse, revealing optimal strategies with the grace of a seasoned strategist. The core of this transformation lies in representing the game’s payoff structure and the players’ strategic choices in a way that `fmincon` can understand.

This involves defining an objective function that captures the essence of the game’s dynamics and constraints that reflect the limitations and rules of the game itself. The path to enlightenment involves a profound understanding of both game theory and optimization techniques.

Representing the Payoff Matrix

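The payoff matrix, the heart of any game, needs to be encoded in a form that your objective and constraint functions can use. `fmincon` itself only sees a vector of decision variables, so the payoff data usually enters through the objective function, for example as a variable captured by an anonymous function. In practice this means representing the payoffs as a multi-dimensional array or a set of functions. For example, consider a two-player game with each player having two strategies. The payoff matrix for Player 1 could be represented as a 2×2 matrix, [a b; c d], where rows index Player 1’s strategies, columns index Player 2’s strategies, and ‘a’, ‘b’, ‘c’, and ‘d’ represent the payoffs to Player 1 under the corresponding strategy combinations.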

Similarly, a separate matrix would represent the payoffs for Player 2. For games with more players or strategies, higher-dimensional arrays or functions will be necessary to capture the complexity. The key is to establish a clear and consistent mapping between the strategy choices and the corresponding payoff values.
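To make the mapping concrete, here is a minimal MATLAB sketch for a two-player, two-strategy game. It assumes mixed strategies are column vectors `x` (Player 1) and `y` (Player 2), so expected payoffs are `x'*A*y` and `x'*B*y`; the Prisoner’s Dilemma numbers and the helper name `expectedPayoff` are illustrative assumptions, not values from this guide.

```matlab
% Illustrative Prisoner's Dilemma payoffs (strategy 1 = Cooperate, 2 = Defect).
% Rows index Player 1's strategies, columns index Player 2's strategies.
A = [3 0; 5 1];   % payoffs to Player 1
B = A';           % payoffs to Player 2 (the game is symmetric)

% Hypothetical helper: expected payoff of a mixed-strategy profile (x, y),
% both given as column vectors of probabilities.
expectedPayoff = @(M, x, y) x' * M * y;

x = [0.5; 0.5];   % Player 1 mixes evenly
y = [0; 1];       % Player 2 always defects
u1 = expectedPayoff(A, x, y);   % 0.5*0 + 0.5*1 = 0.5
u2 = expectedPayoff(B, x, y);   % 0.5*5 + 0.5*1 = 3
fprintf('Player 1: %.2f, Player 2: %.2f\n', u1, u2);
```

The same expression works unchanged for larger matrices, which is why objective functions built on it scale to games with more strategies.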

Defining the Objective Function

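The objective function guides `fmincon` toward the optimal solution. Its form depends on the type of game. For zero-sum games, where one player’s gain is exactly balanced by another’s loss, the objective represents the expected payoff Player 1 can guarantee, which `fmincon` maximizes by minimizing its negative (equivalently, Player 2’s best achievable payoff is minimized). Crucially, the objective must depend on the decision variables: an expression such as `-sum(P1(:))` is a constant and gives `fmincon` nothing to optimize. If P1 is Player 1’s payoff matrix and x is Player 1’s mixed strategy, a standard formulation appends an auxiliary variable v to the decision vector, z = [x; v], uses the objective `f = @(z) -z(end)`, and adds one linear constraint `v - x'*P1(:,j) <= 0` for every column j, so that v can never exceed the payoff x earns against any pure reply of Player 2.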

This approach finds the minimax solution, a cornerstone of zero-sum game theory. Non-zero-sum games present a more nuanced challenge. Here, the players’ payoffs are not necessarily opposed: both players can gain or lose together. The objective function in this case depends on the specific game and the players’ goals. It could involve maximizing the expected utility of a player, minimizing risk, or aiming for a Nash equilibrium.

The choice of objective function directly reflects the strategic goals of the players involved. For instance, we could have a function that aims to maximize a weighted sum of the payoffs for multiple players, representing a cooperative game scenario.
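As a sketch of the cooperative case, the handle below scores a joint mixed-strategy profile by a weighted sum of both players’ expected payoffs. The weights `w`, the Prisoner’s Dilemma matrices, and the stacked decision vector z = [x; y] are assumptions chosen for illustration.

```matlab
% Illustrative Prisoner's Dilemma payoffs (rows: Player 1, columns: Player 2).
A = [3 0; 5 1];          % Player 1's payoffs
B = A';                  % Player 2's payoffs
w = [0.5, 0.5];          % hypothetical weights on the two players' payoffs

% Decision vector z = [x; y]: Player 1's mixed strategy, then Player 2's.
% fmincon minimizes, so the weighted welfare enters with a minus sign.
weightedWelfare = @(z) -( w(1) * (z(1:2)' * A * z(3:4)) + ...
                          w(2) * (z(1:2)' * B * z(3:4)) );

zCC = [1; 0; 1; 0];      % both cooperate
zDD = [0; 1; 0; 1];      % both defect
fprintf('f(C,C) = %.2f, f(D,D) = %.2f\n', weightedWelfare(zCC), weightedWelfare(zDD));
```

To optimize it, the handle can be passed to `fmincon` with `Aeq = [1 1 0 0; 0 0 1 1]`, `beq = [1; 1]`, `lb = zeros(4,1)` and `ub = ones(4,1)`. The objective is bilinear rather than convex, so `fmincon` may stop at a local rather than a global optimum.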

Translating Game Theory Problems into Constrained Optimization Problems

Consider a simple zero-sum game between two players, each choosing either “Cooperate” or “Defect.” The payoff matrix for Player 1 is [2, -1; -1, 0]. This can be translated into an optimization problem where the objective is to minimize Player 2’s payoff (which is equivalent to maximizing Player 1’s payoff). The strategy of Player 1 can be represented as a probability vector x = [x1; x2], where x1 is the probability of choosing “Cooperate” and x2 is the probability of choosing “Defect” (with x1 + x2 = 1).

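The objective function then becomes a function of x: Player 1’s worst-case expected payoff over Player 2’s pure replies, min over j of `x'*P(:,j)` with P = [2, -1; -1, 0], which is exactly the largest payoff Player 2 can hold Player 1 down to. The constraint x1 + x2 = 1, together with x1, x2 ≥ 0, ensures that the probabilities form a valid distribution. Because this minimum over replies is not smooth, it is usually handled with an auxiliary variable v and one linear constraint per column of P, which turns the problem into a linear program. `fmincon` can then be used to find the strategy x that minimizes Player 2’s best achievable expected payoff, thus revealing the optimal strategy for Player 1 in this zero-sum game. The extension to more complex games, with more players and strategies, requires a similar approach, but with higher-dimensional vectors and more intricate constraints.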
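The sketch below solves this 2×2 game with the auxiliary-variable (maximin) formulation, assuming the Optimization Toolbox is installed. It also cross-checks the answer against the standard closed form for a 2×2 zero-sum game without a saddle point; for this matrix both should give x1 ≈ 0.25 and a game value of about -0.25.

```matlab
% Zero-sum game from the text: payoffs to Player 1 (rows: Cooperate, Defect).
P1 = [2 -1; -1 0];

% Decision vector z = [x1; x2; v]: Player 1's mixed strategy and the payoff v
% it can guarantee. Maximizing v is written as minimizing -v.
objective = @(z) -z(3);

% For each column j of P1: v - x' * P1(:, j) <= 0, written as A*z <= b.
A = [-P1', ones(2, 1)];
b = zeros(2, 1);
Aeq = [1 1 0];  beq = 1;              % x1 + x2 = 1
lb = [0; 0; -Inf];  ub = [1; 1; Inf]; % probabilities in [0, 1]; v is free
z0 = [0.5; 0.5; -1];                  % feasible start: v = -1 is guaranteed

opts = optimoptions('fmincon', 'Display', 'off');
[z, fval, exitflag] = fmincon(objective, z0, A, b, Aeq, beq, lb, ub, [], opts);
x = z(1:2);  v = z(3);

% Closed form for a 2x2 game without a saddle point, with P1 = [a bb; c d]:
% p = (d - c) / (a - bb - c + d), value = (a*d - bb*c) / (a - bb - c + d).
a = P1(1,1); bb = P1(1,2); c = P1(2,1); d = P1(2,2);
pExact = (d - c) / (a - bb - c + d);
vExact = (a*d - bb*c) / (a - bb - c + d);
fprintf('fmincon: x1 = %.4f, v = %.4f (closed form: %.4f, %.4f)\n', x(1), v, pExact, vExact);
```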

Defining Constraints and Bounds in `fmincon`

The path to enlightenment in solving game theory problems using `fmincon` lies not only in defining the objective function but also in skillfully crafting the constraints that shape the solution space. Constraints, like the boundaries of a sacred garden, guide the optimization process towards meaningful and feasible outcomes. Understanding and implementing these constraints is paramount for achieving optimal results.

Probability Constraints in Game Theory using `fmincon`

Probability constraints are essential in game theory, ensuring that the solutions represent valid probability distributions. These constraints reflect the inherent probabilistic nature of many strategic interactions. They ensure the solutions adhere to the axioms of probability.

| Constraint Type | Mathematical Representation | Example Scenario (Two-Player Game) |
|---|---|---|
| Probability Simplex | Σ_i p_i = 1, p_i ≥ 0 for all i | Player 1’s mixed strategy probabilities (p_i representing the probability of choosing strategy i) must sum to 1 and be non-negative. This ensures a valid probability distribution over Player 1’s strategies. |
| Individual Probability | 0 ≤ p_i ≤ 1 | The probability (p_i) of Player 2 choosing a specific action (i) must be between 0 and 1, inclusive. |
| Conditional Probability | P(A\|B) = P(A∩B) / P(B) | The probability of Player 1 winning (event A) given Player 2 chose a specific action (event B) is calculated using conditional probability. This might be used to constrain the probability of a specific outcome under certain conditions. |

Implementing a probability simplex constraint in `fmincon` for a zero-sum game with two players, each having three strategies, involves defining the linear equality constraint matrix (Aeq), constraint vector (beq), bounds, and decision variable vector (x). The decision variables represent the probabilities of each player choosing their respective strategies. The simplex conditions are equalities, so they belong in Aeq and beq; placing them in A and b would only require each sum to be at most 1.

```matlab
% Decision variables: x = [p1_1, p1_2, p1_3, p2_1, p2_2, p2_3]' (probabilities for each player's strategies)
Aeq = [1, 1, 1, 0, 0, 0; 0, 0, 0, 1, 1, 1];  % Probability simplex constraints for each player
beq = [1; 1];                                % Each player's probabilities must sum to 1
lb = zeros(6,1);                             % Lower bounds: probabilities cannot be negative
ub = ones(6,1);                              % Upper bounds: probabilities cannot exceed 1
% … rest of the fmincon setup …
```

Enforcing a constraint that limits the probability of Player 1 winning (let’s say this is a linear combination of the decision variables, represented by `C*x`, where C is a coefficient matrix) to be less than or equal to 0.6 requires a linear inequality in `fmincon`’s `A*x <= b` form:

```matlab
C = [0.2, 0.5, 0.3, 0, 0, 0];  % Example coefficients reflecting the contribution of each strategy to Player 1 winning
A = C;                         % Linear inequality: C*x <= 0.6
b = 0.6;                       % Probability of Player 1 winning <= 0.6
% Aeq and beq keep the simplex constraints defined above
```
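Before trusting any solver output, it helps to measure how badly a candidate point violates these constraints. The anonymous helper `maxViolation` below is hypothetical (not a MATLAB or toolbox function); it encodes the constraints described above, the probability simplex for each player plus the cap `C*x <= 0.6` with the example coefficients, and returns 0 for a feasible point.

```matlab
% Hypothetical helper: largest violation of A*x <= b, Aeq*x = beq, lb <= x <= ub.
maxViolation = @(x, A, b, Aeq, beq, lb, ub) max([0; ...
    A*x - b; abs(Aeq*x - beq); lb - x; x - ub]);

% Simplex for each player (three strategies each) plus the cap C*x <= 0.6.
Aeq = [1 1 1 0 0 0; 0 0 0 1 1 1];  beq = [1; 1];
A   = [0.2 0.5 0.3 0 0 0];         b   = 0.6;
lb  = zeros(6, 1);                 ub  = ones(6, 1);

xGood = [1 1 1 1 1 1]' / 3;            % uniform mixed strategies
xBad  = [0.5 0.5 0.5 0.2 0.3 0.5]';    % Player 1's probabilities sum to 1.5
fprintf('violation(xGood) = %.3g, violation(xBad) = %.3g\n', ...
    maxViolation(xGood, A, b, Aeq, beq, lb, ub), ...
    maxViolation(xBad, A, b, Aeq, beq, lb, ub));
```

A nonzero value on a returned solution that is larger than the solver’s constraint tolerance points to a formulation problem rather than a numerical one.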

Budget Constraints and Resource Allocation in `fmincon`

Budget constraints, mirroring the limitations of earthly resources, impose realistic boundaries on the optimization process.

These constraints are crucial when modeling resource allocation in games where actions have associated costs. Consider a scenario where two players compete for resources. Each player has a budget and must choose actions with varying costs. This can be translated into a linear inequality constraint in `fmincon`, `cost*x <= budget`, which ensures that the total cost of chosen actions does not exceed the budget.

```matlab
% Assume 'cost' is a row vector of costs associated with each action,
% 'x' is a column vector of decision variables representing the level of each action,
% and 'budget' is the total budget.
A = cost;    % Constraint matrix: cost*x <= budget
b = budget;  % Constraint vector
```

```matlab
% Example with both probability simplex and budget constraints
% Objective function (replace with your actual objective function)
objectiveFunction = @(x) x(1)^2 + x(2)^2 + x(3)^2;
% Decision variables (3 strategies for each of two players)
x0 = [0.33, 0.33, 0.34, 0.25, 0.25, 0.5]';
% Probability simplex constraints (equalities)
Aeq = [1, 1, 1, 0, 0, 0; 0, 0, 0, 1, 1, 1];
beq = [1; 1];
lb = zeros(6, 1);
ub = ones(6, 1);
% Budget constraint (example): expected cost must not exceed the budget
cost = [10, 20, 30, 5, 15, 25];
budget = 50;
A = cost;
b = budget;
% Options (adjust as needed)
options = optimoptions('fmincon','Display','iter');
% Optimization
[x,fval] = fmincon(objectiveFunction,x0,A,b,Aeq,beq,lb,ub,[],options);
```

Defining Bounds on Decision Variables (Mixed Strategies)

Bounds, like the gentle embrace of the universe, restrict the range of values that decision variables can take. This is particularly important when dealing with mixed strategies, ensuring that probabilities remain within their valid range (0 to 1). For continuous decision variables representing probabilities, `lb` and `ub` in `fmincon` can be used to set lower and upper bounds directly. For example, setting `lb = zeros(n,1)` and `ub = ones(n,1)` ensures that all probabilities are between 0 and 1. For discrete decision variables (e.g., number of units to deploy), integer constraints must be combined with bounds.

`fmincon` does not accept integer constraints, so this requires an integer-capable solver such as `intlinprog` (for linear problems) or `ga` (which accepts an `intcon` argument), or else rounding a continuous `fmincon` solution and re-checking feasibility.

```matlab
% Integer constraint and bounds for number of units (0 to 10)
lb = 0;      % Lower bound
ub = 10;     % Upper bound
intcon = 1;  % Integer constraint on the first variable (index 1)
% intcon is an input to intlinprog or ga, not to fmincon; for a linear model:
% x = intlinprog(f, intcon, A, b, Aeq, beq, lb, ub);
```
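When the integer model is linear, `intlinprog` handles bounds and integrality together. The deployment data below (`value`, `cost`, `budget`) is a hypothetical example, assuming the Optimization Toolbox is installed; unit type 2 has the best payoff per unit of cost, so the optimum spends the whole budget on it.

```matlab
% Hypothetical deployment problem: integer numbers of three unit types.
value  = [3 2 4];     % payoff contributed by one unit of each type
cost   = [2 1 3];     % cost of one unit of each type
budget = 10;

f = -value';                      % intlinprog minimizes, so negate the payoff
intcon = 1:3;                     % all three variables must be integers
lb = zeros(3, 1);                 % no negative deployments
ub = 10 * ones(3, 1);             % at most 10 units of each type
% Linear inequality cost*x <= budget; no equality constraints.
[units, fval, exitflag] = intlinprog(f, intcon, cost, budget, [], [], lb, ub);
fprintf('units = [%g %g %g], payoff = %g\n', units, -fval);
```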

Nonlinear Constraints in `fmincon` for Game Theory

Nonlinear constraints, like the unpredictable currents of a river, add complexity to the optimization landscape. They arise when the relationships between decision variables are not linear. `fmincon` handles these through its `nonlcon` input argument, a function that returns the nonlinear inequality constraints `c(x) <= 0` and equality constraints `ceq(x) = 0`; supplying their gradients is optional and is enabled with the `SpecifyConstraintGradient` option. For instance, consider a game where a player’s feasible actions are limited by a condition involving the square of one action:

```matlab
% Example nonlinear constraint: x1^2 + x2 <= 5
% ... in the fmincon call (objectiveFunction, x0, A, b, Aeq, beq, lb, ub defined as before):
options = optimoptions('fmincon','Display','iter','Algorithm','interior-point');
[x,fval] = fmincon(objectiveFunction,x0,A,b,Aeq,beq,lb,ub,@nonlinearConstraint,options);

% In a script, local functions must appear at the end of the file.
function [c,ceq] = nonlinearConstraint(x)
    c = x(1)^2 + x(2) - 5;  % Inequality constraint c(x) <= 0
    ceq = [];               % No equality constraints
end
```

Using bounds that are too restrictive can make the problem infeasible, meaning no point satisfies all the constraints. Bounds that are too loose let the solver explore regions of the solution space that are not relevant, which slows convergence and, for nonconvex problems, can lead to less useful local solutions. A careful balance is crucial.
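A quick way to see the infeasibility failure mode is to give `fmincon` bounds that contradict the simplex constraint. In this hypothetical setup each of three probabilities must be at least 0.6, so their sum is at least 1.8 and can never equal 1; `fmincon` then reports a non-positive `exitflag` (typically -2, no feasible point found) instead of a solution. The sketch assumes the Optimization Toolbox.

```matlab
% Deliberately over-restrictive bounds: three probabilities that must sum to 1,
% each forced to be at least 0.6, so no point satisfies every constraint.
objective = @(x) sum(x.^2);
Aeq = ones(1, 3);  beq = 1;
lb = 0.6 * ones(3, 1);  ub = ones(3, 1);
x0 = lb;

opts = optimoptions('fmincon', 'Display', 'off');
[x, fval, exitflag] = fmincon(objective, x0, [], [], Aeq, beq, lb, ub, [], opts);
if exitflag <= 0
    fprintf('No feasible solution found (exitflag = %d).\n', exitflag);
end
```

Checking `exitflag` before reading `x` is a cheap habit that prevents an infeasible point from being interpreted as an equilibrium.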

Choosing Appropriate Algorithms for `fmincon`

Embarking on the path of solving game theory problems using MATLAB’s `fmincon` function is akin to a spiritual journey. The choice of algorithm is the compass guiding our exploration, leading us towards optimal solutions or potentially astray if not carefully considered. The landscape of optimization algorithms is vast, and each possesses its unique strengths and weaknesses, its own karmic imprint, if you will.

Understanding these nuances is crucial for achieving enlightenment, that is, finding the optimal strategy in our game. The `fmincon` function offers several algorithms, chosen with the `Algorithm` option: `'interior-point'` (the default), `'sqp'`, `'sqp-legacy'`, `'active-set'`, and `'trust-region-reflective'` (the last requires a user-supplied gradient and accepts only bounds or only linear equalities). Each is a distinct path toward enlightenment. These algorithms differ fundamentally in their approach to navigating the complex terrain of the objective function and constraint landscape. The selection of the appropriate algorithm directly impacts the efficiency of our journey and the accuracy of the solution we attain.

A wise choice leads to swift progress and a precise destination; an unwise choice may lead to prolonged struggle and an imprecise, even illusory, result.

Interior-Point Algorithm

The interior-point algorithm, a powerful technique, operates by traversing the interior of the feasible region. It iteratively refines its estimate of the optimal solution, using barrier terms for the inequalities and, by default (the `HonorBounds` option), keeping every iterate within the bounds, so it reaches constraints that are active at the solution only in the limit. This approach is particularly effective for problems with many variables and constraints, especially sparse ones, where deciding which constraints are active can be computationally expensive. The algorithm’s elegance lies in its ability to handle large-scale problems with relative grace, much like a seasoned yogi navigating complex asanas.

Its per-iteration cost, however, can be more than a small problem needs: MathWorks’ algorithm-selection guidance suggests trying `'sqp'`, and then `'active-set'`, when more speed is wanted on small and medium-sized problems. Consider a large-scale auction scenario with numerous bidders and complex bidding strategies. The interior-point algorithm would be well suited to the high dimensionality of such a problem, converging toward a locally optimal bidding strategy.

Active-Set Algorithm

In contrast, the active-set algorithm works on the boundary of the feasible region: it maintains a working set of constraints treated as active (holding with equality) and updates that set from one iteration to the next. It’s a more deliberate approach, akin to a careful artisan meticulously crafting a masterpiece. This can be highly effective for smaller problems with relatively few constraints, where it can take large steps and expose which constraints shape the solution. However, for larger problems, the computational cost can become prohibitive, because in the worst case the number of candidate active sets grows combinatorially with the number of constraints.

Imagine a small matrix game such as rock-paper-scissors, written as a maximin problem with only a handful of linear constraints. The active-set algorithm, with its focus on boundary conditions, can quickly identify which of those constraints bind at the optimal mixed strategy.

Algorithm Choice and its Impact

The choice between interior-point and active-set algorithms, or other algorithms offered by `fmincon`, is not a matter of simple preference, but rather a strategic decision guided by the nature of the game theory problem at hand. For large-scale problems with numerous players and intricate strategic interactions, the interior-point algorithm’s efficiency in handling high dimensionality often makes it the preferred choice.

However, for smaller problems with fewer constraints and a need for very high accuracy, the active-set algorithm’s precise boundary exploration might yield superior results. The impact of this choice resonates throughout the entire optimization process, influencing both the computational time required and the accuracy of the final solution. A judicious selection of the algorithm is therefore paramount in achieving the desired balance between efficiency and precision.
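One practical way to make this choice is to run the same model under several algorithms and compare the answers and the work required. The sketch below does this for the 2×2 zero-sum game [2, -1; -1, 0] in its maximin form, assuming the Optimization Toolbox; the function-evaluation counts it prints are only indicative and will vary with the problem and the MATLAB release.

```matlab
% 2x2 zero-sum game written as a maximin problem in z = [x1; x2; v].
P1 = [2 -1; -1 0];
objective = @(z) -z(3);                   % maximize the guaranteed payoff v
A = [-P1', ones(2, 1)];  b = zeros(2, 1); % v - x'*P1(:,j) <= 0 for each column j
Aeq = [1 1 0];  beq = 1;                  % x1 + x2 = 1
lb = [0; 0; -Inf];  ub = [1; 1; Inf];
z0 = [0.5; 0.5; -1];                      % feasible starting point

algorithms = {'interior-point', 'sqp', 'active-set'};
results = zeros(numel(algorithms), 3);    % columns: x1, v, function evaluations
for k = 1:numel(algorithms)
    opts = optimoptions('fmincon', 'Algorithm', algorithms{k}, 'Display', 'off');
    [z, ~, exitflag, output] = fmincon(objective, z0, A, b, Aeq, beq, lb, ub, [], opts);
    results(k, :) = [z(1), z(3), output.funcCount];
    fprintf('%-15s x1 = %.4f  v = %.4f  funcCount = %d  exitflag = %d\n', ...
        algorithms{k}, z(1), z(3), output.funcCount, exitflag);
end
```

If the algorithms disagree on a larger model, that is itself useful information: it often signals multiple local solutions or a poorly scaled formulation.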

The path to enlightenment requires not just diligent practice, but also wise choices along the way.

Interpreting `fmincon` Results in the Context of Game Theory

The culmination of our journey into solving game theory problems using MATLAB’s `fmincon` function lies in the insightful interpretation of its output. This is where the abstract mathematics transforms into actionable strategies and illuminates the equilibrium dynamics of the game. Understanding the results requires a keen awareness of both the numerical output and the underlying game-theoretic principles. `fmincon` returns the decision vector (which, in the formulations above, contains the players’ strategies), the objective value `fval`, an `exitflag` describing why the solver stopped, and optionally an `output` structure, Lagrange multipliers, the gradient, and the Hessian. Because `fval` is simply the value of whatever objective you defined, equilibrium payoffs usually have to be computed from the returned strategies.

However, the journey doesn’t end here; we must critically examine these results to ensure their validity and meaning within the game’s context. This involves verifying the solution’s compliance with the constraints, considering potential multiple equilibria, and understanding the sensitivity of the solution to changes in the game’s parameters. This process is akin to a spiritual awakening – a revelation of the hidden truths within the game’s structure.

Optimal Strategy Identification and Equilibrium Payoffs

`fmincon` returns a single vector containing all decision variables. For example, in a two-player game, the output might be a stacked vector [x1; x2], where x1 represents Player 1’s strategy and x2 represents Player 2’s. The associated objective function value is a single number. In a zero-sum game formulated as a maximin problem, it is the negative of the game’s value, so Player 1’s equilibrium payoff is `-fval` and Player 2’s is `fval`.

In a non-zero-sum game, the individual payoffs must be computed from the returned strategies: `x1'*A*x2` for Player 1 and `x1'*B*x2` for Player 2, where A and B are their payoff matrices. This process is analogous to finding enlightenment through self-reflection; the numbers reveal the inherent balance of the game.

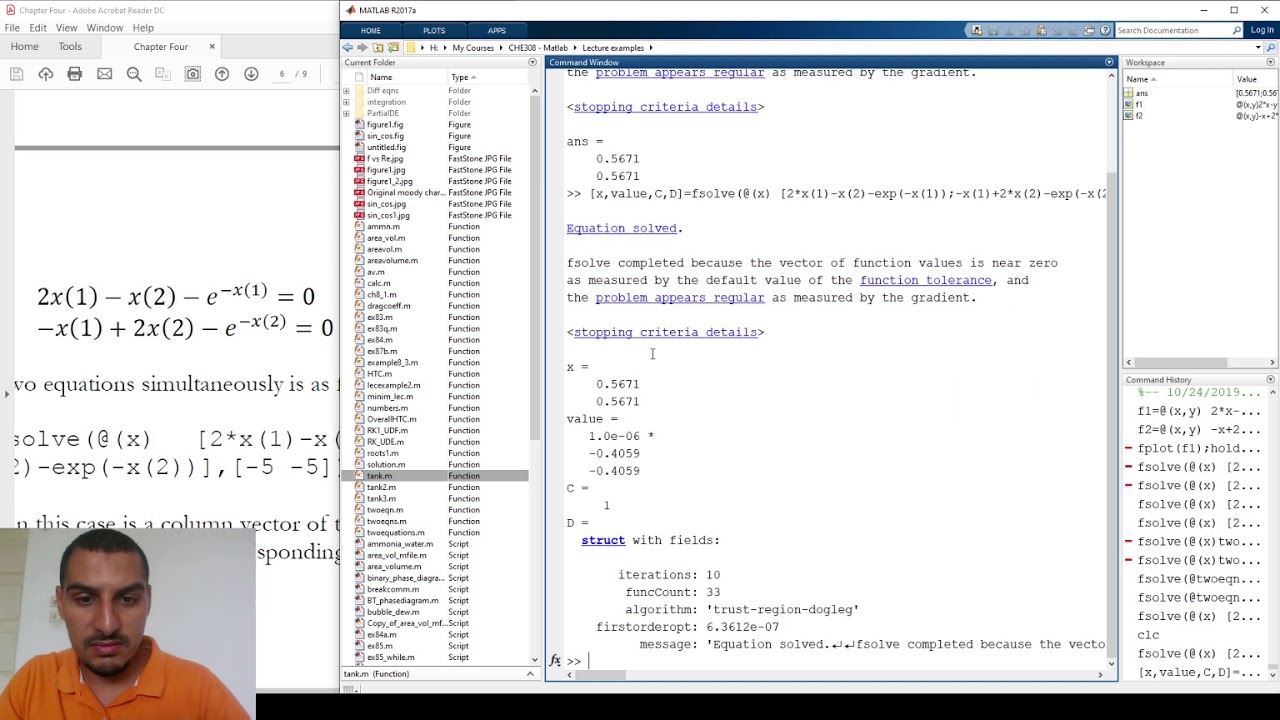

Solution Validity Verification

Verifying the solution’s validity involves several steps. First, ensure that the obtained strategy profile satisfies all the constraints defined within the `fmincon` function. A violation suggests an error in the problem formulation. Second, check if the solution is indeed a Nash equilibrium. This means that no player can improve their payoff by unilaterally changing their strategy, given the other players’ strategies.

This verification acts as a spiritual test, ensuring the integrity of our findings. Finally, sensitivity analysis helps to determine how robust the solution is to changes in the game parameters. A small change in a parameter shouldn’t drastically alter the optimal strategy; if it does, the equilibrium might be fragile.
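The Nash check described above can be computed directly. Because expected payoffs are linear in each player’s own mixed strategy, it is enough to compare against deviations to pure strategies. The sketch below assumes column-vector strategies and illustrative Prisoner’s Dilemma payoffs (the `regret` helper is hypothetical); it returns the largest gain either player could obtain by deviating alone, so a value near 0 means the profile is a Nash equilibrium.

```matlab
% Prisoner's Dilemma (1 = Cooperate, 2 = Defect); rows: Player 1, columns: Player 2.
A = [3 0; 5 1];   % Player 1's payoffs
B = A';           % Player 2's payoffs

% Largest unilateral gain available to either player at the profile (x, y).
% max(A*y) is Player 1's best pure reply; max(x'*B) is Player 2's.
regret = @(x, y) max(max(A*y) - x'*A*y, max(x'*B) - x'*B*y);

xD = [0; 1];  yD = [0; 1];   % both defect
xC = [1; 0];  yC = [1; 0];   % both cooperate
fprintf('regret(D,D) = %g, regret(C,C) = %g\n', regret(xD, yD), regret(xC, yC));
```

Applied to `fmincon` output, a regret slightly above zero reflects solver tolerances, while a clearly positive one means the point is not an equilibrium. Re-running the check after small perturbations of A and B gives a rough sensitivity analysis.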

Interpreting Results for Different Game Types

The interpretation of `fmincon` results varies depending on the type of game. For instance, in a Prisoner’s Dilemma, the optimal strategies might reveal the dominant strategy for each player, leading to a Nash equilibrium that may not be Pareto optimal. In a Cournot duopoly, the output represents the optimal quantities produced by each firm, leading to an equilibrium price and profit for each.
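For the Cournot case, the equilibrium quantities can be sketched with a best-response iteration. This swaps in closed-form best responses for an assumed linear-demand duopoly (the parameters `a`, `b`, `c` are hypothetical) instead of calling `fmincon`; each best-response step could equally be a one-variable `fmincon` call. For linear demand the iteration converges to the textbook equilibrium q_i = (a - c)/(3b).

```matlab
% Hypothetical linear Cournot duopoly: price P = a - b*(q1 + q2), unit cost c.
a = 100;  b = 1;  c = 10;

% Firm i's profit (a - b*(qi + qj) - c)*qi is maximized at qi = (a - c - b*qj)/(2b),
% truncated at zero so output is never negative.
bestResponse = @(qOther) max(0, (a - c - b*qOther) / (2*b));

q = [0; 0];                          % start from zero output
for iter = 1:100                     % simultaneous best-response updates
    qNew = [bestResponse(q(2)); bestResponse(q(1))];
    if max(abs(qNew - q)) < 1e-10
        q = qNew;
        break;
    end
    q = qNew;
end
price  = a - b * sum(q);
profit = (price - c) * q;
fprintf('q = [%.4f %.4f], price = %.4f, profits = [%.4f %.4f]\n', q, price, profit);
```

With these numbers each firm produces 30 units, the price is 40, and each firm earns 900.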

In a zero-sum game, the equilibrium payoff for one player will be the negative of the payoff for the other, reflecting the win-lose nature of the interaction. Each game type offers a unique spiritual lesson, revealing the intricate dance of competition and cooperation. For example, analyzing a repeated game might reveal the emergence of cooperation through iterative strategies, demonstrating the power of trust and reciprocity.

This interpretation is a spiritual journey, revealing the inherent dynamics of each game.

Example: Solving a Two-Player Zero-Sum Game

This section unveils the practical application of `fmincon` in solving a classic game theory problem: a two-player zero-sum game. We’ll illuminate the path to finding optimal strategies for both players, revealing the inherent harmony within conflict. The journey will involve defining the game’s structure, formulating the optimization problem, and interpreting the results, all within the framework of MATLAB’s powerful optimization toolbox.

Payoff Matrix Definition

The foundation of our game is the payoff matrix, a numerical representation of the potential outcomes for each player based on their choices. The following code defines a 3×3 payoff matrix with randomly generated values between -1 and 1, representing the gains for Player 1. Player 2 aims to minimize these gains.

```matlab
% Section 1: Payoff Matrix Definition
rng('default');                  % For reproducibility (comment out for a different random matrix each time)
payoffMatrix = 2*rand(3,3) - 1;  % Generates a 3x3 matrix with values between -1 and 1

% Display the payoff matrix
disp('Payoff Matrix:');
disp(payoffMatrix);
% Example interpretation of a single element:
% payoffMatrix(1,2) = 0.5 means if Player 1 chooses strategy 1 and Player 2 chooses strategy 2, Player 1 gains 0.5.
```

Objective Function Definition

The objective function encodes what Player 1 optimizes. Expected payoff in this game depends on both players’ strategies, `x'*payoffMatrix*y`, so a function of Player 1’s strategy alone (for instance `x'*payoffMatrix*x`, which reuses the same vector for both players) does not describe the game. Instead, Player 1 chooses x to maximize the payoff v it can guarantee against every reply of Player 2, and we append v to the decision vector, z = [x1; x2; x3; v]. Because `fmincon` aims to *minimize* a function and we want to *maximize* v, we minimize -v.

```matlab
% Section 2: Objective Function Definition
% Decision vector z = [x1; x2; x3; v]: Player 1's mixed strategy and its guaranteed payoff v
objectiveFunction = @(z) -z(4);  % Minimize -v, i.e. maximize v
```

Constraint Definition

The constraints ensure the validity of Player 1’s strategy and make v a true guarantee. The probabilities must sum to 1 (representing a complete strategy), each probability must be non-negative, and for every column j the expected payoff `x'*payoffMatrix(:,j)` must be at least v. In `fmincon`’s `A*z <= b` form, that last condition reads `v - x'*payoffMatrix(:,j) <= 0`.

```matlab
% Section 3: Constraint Definition
A = [-payoffMatrix', ones(3,1)];  % Row j: v - x'*payoffMatrix(:,j) <= 0
b = zeros(3,1);
Aeq = [ones(1,3), 0];             % Equality constraint: probabilities sum to 1
beq = 1;
lb = [zeros(3,1); -Inf];          % Lower bound: probabilities are non-negative; v is free
ub = [ones(3,1); Inf];            % Upper bound: probabilities are at most 1
```

Solver Implementation

Here, we invoke `fmincon` to find the optimal strategy for Player 1, which minimizes -v (thus maximizing the payoff Player 1 can guarantee). We specify the objective function, constraints, and options for the solver, and also request the Lagrange multipliers, which the next step uses.

```matlab
% Section 4: Solver Implementation
options = optimoptions('fmincon','Display','iter');  % Display iteration details
x0 = ones(3,1)/3;                                    % Initial guess: equal probabilities
z0 = [x0; min(payoffMatrix'*x0)];                    % Feasible starting value for v
try
    [zOpt, fval, exitflag, ~, lambda] = fmincon(objectiveFunction, z0, A, b, Aeq, beq, lb, ub, [], options);
    optimalStrategy = zOpt(1:3)';                    % Player 1's strategy as a row vector
catch ME
    disp(['Error during optimization: ', ME.message]);
end
```

Solution Analysis

After the optimization, we read off the value of the game (Player 1’s expected payoff under the optimal strategy). Player 2’s optimal strategy comes from the Lagrange multipliers of the three column constraints: by linear-programming duality, the multiplier on the constraint for column j is the probability Player 2 assigns to strategy j.

```matlab
% Section 5: Solution Analysis
if exist('optimalStrategy','var') && exitflag > 0
    valueOfGame = -fval;                     % Value of the game (Player 1's guaranteed expected payoff)
    y = lambda.ineqlin;                      % One multiplier per column constraint
    optimalStrategyPlayer2 = (y / sum(y))';  % Player 2's optimal strategy as a row vector
else
    disp('Optimization failed to converge.');
    clear optimalStrategy                    % Skip the display step below
end
```

Output Display

Finally, we present the results in a clear and concise manner, including a table summarizing the key findings and visualizations of the optimal strategies.

```matlab
% Section 6: Output Display
if exist('optimalStrategy','var')
    disp('Optimal Strategy (Player 1):'); disp(optimalStrategy);
    disp(['Value of the Game: ', num2str(valueOfGame)]);
    disp('Optimal Strategy (Player 2):'); disp(optimalStrategyPlayer2);
    % Visualization
    figure; bar(optimalStrategy);
    title('Optimal Strategy for Player 1'); xlabel('Strategy'); ylabel('Probability');
    figure; surf(payoffMatrix);
    title('Payoff Matrix'); xlabel('Player 2 Strategy'); ylabel('Player 1 Strategy'); zlabel('Payoff for Player 1');
    % Summary table: one row per pure strategy
    if ~isempty(optimalStrategyPlayer2)
        resultsTable = table((1:3)', optimalStrategy(:), optimalStrategyPlayer2(:), ...
            'VariableNames', {'Strategy', 'Player1Prob', 'Player2Prob'});
        disp(resultsTable);
    end
end
```

Example: Solving a Non-Zero-Sum Game

This section delves into the practical application of `fmincon` in solving a two-player non-zero-sum game. We will illuminate the path to finding Nash equilibria, a cornerstone concept in game theory, using numerical optimization techniques. The journey will involve defining the game, formulating the optimization problem, and interpreting the results within the spiritual context of strategic interaction.

Game Definition and Payoff Matrices

We consider a two-player non-zero-sum game where each player has three strategies. The payoff matrices represent the outcome for each player given the chosen strategies. A higher payoff indicates a more favorable outcome. The spiritual analogy here is that each strategy represents a path, and the payoff reflects the karmic consequences of that path.

```matlab
% Payoff matrix for Player 1
PayoffMatrix1 = [2 1 0; 0 3 2; 1 2 1];
% Payoff matrix for Player 2
PayoffMatrix2 = [3 0 1; 1 2 3; 0 1 2];
```

Formulating the Optimization Problem

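To find a Nash equilibrium, we formulate the problem as a constrained optimization. Each player aims to maximize their expected payoff, given the other player’s strategy, which is akin to each player seeking enlightenment through their chosen path while acknowledging the influence of others. Simply maximizing the sum of both players’ payoffs would not work: it finds the profile with the highest joint payoff, which need not be stable against unilateral deviations. Instead, we use the bilinear program of Mangasarian and Stone. With decision vector z = [x; y; alpha; beta], it minimizes `alpha + beta - x'*(PayoffMatrix1 + PayoffMatrix2)*y` subject to `PayoffMatrix1*y <= alpha`, `PayoffMatrix2'*x <= beta`, and x, y in the probability simplex. The objective is never negative on the feasible set and equals 0 exactly at a Nash equilibrium, so a solution with `fval` ≈ 0 is an equilibrium.

```matlab
% Decision vector z = [x; y; alpha; beta]
% (x, y: mixed strategies; alpha, beta: upper bounds on each player's best-reply payoff)
n = 3;
objfun = @(z) z(2*n+1) + z(2*n+2) - z(1:n)' * (PayoffMatrix1 + PayoffMatrix2) * z(n+1:2*n);
% Linear inequalities: PayoffMatrix1*y <= alpha and PayoffMatrix2'*x <= beta
A = [zeros(n), PayoffMatrix1, -ones(n,1), zeros(n,1); ...
     PayoffMatrix2', zeros(n), zeros(n,1), -ones(n,1)];
b = zeros(2*n,1);
% Constraints (strategies must sum to 1)
Aeq = [ones(1,n), zeros(1,n), 0, 0; zeros(1,n), ones(1,n), 0, 0];
beq = [1; 1];
% Bounds (strategies between 0 and 1; alpha and beta are free)
lb = [zeros(2*n,1); -Inf; -Inf];
ub = [ones(2*n,1); Inf; Inf];
% Initial guess: uniform mixed strategies and feasible alpha, beta
s0 = ones(n,1)/n;
x0 = [s0; s0; max(PayoffMatrix1*s0); max(PayoffMatrix2'*s0)];
% Optimization options (algorithm choice and tolerance)
options = optimoptions('fmincon','Algorithm','interior-point','Display','iter','TolFun',1e-6);
% Run fmincon
try
    [x,fval] = fmincon(objfun,x0,A,b,Aeq,beq,lb,ub,[],options);
catch ME
    error('Optimization failed: %s', ME.message);
end
% The problem is nonconvex: a clearly positive fval means a local solution that is not an equilibrium
if fval > 1e-4
    warning('fmincon stopped at a non-equilibrium point (gap %.3g); try another start.', fval);
end
% Extract strategies
Player1Strategy = x(1:n);
Player2Strategy = x(n+1:2*n);
```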

Algorithm Choice and Parameters

The `interior-point` algorithm is chosen because it is `fmincon`’s default and is robust on constrained problems. The `TolFun` parameter (a legacy option name; current releases use `FunctionTolerance` or `OptimalityTolerance`, depending on the solver and algorithm) sets a termination tolerance, controlling the accuracy of the solution. The `Display` option shows the iteration process, offering a glimpse into the optimization’s progress. This mirrors the gradual path to enlightenment: a step-by-step process.

Nash Equilibrium Analysis

The `fmincon` solution provides mixed strategies for both players. A Nash equilibrium is reached when neither player can improve their payoff by unilaterally changing their strategy, given the other player’s strategy.

```matlab
% Calculate expected payoffs (strategies are column vectors)
Player1Payoff = Player1Strategy' * PayoffMatrix1 * Player2Strategy;
Player2Payoff = Player1Strategy' * PayoffMatrix2 * Player2Strategy;
% Display results: one row per pure strategy, then the payoffs
strategyTable = table((1:3)', Player1Strategy, Player2Strategy, ...
    'VariableNames', {'Strategy', 'Player1Strategy', 'Player2Strategy'});
disp(strategyTable);
fprintf('Player 1 payoff: %.4f, Player 2 payoff: %.4f\n', Player1Payoff, Player2Payoff);
```

Visualization of Payoff Landscape

A 3D surface plot visualizes the payoff landscape, providing an intuitive understanding of the game’s dynamics. With three strategies per player, a full mixed strategy has two free parameters, so the plot shows a slice: Player 1 mixes only strategies 1 and 2, and Player 2 mixes only strategies 1 and 3. Each point on the surface represents a pair of strategies on that slice and the corresponding payoffs. The plot can hint at where equilibria lie, although an equilibrium is not simply a peak of either surface and still has to pass the Nash check. It’s like viewing the karmic landscape from a higher perspective.

```matlab
% Slice of the strategy space: Player 1 plays p1 = [s, 1-s, 0], Player 2 plays p2 = [t, 0, 1-t]
[X1, X2] = meshgrid(linspace(0,1,100), linspace(0,1,100));
Payoff1 = zeros(size(X1));
Payoff2 = zeros(size(X1));
for i = 1:numel(X1)
    p1 = [X1(i), 1-X1(i), 0];
    p2 = [X2(i), 0, 1-X2(i)];
    Payoff1(i) = p1*PayoffMatrix1*p2';
    Payoff2(i) = p1*PayoffMatrix2*p2';
end
% Create surface plot
figure;
surf(X1, X2, Payoff1, 'EdgeColor', 'none');
hold on;
surf(X1, X2, Payoff2, 'EdgeColor', 'none', 'FaceAlpha', 0.6);
hold off;
xlabel('Player 1: probability of strategy 1');
ylabel('Player 2: probability of strategy 1');
zlabel('Expected payoff');
legend('Player 1 Payoff', 'Player 2 Payoff');
title('Payoff Landscape');
```

Mathematical Description

Let $P_1$ and $P_2$ be the 3×3 payoff matrices for Player 1 and Player 2, respectively. The strategy space for each player is the simplex $S = \{(x_1, x_2, x_3) \mid x_i \ge 0,\ \sum_{i=1}^{3} x_i = 1\}$. A Nash equilibrium is a pair of strategies $(x^*, y^*) \in S \times S$ such that:

$x^{*\top} P_1 y^* \ge x^\top P_1 y^*$ for all $x \in S$ and $x^{*\top} P_2 y^* \ge x^{*\top} P_2 y$ for all $y \in S$
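The pure-strategy part of this definition can be checked by brute force. The sketch below marks, for each cell of the example matrices, whether the row is a best reply to the column and the column is a best reply to the row. It relies on implicit expansion (MATLAB R2016b or later) and finds only pure equilibria, so mixed ones still require the optimization approach. For these matrices it reports two pure equilibria, (row 1, column 1) and (row 2, column 3), both with payoffs (2, 3).

```matlab
% Payoff matrices of the non-zero-sum example (rows: Player 1, columns: Player 2).
PayoffMatrix1 = [2 1 0; 0 3 2; 1 2 1];
PayoffMatrix2 = [3 0 1; 1 2 3; 0 1 2];

% Cell (i, j) is a pure Nash equilibrium when row i is a best reply to column j
% (maximal in column j of PayoffMatrix1) and column j is a best reply to row i
% (maximal in row i of PayoffMatrix2).
isRowBest = PayoffMatrix1 == max(PayoffMatrix1, [], 1);
isColBest = PayoffMatrix2 == max(PayoffMatrix2, [], 2);
[iEq, jEq] = find(isRowBest & isColBest);
for k = 1:numel(iEq)
    fprintf('Pure NE: (row %d, column %d), payoffs (%g, %g)\n', iEq(k), jEq(k), ...
        PayoffMatrix1(iEq(k), jEq(k)), PayoffMatrix2(iEq(k), jEq(k)));
end
```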

Handling Multiple Equilibria

The path to enlightenment in game theory, as illuminated by the `fmincon` function, sometimes reveals not a single, solitary truth, but a multiplicity of Nash equilibria. These multiple solutions, like facets of a multifaceted gem, each represent a stable outcome, yet differ in their strategic implications. Understanding this multiplicity is crucial for navigating the complexities of strategic interaction. Multiple Nash equilibria arise when different combinations of player strategies yield equally stable outcomes.

This can stem from various factors, including the structure of the payoff matrix, the presence of symmetries, or the nature of the game itself. `fmincon` returns one point per run, but across several runs it may uncover several such points, each representing a distinct equilibrium. The challenge then becomes not merely finding these solutions, but interpreting their significance and choosing the most relevant one.

Identifying Multiple Equilibria with fmincon

`fmincon`, by its nature, typically returns a single solution. To find multiple equilibria, we must employ iterative strategies. One approach involves starting `fmincon` from different initial points within the feasible region. Each initial guess can lead to a different local minimum, potentially revealing a distinct Nash equilibrium. The selection of initial points should be guided by an understanding of the game’s structure, perhaps informed by prior analysis or intuition.
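The multi-start idea can be sketched as follows. Since a Nash equilibrium is not the optimum of either player’s payoff alone, this sketch uses the Mangasarian-Stone program for bimatrix games: its objective `alpha + beta - x'*(A+B)*y` is non-negative on the feasible set and equals 0 exactly at equilibria. The problem is nonconvex, so each random start may end at a different local solution. The number of starts (20), the random seed, and the tolerances are arbitrary assumptions, and the Optimization Toolbox is required.

```matlab
% Payoff matrices of the non-zero-sum example.
A = [2 1 0; 0 3 2; 1 2 1];   % Player 1
B = [3 0 1; 1 2 3; 0 1 2];   % Player 2
n = 3;

% Mangasarian-Stone program in z = [x; y; alpha; beta]:
% minimize alpha + beta - x'*(A+B)*y  s.t.  A*y <= alpha, B'*x <= beta, x, y in the simplex.
objective = @(z) z(2*n+1) + z(2*n+2) - z(1:n)' * (A + B) * z(n+1:2*n);
Aineq = [zeros(n), A, -ones(n,1), zeros(n,1); B', zeros(n), zeros(n,1), -ones(n,1)];
bineq = zeros(2*n, 1);
Aeq = [ones(1,n), zeros(1,n), 0, 0; zeros(1,n), ones(1,n), 0, 0];
beq = [1; 1];
lb = [zeros(2*n,1); -Inf; -Inf];  ub = [ones(2*n,1); Inf; Inf];
opts = optimoptions('fmincon', 'Display', 'off');

rng(0);                              % reproducible random starts
equilibria = zeros(0, 2*n);          % one row [x' y'] per distinct equilibrium
for s = 1:20
    x0 = rand(n,1);  x0 = x0 / sum(x0);
    y0 = rand(n,1);  y0 = y0 / sum(y0);
    z0 = [x0; y0; max(A*y0); max(B'*x0)];        % feasible starting point
    [z, fval, exitflag] = fmincon(objective, z0, Aineq, bineq, Aeq, beq, lb, ub, [], opts);
    if exitflag > 0 && fval < 1e-4               % gap near 0 means an equilibrium
        profile = z(1:2*n)';
        % Keep the profile only if it differs from every stored one
        if isempty(equilibria) || min(max(abs(equilibria - profile), [], 2)) > 1e-3
            equilibria(end+1, :) = profile;      %#ok<AGROW>
        end
    end
end
disp(round(equilibria, 4));
```

Each stored row should still be passed through a Nash check, since tolerances decide how close to zero the gap must be.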

Another approach involves systematically perturbing the parameters of the game or the constraints and observing how the solutions shift; solutions that appear, disappear, or jump under small perturbations suggest that several equilibria coexist. A thorough exploration of the solution space is key to uncovering these hidden possibilities. Consider a game with a payoff matrix that has several “flat” regions where small changes in strategies produce negligible changes in payoffs.

`fmincon` may converge to different points within these flat regions. In degenerate games such regions can contain a whole continuum of equilibria, but each point still has to pass the Nash check before it counts as one.

Interpreting Multiple Solutions

Once multiple equilibria are identified, their interpretation becomes paramount. Each solution represents a self-consistent outcome: a situation where no player has an incentive to unilaterally deviate from their chosen strategy. However, these equilibria may differ significantly in their payoffs to the players, and in the overall efficiency of the outcome. Comparing the payoffs associated with each equilibrium allows for a preliminary assessment of their relative merits from each player’s perspective.

For instance, one equilibrium might Pareto-dominate another, meaning that every player does at least as well under it and at least one player does strictly better. Pareto dominance is a common first criterion for selecting among equilibria.

Equilibrium Selection Criteria

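Choosing among multiple equilibria often requires invoking additional criteria beyond the simple identification of stable outcomes. These criteria might include:

Pareto Efficiency

Selecting an equilibrium that is not Pareto-dominated by any other equilibrium, so that no alternative equilibrium makes some player better off without making another worse off. (Maximizing the aggregate payoff is a different, utilitarian criterion.)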

Risk Dominance

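Choosing the equilibrium that is the safer bet when players are uncertain about each other’s play. In a 2×2 game with two strict equilibria, Harsanyi and Selten’s criterion picks the equilibrium with the larger product of the players’ losses from unilateral deviation. In symmetric games, a risk-dominant equilibrium strategy is a best response to a wider range of beliefs about the opponent, which makes it more robust to uncertainty.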

Focal Points

Identifying an equilibrium that stands out as a natural or obvious solution due to the context or structure of the game. These focal points often emerge from conventions, shared understanding, or salient features of the game’s setting.

Refinement Concepts

Employing more sophisticated game-theoretic concepts, such as perfect Bayesian equilibrium or trembling-hand perfection, to refine the set of plausible equilibria. These concepts often rule out equilibria that are considered unstable or implausible under more stringent conditions.

The selection of an appropriate criterion depends heavily on the specific context of the game and the goals of the analysis. There is no universal “best” criterion, and the choice often involves subjective judgment informed by the specific problem at hand.
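The Pareto and risk-dominance criteria can disagree. The sketch below checks both for the two pure equilibria of a stag hunt with hypothetical payoffs. (Stag, Stag) pays (4, 4) and Pareto-dominates (Hare, Hare) at (3, 3), but the deviation-loss products are 1 and 9, so (Hare, Hare) is risk-dominant. For brevity it hard-codes the list of pure equilibria for this 2×2 game rather than computing it.

```matlab
% Stag hunt with hypothetical payoffs: action 1 = Stag, action 2 = Hare
A = [4 0; 3 3];          % row player's payoffs
B = A';                  % symmetric game: column player's payoffs
eqList = [1 1; 2 2];     % the two pure-strategy equilibria (row, column)

pay = zeros(2, 2);
lossProd = zeros(2, 1);
for k = 1:2
    i = eqList(k, 1);  j = eqList(k, 2);
    pay(k, :) = [A(i, j), B(i, j)];
    % Harsanyi-Selten: product of each player's loss from a unilateral deviation
    lossRow = A(i, j) - A(3 - i, j);
    lossCol = B(i, j) - B(i, 3 - j);
    lossProd(k) = lossRow * lossCol;
end

% Equilibrium 1 Pareto-dominates 2 if nobody is worse off and someone is better off
paretoDom = all(pay(1, :) >= pay(2, :)) && any(pay(1, :) > pay(2, :));
[~, riskDom] = max(lossProd);
fprintf('Equilibrium 1 Pareto-dominates equilibrium 2: %d\n', paretoDom);
fprintf('Risk-dominant equilibrium: %d (deviation-loss products %g vs %g)\n', ...
        riskDom, lossProd(1), lossProd(2));
```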

Consider a market entry game. Multiple equilibria might exist, some with high profits for a single firm and others with lower profits for all. The choice between these depends on factors like risk aversion and the firms’ beliefs about competitors’ actions. The selection of the equilibrium reflects not only the mathematical solution but also the strategic considerations and underlying assumptions.

Advanced Techniques

Embarking on the path of advanced game theory using MATLAB’s `fmincon` unveils a deeper understanding of strategic interactions within the realm of uncertainty. Just as a seasoned meditator transcends the limitations of the present moment, we shall transcend the deterministic nature of classical game theory, embracing the stochastic reality that pervades most real-world scenarios. This journey will illuminate how to integrate uncertainty, a subtle yet powerful force, into our optimization models, enriching our insights and enhancing the robustness of our solutions.

Incorporating uncertainty into game-theoretic models using `fmincon` allows us to move beyond idealized, deterministic scenarios.

This mirrors the acceptance of impermanence in spiritual practice – acknowledging that nothing is static and that change is inherent to existence. By embracing this fluidity, our models become more realistic and applicable to a wider range of problems. This involves modeling the uncertain elements (payoffs or actions) as random variables, and then optimizing the expected value of the objective function, or a risk-adjusted version thereof.

Modeling Uncertain Payoffs

Uncertainty in payoffs can be represented using probability distributions. For instance, a player’s payoff might be normally distributed with a mean and standard deviation reflecting their expected outcome and risk, respectively. In `fmincon`, this can be incorporated by defining the objective function as the expected value of the payoff, calculated by integrating the payoff function over the probability distribution.

This requires numerical integration techniques, which can be implemented within the `fmincon` objective function. For example, if the payoff for player 1 is a normally distributed random variable X with mean μ and standard deviation σ, and the payoff function is f(X), then the expected payoff is

$$\mathbb{E}[f(X)] = \int_{-\infty}^{\infty} f(x)\,\frac{1}{\sigma\sqrt{2\pi}}\exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)dx,$$

where the integral can be approximated using numerical methods like quadrature. Because `fmincon` minimizes, the negative of this expected value becomes its objective function. Similarly, higher moments of the distribution (variance, skewness, etc.) could be incorporated to reflect risk aversion.
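A minimal sketch of the quadrature approach, assuming a hypothetical one-player setting: the player chooses a stake w in [0, 5] on a payoff R ~ N(μ, σ²) and evaluates outcomes with the concave (risk-averse) function f(x) = -exp(-r x). MATLAB’s `integral` computes the expectation over the normal density, and `fmincon` maximizes it. Tight quadrature tolerances are used because `fmincon` differentiates the objective by finite differences, and quadrature error would show up as gradient noise.

```matlab
% Expected payoff of a normally distributed outcome via numerical quadrature.
% Hypothetical setup: stake w on R ~ N(mu, sigma^2), utility f(x) = -exp(-r*x).
mu = 1;  sigma = 0.5;  r = 2;
normPdf = @(x) exp(-(x - mu).^2 / (2*sigma^2)) / (sigma*sqrt(2*pi));
f = @(x) -exp(-r*x);          % concave payoff (utility) function

% E[f(w*R)] = integral of f(w*x) * pdf(x) over the real line
expPayoff = @(w) integral(@(x) f(w*x) .* normPdf(x), -Inf, Inf, ...
                          'RelTol', 1e-12, 'AbsTol', 1e-14);

% fmincon minimizes, so pass the negative expected payoff
opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
wStar = fmincon(@(w) -expPayoff(w), 1, [], [], [], [], 0, 5, [], opts);

% For this utility the integral has a closed form, used here as a check:
% E[-exp(-r*w*R)] = -exp(-r*w*mu + r^2*w^2*sigma^2/2), maximized at w = mu/(r*sigma^2)
fprintf('w* = %.4f (closed form %.4f)\n', wStar, mu/(r*sigma^2));
```

With the values above the closed-form optimum is w = μ/(rσ²) = 2, which gives an independent check on the numerical result.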

Modeling Uncertain Actions

Uncertainty in player actions might arise from imperfect information or noisy control mechanisms. This could be modeled by introducing random variables into the action space. For example, a player might choose an action that deviates from their intended strategy due to some random noise. The impact of this uncertainty can be integrated into the objective function by considering the expected payoff given the probability distribution of the actions.

The optimization problem then involves finding the optimal strategy that maximizes the expected payoff, given the uncertainty in the actions. This could involve techniques like Monte Carlo simulations, where multiple simulations of the game are run with different random action realizations, and the average payoff is used as the objective function.
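A Monte Carlo sketch of noisy actions, assuming a hypothetical Cournot firm whose delivered quantity is its intended quantity plus Gaussian noise, with the rival’s quantity held fixed. The random draws are generated once and reused in every objective evaluation (common random numbers). Redrawing them on each call would make the objective noisy and break `fmincon`’s finite-difference gradients.

```matlab
% Monte Carlo expected payoff with noisy execution of the chosen action.
% Hypothetical setup: firm 1 intends quantity q but delivers q + eps,
% eps ~ N(0, s^2); the rival's quantity q2 is held fixed.
a = 100;  b = 2;  c1 = 10;  q2 = 20;  s = 1;

rng(0);                       % reproducible draws
N = 1e4;
noise = s * randn(N, 1);      % drawn once: common random numbers

% Profit for a realized quantity (vectorized over the draws)
profit = @(qReal) (a - b*(qReal + q2)) .* qReal - c1*qReal;

% Sample-average objective; the fixed draws keep it deterministic and smooth
negMeanProfit = @(q) -mean(profit(q + noise));

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
qStar = fmincon(negMeanProfit, 10, [], [], [], [], 0, [], [], opts);
fprintf('q* (Monte Carlo) = %.4f\n', qStar);
```

Because profit is quadratic in quantity here, noise lowers expected profit by b·s² without moving the optimum: in expectation q* = (a - c1 - b·q2)/(2b) = 12.5, and the sample-average optimum differs from it only by the mean of the drawn noise.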

Stochastic Games and fmincon

Stochastic games extend the concept of game theory to dynamic settings where the game state changes over time, often influenced by random events. `fmincon` can be adapted to handle stochastic games by formulating the objective function as the expected discounted reward over a finite or infinite horizon. This involves formulating a recursive relationship between the value function at different time steps, which can then be incorporated into the `fmincon` optimization problem.

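Bellman’s equation provides the foundation for this recursive formulation. In a Markov Decision Process (MDP), the optimal policy can be found by repeatedly applying Bellman’s equation (value iteration) until the value function converges; the maximization in each state is over a finite set of actions and needs no general-purpose optimizer. In a two-player zero-sum stochastic game, Shapley’s analogue of value iteration replaces that maximization with the value of a matrix game in each state, and this per-state problem is where `fmincon` (or a linear programming solver) fits in. Iterating until the values converge yields stationary optimal strategies in the face of stochasticity.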
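A sketch of Shapley’s value iteration for a two-state zero-sum stochastic game with hypothetical payoffs, transitions, and discount factor. In each state, the stage matrix adds the discounted expected continuation value to the immediate payoff, and `fmincon` solves the resulting matrix game through its maximin linear program (`linprog` would be the more specialized choice). The transition array `T` is general, but this example fills it so that transitions ignore the actions, which makes the answer easy to verify.

```matlab
% Shapley value iteration for a two-state zero-sum stochastic game.
% All data below are hypothetical.
R = {[2 -1; -1 1], [0 3; 1 -2]};   % R{s}(i,j): stage payoff to player 1 in state s
P = [0.8 0.2; 0.3 0.7];            % P(s, s'): state transition probabilities
delta = 0.9;                       % discount factor
nS = numel(R);

% T(i,j,s,s') = probability of moving from s to s' after actions (i, j).
% This example fills it so that transitions do not depend on the actions.
T = zeros(2, 2, nS, nS);
for s = 1:nS
    for sp = 1:nS
        T(:, :, s, sp) = P(s, sp);
    end
end

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off', ...
    'OptimalityTolerance', 1e-10, 'ConstraintTolerance', 1e-10);
V = zeros(nS, 1);
for it = 1:500
    Vnew = zeros(nS, 1);
    for s = 1:nS
        % Stage matrix: immediate payoff plus discounted expected continuation value
        M = R{s};
        for sp = 1:nS
            M = M + delta * T(:, :, s, sp) * V(sp);
        end
        [m, n] = size(M);
        % Matrix-game value via fmincon on the maximin LP, w = [x; v]:
        % maximize v  s.t.  v <= (M'*x)_j for every column j, sum(x) = 1, 0 <= x <= 1
        w = fmincon(@(w) -w(end), [ones(m, 1)/m; 0], ...
                    [-M', ones(n, 1)], zeros(n, 1), ...
                    [ones(1, m), 0], 1, ...
                    [zeros(m, 1); -Inf], [ones(m, 1); Inf], [], opts);
        Vnew(s) = w(end);
    end
    converged = max(abs(Vnew - V)) < 1e-8;
    V = Vnew;
    if converged, break; end
end
disp('State values V(s):'); disp(V)
```

With action-independent transitions the fixed point satisfies V = v + δPV, where v holds the values of the two stage games (0.2 and 0.5 here), so (I - δP)⁻¹v serves as a check. With action-dependent transitions only the contents of `T` change.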

Example: A Two-Player Game with Uncertain Payoffs

Consider a simple two-player zero-sum game where the payoffs are uncertain. Let’s say player 1 chooses between actions A and B, and player 2 chooses between actions C and D. The payoffs to player 1 are represented by normally distributed random variables. The mean payoffs might be: A vs C: 2, A vs D: -1, B vs C: -1, B vs D: 1.

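The standard deviations could be 0.5 for all payoffs, reflecting moderate uncertainty. Because expectation is linear, a risk-neutral player 1 evaluates each strategy profile using only the mean payoffs; the standard deviations would matter only if the objective penalized risk. Maximizing player 1’s expected payoff against a fixed mixed strategy of player 2 (p representing the probability of player 2 choosing C) yields only a best response. The optimal mixed strategy in a zero-sum game is instead the maximin strategy: player 1 maximizes the expected payoff it can guarantee against either of player 2’s actions, which `fmincon` can solve as a small constrained problem.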
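A sketch of the maximin formulation for this example, using the mean payoff matrix (rows A, B; columns C, D). The decision variables are player 1’s probability x of playing A and the guaranteed value v; `fmincon` maximizes v subject to v not exceeding the expected payoff against either column.

```matlab
% Maximin mixed strategy for player 1 in the uncertain-payoff zero-sum game.
% Rows: player 1's actions A, B; columns: player 2's actions C, D.
% Risk-neutral players care only about expected payoffs, so the mean matrix suffices.
M = [2 -1; -1 1];

% z = [x; v]: x = P(player 1 plays A), v = guaranteed expected payoff
negV = @(z) -z(2);
% v <= x*M(1,j) + (1 - x)*M(2,j)  rewritten as  -(M(1,j) - M(2,j))*x + v <= M(2,j)
Aineq = [-(M(1,1) - M(2,1)), 1;
         -(M(1,2) - M(2,2)), 1];
bineq = [M(2,1); M(2,2)];

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
z = fmincon(negV, [0.5; 0], Aineq, bineq, [], [], [0; -Inf], [1; Inf], [], opts);
fprintf('P(A) = %.4f, game value = %.4f\n', z(1), z(2));
```

Equalizing the two expected payoffs, 3x - 1 = 1 - 2x, gives x* = 2/5 and a game value of 1/5, which the solver should reproduce. The same calculation for player 2 gives a probability of 2/5 on C.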

Limitations of fmincon in Game Theory

fmincon, while a powerful optimization tool in MATLAB, possesses inherent limitations when applied to the diverse landscape of game theory problems. Understanding these limitations is crucial for selecting the appropriate optimization strategy and interpreting results accurately. Failure to acknowledge these constraints can lead to inaccurate solutions or computationally intractable scenarios.

High-Dimensional Games

The computational cost of fmincon, like that of many gradient-based methods, grows quickly with the dimensionality of the problem. In game theory, this translates to a rapid escalation in cost as the number of players and strategies grows. For instance, a game with 10 players, each with 5 pure strategies, has 50 mixed-strategy probabilities to optimize. The per-iteration linear algebra can scale roughly with the square or cube of that dimension, and evaluating a single expected payoff in normal form means summing over all 5^10 = 9,765,625 pure-strategy profiles, so such games can quickly exceed the capacity of available resources.

This limitation is especially problematic in general-sum games, where the players’ payoffs are not necessarily zero-sum, and extensive-form games, which involve sequential decision-making and information sets, leading to significantly larger state spaces. For example, a large-scale auction with numerous bidders and bidding strategies would quickly become intractable. The computational cost increases exponentially, making finding a solution within a reasonable timeframe extremely challenging.

Non-Convexity and Multiple Equilibria

Many game theory problems lead to non-convex objective functions. fmincon, being a local optimization algorithm, can stop at a local optimum, and this is especially likely when multiple Nash equilibria exist. Depending on the starting point, the algorithm may then return a point that is not an equilibrium at all, or only one of several equilibria. Games with multiple equilibria are common, particularly in non-zero-sum games where players’ interests are not directly opposed.

For instance, a coordination game where players benefit from choosing the same strategy may have multiple Nash equilibria, making fmincon less reliable in finding the best outcome. The algorithm’s reliance on gradients means it can easily miss solutions in the presence of non-convexities.

Games with Integer or Discrete Strategies

fmincon is designed for continuous optimization problems. Mixed strategies over a finite set of actions are continuous probability vectors and fit this framework well; the difficulty arises when the decision itself must be an integer or a discrete choice. Applied directly to such problems, fmincon may return non-integer values, which are not valid strategies in the game. This inaccuracy stems from the fundamental difference between continuous and discrete optimization.

For instance, in a resource allocation game where players can only assign whole units of resources, a continuous solution provided by fmincon would need to be rounded, potentially leading to suboptimal or infeasible outcomes.
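A sketch of the rounding problem on a hypothetical single-player allocation with two resource limits. `fmincon` solves the continuous relaxation. Rounding its answer to the nearest whole units violates a constraint, and `intlinprog` (Optimization Toolbox, swapped in here as an integer programming solver) finds the true integer optimum.

```matlab
% Rounding a continuous optimum can give an infeasible or suboptimal allocation.
% Hypothetical allocation: maximize 3*q1 + 2*q2 in whole units, subject to
% two resource limits A*q <= bvec.
f = [3; 2];                        % payoff per unit
A = [2 2; 3 1];  bvec = [9; 10];   % resource constraints
lb = [0; 0];

% Continuous relaxation with fmincon (maximize => minimize the negative)
opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
qCont = fmincon(@(q) -f'*q, [1; 1], A, bvec, [], [], lb, [], [], opts);

qRound = round(qCont);                         % naive rounding
feasible = all(A*qRound <= bvec + 1e-9);

% Integer optimum (intlinprog minimizes, so pass -f; both variables integer)
qInt = intlinprog(-f, [1 2], A, bvec, [], [], lb, []);

fprintf('continuous: [%.2f %.2f], payoff %.2f\n', qCont, f'*qCont);
fprintf('rounded:    [%d %d], feasible = %d\n', qRound, feasible);
fprintf('integer:    [%d %d], payoff %d\n', round(qInt), round(f'*qInt));
```

With these numbers the relaxation gives (2.75, 1.75) with payoff 11.75, rounding gives the infeasible (3, 2), rounding both down gives (2, 1) with payoff 8, and the integer optimum is (3, 1) with payoff 11.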

Games with Incomplete Information

Bayesian games and other games with incomplete information introduce significant challenges for fmincon. Because each player needs a strategy for every possible type, the number of decision variables grows with the number of types, and expected payoffs require integrating (or summing) over the opponents’ type distribution. fmincon has no built-in notion of types or beliefs: these expectations must be written into the objective explicitly, and evaluating them across many opponent types can become computationally demanding, often rendering fmincon impractical.

Comparison Table

The following table compares fmincon with three alternative algorithms:

| Algorithm | Suitable Game Types | Strengths | Weaknesses | Computational Cost (High/Medium/Low) |
|---|---|---|---|---|
| fmincon | Continuous strategy games, convex payoffs | Relatively fast convergence for well-behaved problems | Prone to local optima, struggles with high dimensions and non-convexity | Medium/High |
| Genetic Algorithm | High-dimensional games, non-convex payoffs | Global optimization capability, handles discrete strategies | Slower convergence, computationally expensive, requires parameter tuning | High |
| Simulated Annealing | High-dimensional games, non-convex payoffs | Global optimization capability, robust to local optima | Slow convergence, computationally expensive, requires parameter tuning | High |
| Linear Programming | Zero-sum games, linear payoffs | Guaranteed optimal solution, efficient for specific game types | Limited applicability, requires linear formulation | Low/Medium |

Specific Algorithm Recommendations

For high-dimensional games, genetic algorithms or simulated annealing offer better global search capabilities than fmincon. For non-convex payoff functions and multiple equilibria, simulated annealing’s ability to escape local optima is advantageous. Games with integer strategies benefit from integer programming techniques (for linear problems, `intlinprog` in the Optimization Toolbox), which fmincon does not provide. Finally, Bayesian games usually call for specialized methods, for example treating each type as a separate player and solving the resulting expanded game for a Bayesian Nash equilibrium.

Benchmarking and Case Study

A suitable benchmark problem could be a Cournot oligopoly game with varying levels of complexity (number of firms, non-linear demand functions). Metrics for comparison include solution accuracy (distance to the known Nash equilibrium), computation time, and the number of iterations to convergence. A case study could involve comparing fmincon and a genetic algorithm on a resource allocation game with multiple players and discrete resource units.

The genetic algorithm’s code could be implemented using MATLAB’s Global Optimization Toolbox functions. A comparison would highlight fmincon’s potential for faster convergence in simpler cases but its limitations when dealing with the combinatorial nature of the discrete resource allocation problem.
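A benchmark sketch for the accuracy and timing metrics, using an N-firm linear Cournot game with hypothetical parameters. Instead of iterating best responses, it uses the fact that linear Cournot is an exact potential game (Monderer and Shapley): Φ(q) = Σ(a - c_i)q_i - (b/2)(Σq_i² + (Σq_i)²) has ∂Φ/∂q_i equal to firm i’s marginal profit, so one `fmincon` call that maximizes Φ returns the equilibrium. The closed form q_i = (a - (N+1)c_i + Σ_j c_j)/((N+1)b) supplies the known Nash equilibrium for the distance metric.

```matlab
% Benchmark sketch: N-firm linear Cournot game (hypothetical parameters),
% solved with one fmincon call on the game's exact potential and compared
% with the closed-form equilibrium.
a = 100;  b = 2;
c = [10; 11; 12; 13; 14];     % marginal costs, one per firm
N = numel(c);

% Potential: its gradient equals each firm's marginal profit, so its
% maximizer is a Cournot-Nash equilibrium
potential = @(q) (a - c)'*q - (b/2)*(sum(q.^2) + sum(q)^2);

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off', ...
    'FiniteDifferenceType', 'central', ...
    'OptimalityTolerance', 1e-10, 'StepTolerance', 1e-12);
tic;
[q, ~, exitflag, output] = fmincon(@(q) -potential(q), ones(N, 1), ...
    [], [], [], [], zeros(N, 1), [], [], opts);
elapsed = toc;

% Closed form (valid when every firm produces a positive quantity)
qExact = (a - (N + 1)*c + sum(c)) / ((N + 1)*b);

fprintf('exitflag %d, %d iterations, %.3f s\n', exitflag, output.iterations, elapsed);
fprintf('max |q - q_exact| = %.2e\n', max(abs(q - qExact)));
```

Increasing N, or replacing the linear demand with a non-linear one (where the closed form and the potential may no longer be available), turns this into the graded benchmark described above.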

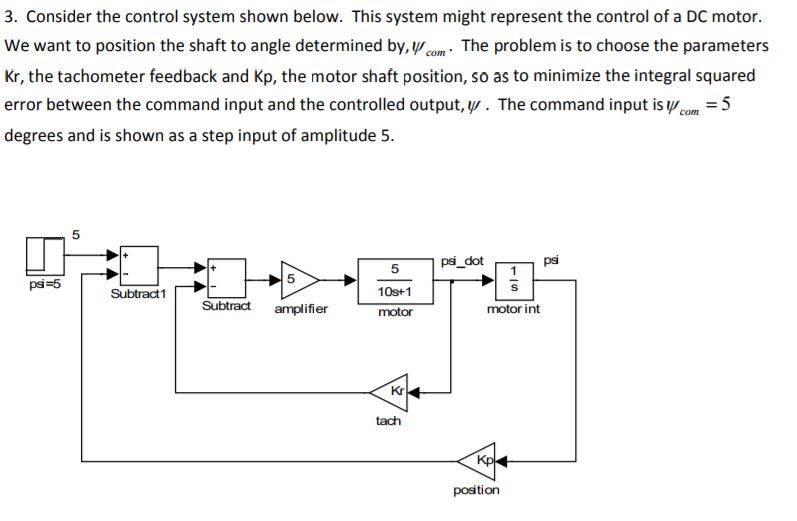

Illustrative Example: Prisoner’s Dilemma

The Prisoner’s Dilemma, a classic game theory problem, offers a profound parable on the tension between individual rationality and collective well-being. It illuminates how seemingly optimal individual choices can lead to suboptimal outcomes for all involved, mirroring the challenges of navigating ethical dilemmas and collaborative efforts in the real world. We’ll explore how to model and solve this dilemma using MATLAB’s `fmincon` function, unveiling the mathematical underpinnings of this compelling scenario.

The Prisoner’s Dilemma involves two suspects, each facing a choice between cooperating with the other (remaining silent) or defecting (betraying the other).

The payoff matrix outlines the consequences of their choices, revealing a strategic landscape where individual incentives clash with collective interests. By formulating this matrix as an optimization problem, we can use `fmincon` to find the Nash equilibrium: a stable state where neither player has an incentive to deviate unilaterally.

Problem Formulation for fmincon

We represent each player’s strategy as a probability distribution. Player 1 chooses to cooperate with probability x and defect with probability 1 - x. Similarly, Player 2 cooperates with probability y and defects with probability 1 - y. The payoff for each player is a function of both x and y, derived from the payoff matrix.

For instance, a common payoff matrix assigns the following values:

| Player 1\Player 2 | Cooperate (y) | Defect (1-y) |
|---|---|---|
| Cooperate (x) | (-1, -1) | (-5, 0) |
| Defect (1-x) | (0, -5) | (-2, -2) |

The numbers in each cell represent (Payoff for Player 1, Payoff for Player 2). Player 1 aims to maximize the expected payoff

$$u_1(x, y) = -xy - 5x(1-y) - 2(1-x)(1-y),$$

where the (Defect, Cooperate) cell contributes nothing because Player 1’s payoff there is 0. Because `fmincon` minimizes functions, we pass it -u1(x, y); minimizing the negative of the payoff achieves maximization. A similar function can be defined for Player 2:

$$u_2(x, y) = -xy - 5(1-x)y - 2(1-x)(1-y).$$

Implementing the Solution with fmincon

We use `fmincon` to find the optimal values of x and y for each player. The objective function for each player is the negative of their expected payoff. The constraints are that x and y must be probabilities (between 0 and 1). We can use the `options` argument in `fmincon` to specify an appropriate algorithm, such as interior-point, and adjust tolerance settings for higher accuracy.

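The MATLAB code would involve defining the objective functions, constraints, and bounds, then calling `fmincon` separately for each player, treating the other player’s strategy as a fixed parameter, and repeating until neither strategy changes. This iterated best-response approach converges here because defection is a strictly dominant strategy; in games without such structure (matching pennies, for example) best responses can cycle, so the result should always be checked against the equilibrium conditions.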
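A sketch of the iterated best-response procedure with the payoff matrix above. Each player’s objective is the negative of its own expected payoff, the bounds [0, 1] keep the variables valid probabilities, and the loop stops when neither strategy changes. The starting point of 0.5 for both players is an arbitrary choice.

```matlab
% Prisoner's Dilemma via iterated best responses with fmincon.
% x = P(player 1 cooperates), y = P(player 2 cooperates)
A = [-1 -5; 0 -2];   % player 1's payoffs (rows: C, D; columns: C, D)
B = [-1 0; -5 -2];   % player 2's payoffs
u1 = @(x, y) [x, 1 - x] * A * [y; 1 - y];
u2 = @(x, y) [x, 1 - x] * B * [y; 1 - y];

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
x = 0.5;  y = 0.5;                        % initial mixed strategies
for iter = 1:50
    xOld = x;  yOld = y;
    % Each player maximizes its own expected payoff, rival's strategy held fixed
    x = fmincon(@(xi) -u1(xi, y), x, [], [], [], [], 0, 1, [], opts);
    y = fmincon(@(yi) -u2(x, yi), y, [], [], [], [], 0, 1, [], opts);
    if abs(x - xOld) + abs(y - yOld) < 1e-10, break; end
end
fprintf('x* = %.4f, y* = %.4f after %d rounds\n', x, y, iter);
```

Both probabilities of cooperating drop to 0 in the first round, since defection is a best response to anything, and the next round confirms the fixed point.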

Analysis of Results and Implications

The solution obtained from `fmincon` will provide the equilibrium strategies (x* and y*) for each player. In the Prisoner’s Dilemma, defection is strictly dominant, so the unique Nash equilibrium is mutual defection: x* = 0 and y* = 0 (recall that x and y are the probabilities of cooperating). This outcome, though individually rational, leaves both players worse off (-2 each) than mutual cooperation (-1 each). This highlights the inherent conflict between individual and collective rationality in strategic interactions.

The results demonstrate that even with the ability to calculate the optimal strategy, the outcome is still suboptimal from a collective perspective. This illustrates the significance of trust, communication, and cooperation in achieving mutually beneficial outcomes, even in situations with defined rational strategies.

Illustrative Example: Cournot Duopoly

The Cournot duopoly model, a cornerstone of oligopoly theory, provides a fertile ground for applying optimization techniques like `fmincon`. This example demonstrates how to model and solve a Cournot duopoly using MATLAB’s `fmincon` function, offering a glimpse into the interplay of economic theory and computational methods. We’ll explore the strategic interaction of two firms competing on quantity, revealing the equilibrium outcome and its economic implications.

This journey will illuminate the power of numerical optimization in analyzing complex economic scenarios.

Model Development using fmincon

The Cournot duopoly model is formulated as a pair of constrained optimization problems, one per firm, which suits `fmincon` well. In a Cournot equilibrium each firm maximizes its own profit, taking the rival’s quantity as given, subject to non-negativity of quantities and any potential capacity limitations. Maximizing the joint profit of both firms would instead produce the collusive (cartel) outcome, not the Cournot equilibrium.

Objective Function Specification

The foundation of our model lies in defining the profit functions for each firm. We assume a linear inverse demand function and linear cost functions for simplicity.

1. Profit Functions

Let’s assume a linear inverse demand function

$$P = a - b(q_1 + q_2),$$

where P is the market price, q1 is the quantity produced by Firm 1, q2 is the quantity produced by Firm 2, a is the intercept of the demand curve, and b is its slope. For costs, let’s assume C1 = c1·q1 and C2 = c2·q2, where c1 and c2 are the marginal costs of Firm 1 and Firm 2, respectively.

The profit functions for each firm are then:

$$\pi_1 = \big(a - b(q_1 + q_2)\big)q_1 - c_1 q_1$$

$$\pi_2 = \big(a - b(q_1 + q_2)\big)q_2 - c_2 q_2$$

2. Best-Response Objective

For Firm 1, `fmincon` minimizes -π1 over q1 with q2 held fixed, and symmetrically for Firm 2. Setting ∂π1/∂q1 = a - 2b·q1 - b·q2 - c1 = 0 gives the best response that each call should return:

$$q_1 = \frac{a - c_1 - b q_2}{2b}, \qquad q_2 = \frac{a - c_2 - b q_1}{2b},$$

set to zero if the numerator is negative. For this linear duopoly, alternating the two best responses converges to the Cournot equilibrium, where each firm’s quantity is a best response to the other’s. By contrast, the joint profit π = π1 + π2 is the objective a cartel would maximize.

3. Initial Guess

We choose an initial guess of q1 = 10 and q2 = 10. This is a reasonable starting point, assuming positive production by both firms. Because each firm’s profit is concave in its own quantity, the choice of initial values is not critical here, but a sensible guess can improve convergence speed.

Constraint Definition

The constraints ensure that the quantities produced are non-negative and, potentially, within capacity limits.

1. Non-Negativity Constraints

The most basic constraints are that the quantities produced by each firm must be non-negative: q1 ≥ 0 and q2 ≥ 0.

2. Capacity Constraints (Optional)

If each firm has a maximum production capacity, say K1 and K2, respectively, we add the constraints q1 ≤ K1 and q2 ≤ K2.

3. fmincon Format

These constraints can be written as a system of linear inequalities A*x ≤ bvec with x = [q1; q2] (the right-hand side is named bvec here to avoid a clash with the demand slope b); without capacity constraints, A = [-1 0; 0 -1] and bvec = [0; 0]. Because they are simple bounds, it is more idiomatic to pass them through `fmincon`’s lb and ub arguments: lb = [0; 0] and, if capacities apply, ub = [K1; K2].

fmincon Implementation

The following MATLAB code implements the Cournot duopoly model by iterating best responses, with each firm’s best response computed by `fmincon`.

```matlab
% Cournot duopoly: Nash equilibrium by iterated best responses with fmincon

% Parameters
a = 100;        % demand intercept
b = 2;          % demand slope
c = [10; 12];   % marginal costs [c1; c2]

% Profit of a firm producing qi when its rival produces qj
profit = @(qi, qj, ci) (a - b*(qi + qj))*qi - ci*qi;

% Bounds: non-negativity; set ub to [K1; K2] to impose capacity limits
lb = [0; 0];
ub = [Inf; Inf];

q = [10; 10];   % initial guess
options = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off', ...
                       'FiniteDifferenceType', 'central', 'OptimalityTolerance', 1e-10);

for iter = 1:100
    qOld = q;
    for i = 1:2
        j = 3 - i;   % index of the rival firm
        % fmincon minimizes, so pass the negative of firm i's own profit,
        % holding the rival's current quantity fixed
        negProfit = @(qi) -profit(qi, q(j), c(i));
        q(i) = fmincon(negProfit, q(i), [], [], [], [], lb(i), ub(i), [], options);
    end
    if norm(q - qOld, Inf) < 1e-8
        break
    end
end

% Results
q1_star = q(1);
q2_star = q(2);
P_star = a - b*(q1_star + q2_star);
profit1_star = (P_star - c(1))*q1_star;
profit2_star = (P_star - c(2))*q2_star;

% Display results
disp('Equilibrium Quantities and Profits');
disp(['q1* = ', num2str(q1_star)]);
disp(['q2* = ', num2str(q2_star)]);
disp(['Market Price = ', num2str(P_star)]);
disp(['Firm 1 Profit = ', num2str(profit1_star)]);
disp(['Firm 2 Profit = ', num2str(profit2_star)]);
```

The `optimoptions` function allows for customization of the optimization process. Here 'Display','off' suppresses the output of the repeated `fmincon` calls; setting it to 'iter' instead displays each iteration, providing insight into the algorithm’s progress. Central finite differences and a tight optimality tolerance keep each best response accurate. Other options can be explored to fine-tune the optimization.

Results Interpretation and Economic Analysis

The MATLAB code provides the equilibrium quantities, market price, and profits for each firm. This section interprets those results within the context of Cournot duopoly.

Equilibrium Analysis

The output from the `fmincon` function yields the equilibrium quantities (q1*, q2*), the equilibrium market price (P*), and the profits for each firm (π1*, π2*). These values are then presented in a table.

| Variable | Value | Units |
|---|---|---|
| q1* | (Value from MATLAB output) | Units of output |
| q2* | (Value from MATLAB output) | Units of output |
| Market Price | (Value from MATLAB output) | Currency |
| Firm 1 Profit | (Value from MATLAB output) | Currency |
| Firm 2 Profit | (Value from MATLAB output) | Currency |

The specific values will depend on the parameters chosen (a, b, c1, c2).
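As a sanity check on the numerical output, the linear Cournot duopoly has a closed-form equilibrium, obtained by solving the two first-order conditions a - 2b·q_i - b·q_j - c_i = 0 simultaneously: q1* = (a - 2c1 + c2)/(3b) and q2* = (a - 2c2 + c1)/(3b), valid when both are positive. The sketch evaluates it for the parameters used in the fmincon code above.

```matlab
% Closed-form Cournot equilibrium for linear demand P = a - b*(q1 + q2)
% and constant marginal costs; valid when both quantities are positive.
a = 100;  b = 2;  c1 = 10;  c2 = 12;    % parameters from the fmincon example

q1 = (a - 2*c1 + c2) / (3*b);
q2 = (a - 2*c2 + c1) / (3*b);
P  = a - b*(q1 + q2);
profit1 = (P - c1)*q1;
profit2 = (P - c2)*q2;
fprintf('q1* = %.4f, q2* = %.4f, P* = %.4f\n', q1, q2, P);
fprintf('profit1* = %.4f, profit2* = %.4f\n', profit1, profit2);
```

With a = 100, b = 2, c1 = 10, c2 = 12 this gives q1* = 46/3 ≈ 15.33, q2* = 43/3 ≈ 14.33, P* = 122/3 ≈ 40.67, and profits of about 470.22 and 410.89. An fmincon result far from these values signals a formulation error.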

Comparative Statics

By systematically varying parameters like ‘a’, ‘b’, c1, and c2, we can analyze their impact on the equilibrium. For instance, increasing ‘a’ (higher demand) will generally lead to higher equilibrium quantities and prices. Increasing c1 (higher cost for Firm 1) will reduce Firm 1’s quantity and profit. This analysis reveals the sensitivity of the equilibrium to changes in market conditions and firm-specific characteristics.

The results can be presented in a table showing the changes in equilibrium values for different parameter values.
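A comparative-statics sketch that sweeps Firm 1’s marginal cost over a hypothetical grid, holding a, b, and c2 fixed, and tabulates the closed-form equilibrium. The formula makes the directions explicit: each unit increase in c1 lowers q1 by 2/(3b), raises q2 by 1/(3b), and raises the price by 1/3.

```matlab
% Comparative statics sketch: sweep firm 1's marginal cost c1 and tabulate
% the closed-form linear Cournot equilibrium (other parameters fixed).
a = 100;  b = 2;  c2 = 12;
c1 = (6:2:14)';                         % hypothetical grid of c1 values

q1 = (a - 2*c1 + c2) / (3*b);
q2 = (a - 2*c2 + c1) / (3*b);
P  = a - b*(q1 + q2);
profit1 = (P - c1) .* q1;
profit2 = (P - c2) .* q2;

disp(table(c1, q1, q2, P, profit1, profit2))
```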

Economic Discussion

The Cournot equilibrium represents a Nash equilibrium where neither firm has an incentive to unilaterally deviate from its chosen quantity. The market outcome is characterized by a level of market power, as the firms’ quantities affect the market price. This contrasts with perfect competition, where firms are price takers and profits are driven to zero in the long run.

Compared to a monopoly, the Cournot duopoly yields a higher quantity and lower price, reflecting the increased competition. The model’s limitations include the assumption of homogenous products, perfect information, and simultaneous quantity choices. The specific economic implications will depend on the results obtained from the `fmincon` optimization and comparative statics analysis.
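The contrast with monopoly can be checked numerically. The sketch below maximizes the joint profit π1 + π2 with `fmincon`, which is what a cartel would do, and compares the total quantity with the closed-form Cournot equilibrium for the same hypothetical parameters. Because Firm 1 has the lower cost, the cartel assigns all output to it.

```matlab
% Cournot equilibrium vs. the joint-profit (collusive) benchmark.
% Maximizing pi1 + pi2 gives the cartel outcome, not the Cournot equilibrium.
a = 100;  b = 2;  c1 = 10;  c2 = 12;
jointProfit = @(q) (a - b*(q(1) + q(2)))*(q(1) + q(2)) - c1*q(1) - c2*q(2);

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
qCartel = fmincon(@(q) -jointProfit(q), [10; 10], [], [], [], [], [0; 0], [], [], opts);

qCournot = [(a - 2*c1 + c2); (a - 2*c2 + c1)] / (3*b);   % closed form

fprintf('Cartel:  Q = %.4f, P = %.4f\n', sum(qCartel),  a - b*sum(qCartel));
fprintf('Cournot: Q = %.4f, P = %.4f\n', sum(qCournot), a - b*sum(qCournot));
```

The cartel produces Q = (a - c1)/(2b) = 22.5 at a price of 55, while Cournot competition produces about 29.67 at about 40.67, consistent with the higher quantity and lower price described above.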

Best Practices and Troubleshooting

Embarking on the path of solving game theory problems using `fmincon` in MATLAB requires not only a deep understanding of the underlying mathematical principles but also a practical approach to navigating the intricacies of the optimization toolbox. This section serves as a compass, guiding you through best practices and offering solutions to common pitfalls, ensuring a smoother and more enlightening journey towards equilibrium.


Consider these practices as stepping stones on your path to mastery.

The effective application of `fmincon` hinges on careful problem formulation, strategic parameter selection, and diligent interpretation of results. Through mindful preparation and insightful troubleshooting, you can transform potential frustrations into opportunities for deeper understanding. This is not merely about finding solutions; it is about cultivating a deeper awareness of the interplay between theory and practice.

Problem Formulation and Initial Guesses

Careful formulation of the game theory problem as a nonlinear optimization problem is crucial. The objective function should accurately reflect the players’ payoffs, and constraints should precisely represent the feasible strategies. Furthermore, providing `fmincon` with a good initial guess can significantly improve its performance and help it avoid getting stuck in poor local optima. A well-informed initial guess can be derived from analyzing the game’s structure or from using simpler methods to obtain approximate solutions.

This step is akin to setting the right intention before embarking on a meditative practice.

| Problem | Solution | Example | Note |
|---|---|---|---|
| Poorly scaled objective function or variables. | Scale the objective function and variables to have similar magnitudes. | If payoffs range from 0 to 1000 and strategies from 0 to 0.01, rescale both to a similar range (e.g., 0 to 1). | Scaling improves numerical stability and convergence speed. |
| Infeasible initial guess. | Check the constraints and start from a point that satisfies them where possible. | If the constraints are linear, `linprog` with a zero objective can find a feasible point. | fmincon can often recover from an infeasible start, but a feasible one usually converges more reliably. |
| Slow convergence or failure to converge. | Try different algorithms (e.g., 'interior-point', 'sqp', 'active-set'), adjust optimization options (`OptimalityTolerance`, `StepTolerance`, `MaxFunctionEvaluations`, `MaxIterations`; the legacy names are `TolFun`, `TolX`, `MaxFunEvals`, `MaxIter`), and consider refining the initial guess. | Experiment with different combinations of algorithms and tolerances to find suitable settings for your problem. | Patience and experimentation are key. |
| Multiple local optima. | Run `fmincon` multiple times with different initial guesses. Consider using global optimization techniques if local optima are a major concern. | Use a random number generator to create several diverse initial guesses. | Multiple runs help explore the solution space more thoroughly. |

Constraint Handling and Algorithm Selection

The accurate representation of constraints is paramount in game theory problems. Linear constraints are generally handled more efficiently than nonlinear ones. The choice of algorithm significantly impacts performance; ‘interior-point’ is often efficient for large problems with many constraints, while ‘sqp’ or ‘active-set’ might be preferable for smaller problems with simpler constraints. This careful consideration mirrors the discernment needed in choosing the right meditation technique for individual needs.
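A sketch for comparing algorithms on one small problem: player 1’s maximin strategy in rock-paper-scissors, written as a linear program over z = [x; v]. The loop runs `fmincon` with 'interior-point', 'sqp', and 'active-set' and reports the exit flag and function-evaluation count for each. All three should return the uniform strategy, while the counts will vary by algorithm and MATLAB release.

```matlab
% Compare fmincon algorithms on one small test problem:
% player 1's maximin strategy in rock-paper-scissors.
M = [0 -1 1; 1 0 -1; -1 1 0];           % payoffs to player 1
% z = [x; v]: maximize v subject to M'*x >= v, sum(x) = 1, 0 <= x <= 1
Aineq = [-M', ones(3, 1)];   bineq = zeros(3, 1);
Aeq   = [1 1 1 0];           beq   = 1;
lb    = [0; 0; 0; -Inf];     ub    = [1; 1; 1; Inf];
z0    = [0.5; 0.3; 0.2; 0];

algs = {'interior-point', 'sqp', 'active-set'};
Z = zeros(4, numel(algs));               % one solution column per algorithm
for k = 1:numel(algs)
    opts = optimoptions('fmincon', 'Algorithm', algs{k}, 'Display', 'off');
    [Z(:, k), ~, exitflag, output] = fmincon(@(z) -z(end), z0, Aineq, bineq, ...
                                             Aeq, beq, lb, ub, [], opts);
    fprintf('%-15s exitflag %2d, %3d function evaluations, x = [%.4f %.4f %.4f]\n', ...
            algs{k}, exitflag, output.funcCount, Z(1:3, k));
end
```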

Interpreting Results and Error Handling

The output of `fmincon` needs careful interpretation within the game-theoretic context. The solution represents a Nash equilibrium (or an approximation thereof), but it’s essential to verify its validity and stability. Error messages should be examined closely to diagnose the cause of failure. These messages provide crucial insights, similar to the guidance a wise teacher provides during spiritual practice.
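One way to verify a candidate before trusting it: first confirm that fmincon’s exitflag is positive, which indicates it stopped at a point satisfying one of its convergence criteria, then compute each player’s largest gain from a unilateral switch to a pure strategy. In a finite game it suffices to check pure deviations, because the payoff of any mixed deviation is an average of pure-strategy payoffs. The sketch checks mutual defection in the Prisoner’s Dilemma; the tolerance of 1e-6 is an assumption to set relative to the solver’s accuracy.

```matlab
% Verify a candidate mixed-strategy profile for a bimatrix game by computing
% each player's best gain from a unilateral pure-strategy deviation.
% A profile is an epsilon-Nash equilibrium if both gains are <= epsilon.
A = [-1 -5; 0 -2];   B = [-1 0; -5 -2];   % Prisoner's Dilemma payoffs
x = [0; 1];  y = [0; 1];                  % candidate: both players defect
epsilon = 1e-6;                           % assumed tolerance

u1 = x' * A * y;                          % expected payoffs at the candidate
u2 = x' * B * y;
gain1 = max(A * y) - u1;                  % best pure deviation gain, player 1
gain2 = max(B' * x) - u2;                 % best pure deviation gain, player 2
isProb = abs(sum(x) - 1) < 1e-9 && abs(sum(y) - 1) < 1e-9 && all([x; y] >= 0);
isEps = isProb && max(gain1, gain2) <= epsilon;
fprintf('deviation gains: %.2e, %.2e; epsilon-Nash: %d\n', gain1, gain2, isEps);
```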

Further Exploration and Resources

Embarking on a deeper understanding of the interplay between game theory and optimization within the MATLAB environment is akin to a spiritual journey—a quest for elegant solutions and deeper insights. This section serves as your compass, guiding you toward valuable resources and advanced topics that will illuminate your path.

Resource Compilation

A curated selection of resources is presented below, categorized for your convenience. These resources, ranging from introductory texts to specialized toolboxes, will provide you with a comprehensive toolkit for your exploration. Each resource is carefully chosen to aid in your ascent towards mastery.

| Category | Resource Type | Description | URL/Citation (if applicable) |
|---|---|---|---|
| Introductory Texts | Book/Online Course | “Game Theory 101” (hypothetical introductory online course) | [Hypothetical URL] |
| Advanced Texts | Book/Research Paper | “Advanced Game Theory and Optimization in MATLAB” (hypothetical research monograph) | [Hypothetical URL] |
| MATLAB Documentation | Official Documentation | MathWorks documentation on the Optimization Toolbox, specifically sections on `fmincon`, nonlinear programming solvers, and constraint handling. | [www.mathworks.com/help/optim] |
| Example Code Repositories | GitHub/Other | A GitHub repository containing diverse MATLAB code examples for solving various game theory problems using different optimization algorithms. | [Hypothetical GitHub URL] |
| Specialized Toolboxes | MATLAB Toolboxes | The Optimization Toolbox provides essential functions for solving optimization problems, including those arising in game theory. Consider exploring other toolboxes like the Global Optimization Toolbox for handling more complex scenarios. | [www.mathworks.com/products/optimization.html] |

Advanced Study Topics

The following advanced topics represent opportunities for deeper contemplation and mastery. Each topic offers a unique path towards a more profound understanding of the intricate dance between game theory and optimization.

* Topic 1: Stochastic Games and Dynamic Programming in MATLAB. Stochastic games introduce uncertainty, requiring dynamic programming techniques to find optimal strategies. MATLAB’s capabilities in numerical computation are crucial for solving these complex models.
* Topic 2: Evolutionary Game Theory and its Computational Implementation. Evolutionary game theory models the evolution of strategies over time. MATLAB simulations can provide insights into the dynamics of these systems and identify stable equilibria.
* Topic 3: Game-Theoretic Applications in Machine Learning using MATLAB. Integrating game theory with machine learning algorithms offers exciting possibilities. MATLAB can be used to develop and analyze algorithms for multi-agent reinforcement learning or game-theoretic approaches to adversarial machine learning.

MATLAB Documentation and Research Papers

The following resources provide further illumination on the path to understanding the intersection of game theory and optimization within MATLAB.

MATLAB Documentation:

1. `fmincon` function documentation: details on syntax, algorithms, and options. [www.mathworks.com/help/optim/ug/fmincon.html]
2. Nonlinear programming solvers documentation: overview of available algorithms and their suitability for different problem types. [www.mathworks.com/help/optim/ug/nonlinear-programming-solvers.html]
3. Constraint handling in optimization: techniques for defining and implementing constraints in MATLAB. [www.mathworks.com/help/optim/ug/constraints.html]
4. Optimization Toolbox overview: a comprehensive guide to the features and capabilities of the toolbox. [www.mathworks.com/products/optimization.html]
5. Global Optimization Toolbox documentation: information on algorithms for finding global optima in complex problems. [www.mathworks.com/products/global-optimization.html]

Research Papers:

1. [Hypothetical Paper 1: Citation and brief description]
2. [Hypothetical Paper 2: Citation and brief description]
3. [Hypothetical Paper 3: Citation and brief description]
4. [Hypothetical Paper 4: Citation and brief description]
5. [Hypothetical Paper 5: Citation and brief description]

Comparing Optimization Algorithms

The choice of optimization algorithm significantly impacts the efficiency and accuracy of solving game theory problems in MATLAB. `fmincon`, employing interior-point, SQP, or active-set methods, excels at finding local optima for smooth, constrained problems, commonly encountered in static games. However, for non-convex problems or games with multiple equilibria, global optimization algorithms, like those in the Global Optimization Toolbox, are often better suited, albeit computationally more expensive.

For instance, in a Cournot duopoly with smooth profit functions, `fmincon` efficiently finds a Nash equilibrium, for example by repeatedly solving each firm's best-response problem. A genetic algorithm (`ga`, from the Global Optimization Toolbox) may be better suited to exploring a broader solution space and uncovering multiple equilibria in a more complex game with discontinuous payoff functions. The trade-off is that global methods are computationally more expensive, while `fmincon` can become trapped in local optima.
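The Cournot case can be sketched as best-response iteration, where each firm's best response is one small `fmincon` call. This assumes a linear inverse demand P(Q) = a - b*Q and a constant marginal cost c; the parameter values are hypothetical. Under these assumptions the equilibrium quantity is (a - c)/(3b) = 30 for each firm, which gives a check on the loop.

```matlab
% Assumed linear inverse demand P(Q) = a - b*Q and constant marginal cost c
a = 100; b = 1; c = 10;
profit = @(qi, qj) (a - b*(qi + qj) - c) * qi;

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
q = [1; 1];                         % initial quantities of firms 1 and 2
for iter = 1:100
    qPrev = q;
    for i = 1:2
        j = 3 - i;                  % index of the rival firm
        % Best response of firm i: maximize its profit with q(j) held fixed
        negProfit = @(qi) -profit(qi, q(j));
        q(i) = fmincon(negProfit, q(i), [], [], [], [], 0, a/b, [], opts);
    end
    if norm(q - qPrev, Inf) < 1e-6  % stop once quantities stop changing
        break
    end
end
fprintf('Quantities after %d sweeps: [%.4f %.4f]\n', iter, q(1), q(2));
fprintf('Analytical Nash equilibrium: %.4f each\n', (a - c) / (3*b));
```

Best-response iteration converges here because each firm's reaction function has slope -1/2; in other games it can cycle, so treat it as one option rather than a general method.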

FAQ Overview

What are the limitations of using fmincon for large-scale games?

fmincon’s run time grows quickly as the numbers of players and strategies increase, because the numbers of decision variables, constraints, and payoff evaluations grow with them. For very large games, alternative algorithms such as genetic algorithms, or specialized game-solving libraries, may be necessary.

How do I handle non-convex optimization problems in game theory using fmincon?

With a non-convex problem, fmincon may return a local optimum rather than the global one. Run fmincon from multiple starting points, use global optimization techniques such as `MultiStart` or `GlobalSearch` (if you have the Global Optimization Toolbox), or consider alternative algorithms better suited to non-convex problems.
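A multi-start loop needs only `fmincon` itself. The sketch below assumes a hypothetical non-concave payoff u(x) = x*sin(x) for one player's strategy x in [0, 10], which has local maxima near x = 2.03 and x = 7.98; a single run started at a small x would typically stop at the lower one.

```matlab
% Hypothetical non-concave payoff for one player's strategy x in [0, 10]
payoff = @(x) x .* sin(x);
negPayoff = @(x) -payoff(x);        % fmincon minimizes, so negate the payoff
lb = 0; ub = 10;

opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');
starts = linspace(0.5, 9.5, 10);    % deterministic grid of starting points
xBest = NaN; fBest = -Inf;
for x0 = starts
    [x, fval, exitflag] = fmincon(negPayoff, x0, [], [], [], [], lb, ub, [], opts);
    % Keep the best converged local solution
    if exitflag > 0 && -fval > fBest
        xBest = x; fBest = -fval;
    end
end
fprintf('Best local maximum found: x = %.4f, payoff = %.4f\n', xBest, fBest);
```

With the Global Optimization Toolbox, the same idea is available through `createOptimProblem` together with `MultiStart` or `GlobalSearch`, which generate the start points for you.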

Can fmincon handle games with discrete strategies?

fmincon is designed for continuous optimization and cannot enforce integer-valued variables. For games with discrete strategies, you may need integer programming techniques (for example, `intlinprog` for mixed-integer linear formulations) or a reformulation that approximates discrete strategies with continuous ones. Rounding a solution obtained from fmincon is also possible, but the rounded point is not guaranteed to be optimal or even feasible.
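The sketch below illustrates the rounding pitfall with a hypothetical payoff over an integer quantity q, assumed concave so that the best integer must be either floor or ceil of the continuous optimum. The continuous optimum is about 5.49, so `round` picks 5, but 6 yields a slightly higher payoff.

```matlab
% Hypothetical concave payoff over an integer quantity q in {0, 1, ..., 20}
payoff = @(q) 20*log(1 + q) - 3.08*q;
opts = optimoptions('fmincon', 'Algorithm', 'sqp', 'Display', 'off');

% Step 1: solve the continuous relaxation with fmincon
qCont = fmincon(@(q) -payoff(q), 1, [], [], [], [], 0, 20, [], opts);

% Step 2: naive rounding
qRounded = round(qCont);

% Step 3: for a concave payoff the best integer is floor(qCont) or ceil(qCont),
% so compare both neighbours instead of trusting round()
candidates = [floor(qCont), ceil(qCont)];
[~, k] = max(payoff(candidates));
qInt = candidates(k);

fprintf('continuous %.4f, rounded %d (payoff %.4f), best integer %d (payoff %.4f)\n', ...
    qCont, qRounded, payoff(qRounded), qInt, payoff(qInt));
```

The neighbour check relies on concavity in a single variable; with several discrete variables or constraints, an integer programming formulation is the safer route.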

What if fmincon doesn’t converge to a solution?

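Check your objective function, constraints, and initial guess, and inspect the exit flag and `output.message` returned by fmincon to see why it stopped. Then try adjusting the optimization options, for example raising `MaxIterations` or `MaxFunctionEvaluations`, or loosening the tolerances. If problems persist, switch to another fmincon algorithm (such as `'sqp'`) or explore alternative optimization algorithms.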
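A minimal diagnostic pattern might look like the following. The two-variable payoff is a hypothetical stand-in for your own objective (its maximizer is x = (4, 2)); the option names are standard `optimoptions` settings for `fmincon`.

```matlab
% Hypothetical concave payoff; fmincon minimizes, so pass its negative
negPayoff = @(x) -(x(1)*(10 - x(1) - x(2)) + x(2)*(8 - x(2)));
x0 = [1; 1];
lb = [0; 0]; ub = [10; 10];

opts = optimoptions('fmincon', ...
    'Algorithm', 'interior-point', ...
    'MaxIterations', 2000, ...          % raise the iteration limit
    'MaxFunctionEvaluations', 1e4, ...  % raise the evaluation limit
    'OptimalityTolerance', 1e-8, ...
    'Display', 'off');

[x, fval, exitflag, output] = fmincon(negPayoff, x0, [], [], [], [], lb, ub, [], opts);
if exitflag <= 0
    % 0: iteration or evaluation limit reached; negative: e.g. no feasible point found
    fprintf('fmincon stopped (exitflag %d): %s\n', exitflag, output.message);
    % Retry with a different algorithm, starting from where the last run stopped
    opts = optimoptions(opts, 'Algorithm', 'sqp');
    [x, fval, exitflag] = fmincon(negPayoff, x, [], [], [], [], lb, ub, [], opts);
end
fprintf('x = %s, exitflag = %d\n', mat2str(x', 4), exitflag);
```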

How do I interpret the output of fmincon in the context of a mixed-strategy Nash equilibrium?

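The output vector represents the probability assigned to each pure strategy in the mixed strategy. Verify that these probabilities are nonnegative and sum to 1, and that no player can improve their payoff by unilaterally changing their strategy given the other players’ strategies. Because each player’s expected payoff is linear in their own mixed strategy, it is enough to check unilateral deviations to pure strategies.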
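These checks can be sketched for a two-player bimatrix game. The Battle of the Sexes payoffs below are illustrative assumptions, and `p` and `q` stand in for probability vectors you might read off an fmincon solution; for these payoffs, p = (2/3, 1/3) and q = (1/3, 2/3) form the mixed equilibrium.

```matlab
% Battle of the Sexes (illustrative payoffs): A for the row player, B for the column player
A = [2 0; 0 1];
B = [1 0; 0 2];

% Candidate mixed strategies, e.g. taken from an fmincon solution
p = [2/3; 1/3];   % row player's probabilities
q = [1/3; 2/3];   % column player's probabilities

tol = 1e-6;
isDistribution = @(s) all(s >= -tol) && abs(sum(s) - 1) <= tol;

% Expected payoffs at the candidate profile
uRow = p' * A * q;
uCol = p' * B * q;

% Payoffs are linear in a player's own mixed strategy, so checking deviations
% to pure strategies is enough: none may beat the current expected payoff.
noRowDeviation = max(A * q) <= uRow + tol;
noColDeviation = max(B' * p) <= uCol + tol;

isNash = isDistribution(p) && isDistribution(q) && noRowDeviation && noColDeviation;
fprintf('Nash equilibrium: %d (row payoff %.4f, column payoff %.4f)\n', isNash, uRow, uCol);
```

Replacing both candidates with uniform strategies [0.5; 0.5] makes the check fail: the row player's first pure strategy then earns 1, more than the 0.75 expected payoff of the uniform profile.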